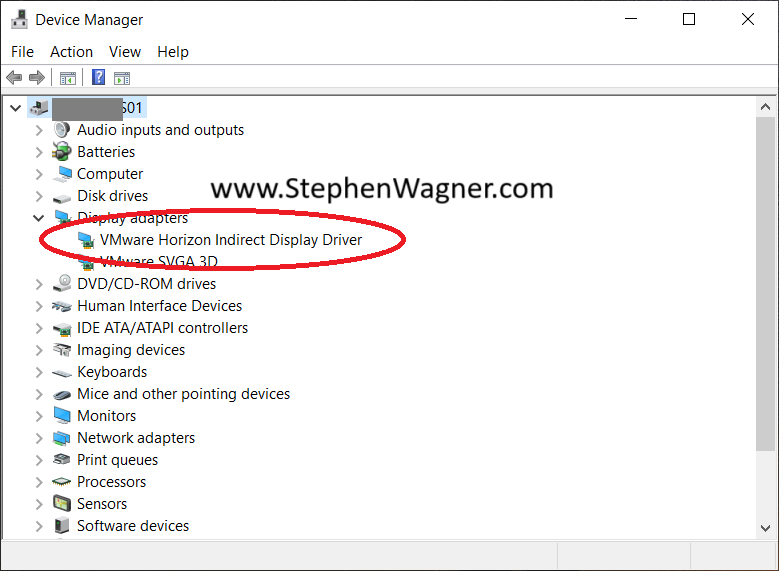

Normally, any NVIDIA vGPU enabled VMs have to be migrated manually with vMotion to evacuate a host that is being placed into maintenance mode. While we may have grown accustomed to this, there is a better way: vGPU enabled VM DRS evacuation during maintenance mode!

vSphere 7.0 U3f introduced the ability to configure and allow automatic vMotion of VMs with vGPUs, meaning that DRS can now migrate your VDI and AI/ML vGPU enabled workloads when hosts are placed into maintenance mode. This also allows you to streamline remediation with vLCM when updating hosts that run vGPU enabled VMs.

Additionally, as of vSphere 8.0 U2, DRS can estimate the STUN time required for vMotion of vGPU enabled VMs and control whether automatic DRS vMotions are allowed. This STUN time limit can be set by an administrator.

Enable automatic vMotion evacuation of vGPU enabled VMs

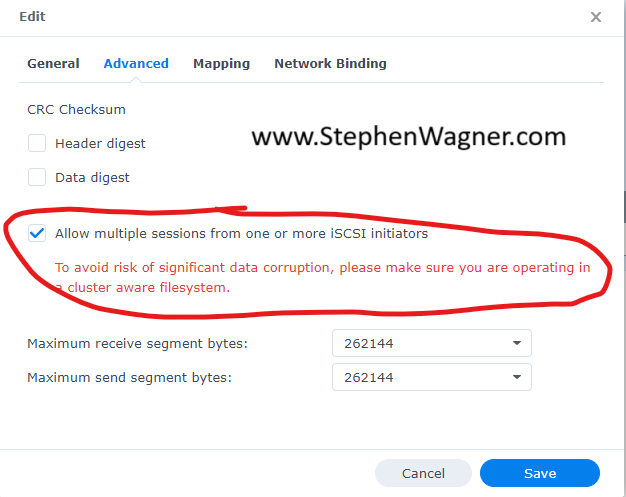

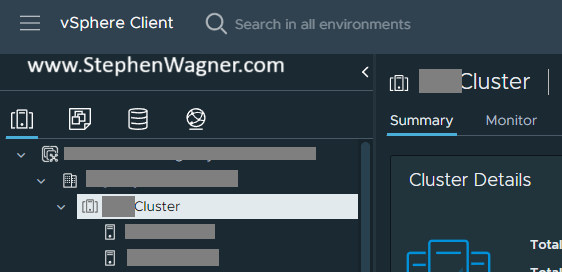

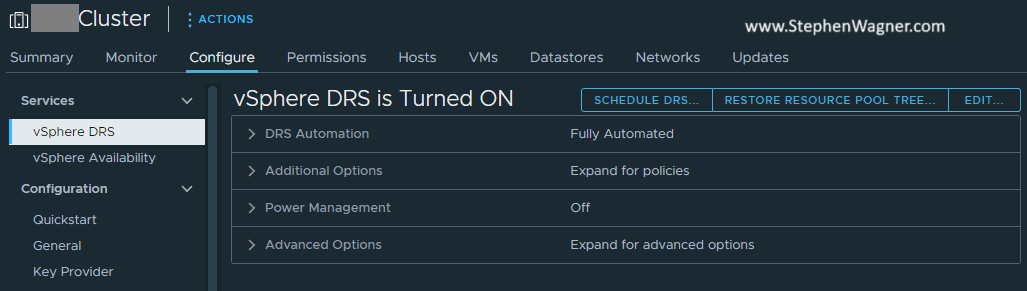

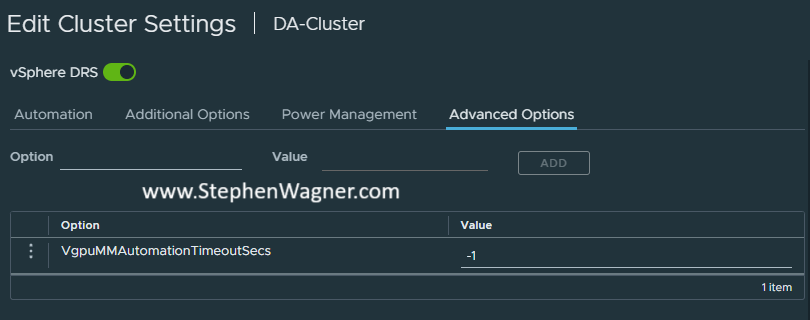

To enable the automatic vMotion of vGPU enabled VMs on your vSphere Cluster:

- Navigate to your vSphere Cluster.

- Click on the “Configure” Tab, and then select “vSphere DRS”, and click “Edit”.

- Navigate to the “Advanced Options” tab.

- Add “VgpuMMAutomationTimeoutSecs” and set to “-1”.
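
If you would rather script this change than click through the UI, the steps above might look roughly like the following with pyVmomi. Treat it as a sketch under assumptions, not a tested procedure: it assumes pyVmomi is installed, that `cluster` is a `vim.ClusterComputeResource` you have already retrieved from a connected session, and the helper names `evacuation_option` and `apply_drs_options` are hypothetical. Only the option name and value come from the steps above.

```python
def evacuation_option() -> dict:
    """Return the DRS advanced option from the steps above as a key/value pair."""
    return {"VgpuMMAutomationTimeoutSecs": "-1"}


def apply_drs_options(cluster, options: dict):
    """Add advanced options to a cluster's DRS configuration.

    Assumption: `cluster` is a vim.ClusterComputeResource obtained from a
    live vCenter session. Returns the reconfigure task so the caller can
    wait on it.
    """
    from pyVmomi import vim  # imported lazily; requires pyVmomi to be installed

    spec = vim.cluster.ConfigSpecEx(
        drsConfig=vim.cluster.DrsConfigInfo(
            option=[
                vim.option.OptionValue(key=key, value=value)
                for key, value in options.items()
            ]
        )
    )
    # modify=True applies the spec incrementally rather than replacing
    # the whole cluster configuration.
    return cluster.ReconfigureComputeResource_Task(spec, modify=True)
```

A call such as `apply_drs_options(cluster, evacuation_option())` would then submit the change. Try it against a lab cluster first and confirm the option appears under the DRS “Advanced Options” tab.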

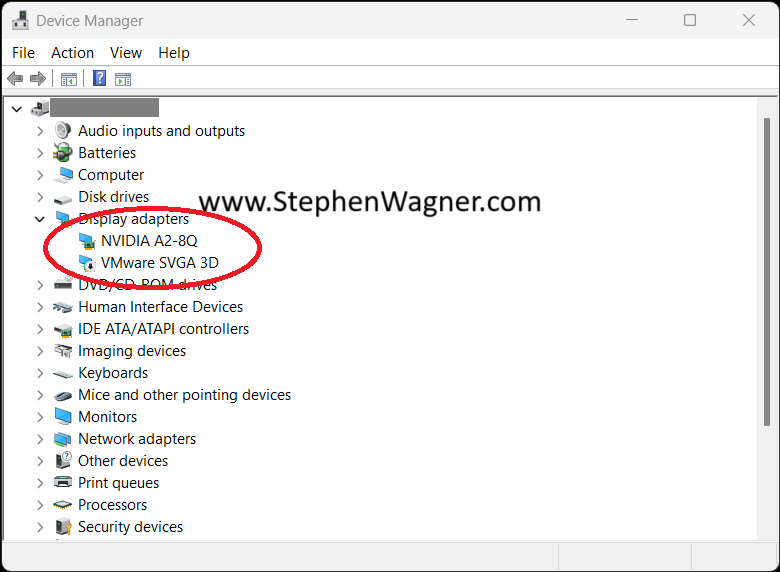

After performing the above, when you place a host with vGPU enabled Virtual Machines into Maintenance Mode, vSphere DRS will evacuate and migrate the VMs to other hosts in the cluster that have the required hardware.

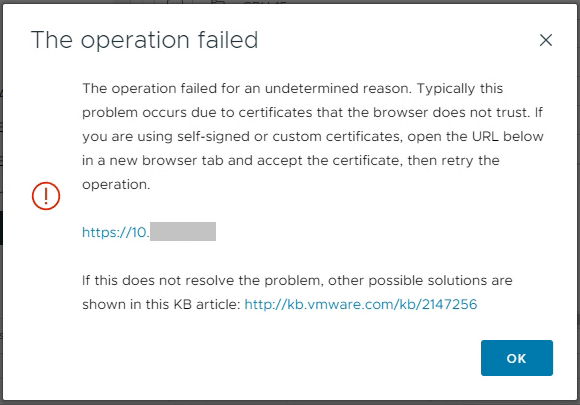

If you attempt to place a host into Maintenance Mode without enabling automatic vMotion of vGPU enabled VMs, it will fail with the error: “DRS failed to generate a vMotion recommendation for a virtual machine on a host entering Maintenance Mode”.

Enable and Configure vGPU STUN Time Estimate and Limits

If you are running vSphere 8.0 U2 or higher, you can enable vGPU STUN time estimation and limits for DRS on the vGPU enabled cluster. Similar to the instructions above, we can add and configure two variables in the vSphere DRS cluster “Advanced Options”.

To enable STUN time estimation, add “PassthroughDrsAutomation” and set it to “1”.

To override the default vMotion STUN time limit of 100 seconds, add “VmDevicesStunTimeTolerated” and set it to your preferred maximum number of seconds. Alternatively, you can set this limit per VM by navigating to the VM in vSphere and adding this variable under the “VM Options”, “Advanced Settings” section.
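
To keep these settings consistent across clusters, it can help to build the two options in one place before entering them. Below is a minimal sketch in Python; the function name `stun_time_options` is hypothetical, and both the choice to store values as strings and the check for a positive number of seconds are assumptions rather than documented requirements.

```python
from typing import Optional


def stun_time_options(max_stun_seconds: Optional[int] = None) -> dict:
    """Build the DRS advanced options for vGPU STUN time estimation.

    If max_stun_seconds is None, the limit option is left out so the
    default limit stays in effect.
    """
    options = {"PassthroughDrsAutomation": "1"}
    if max_stun_seconds is not None:
        # Assumption: a limit only makes sense as a positive number of seconds.
        if max_stun_seconds <= 0:
            raise ValueError("max_stun_seconds must be a positive number of seconds")
        options["VmDevicesStunTimeTolerated"] = str(max_stun_seconds)
    return options
```

For example, `stun_time_options(60)` yields both options with a 60 second limit, while `stun_time_options()` enables estimation only.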