Today we’re going to dive into how to modify or add to the default user registry on Windows. This is the registry hive that is provisioned to new users when they log on to Windows for the first time.

These steps are required to make modifications to this registry, whether to configure the user’s environment or to configure registry settings required by applications installed on the Windows system that need configuration for a seamless user experience.

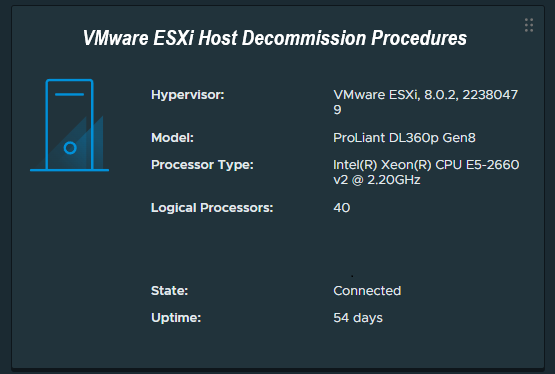

I regularly use this method to modify the default user registry on non-persistent VDI golden images for use with Omnissa Horizon (formerly VMware Horizon). However, it can also be used on traditional (non-VDI) Windows systems and on other VDI platforms such as Citrix, AVD, and more!

Load the Default User Registry Hive

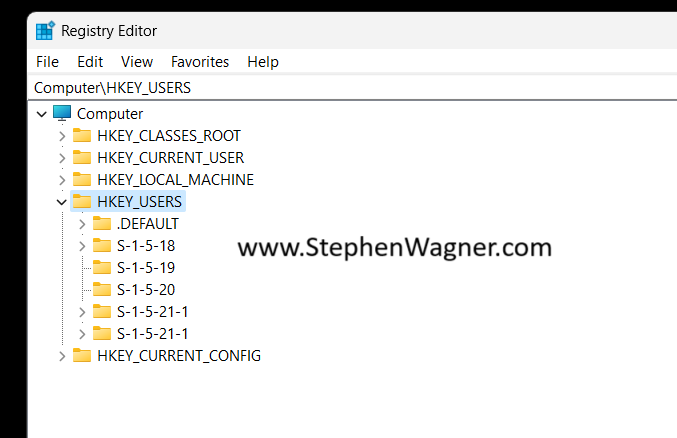

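Let’s go ahead and get started! We’ll need to open “regedit” with administrative credentials (either log on as an admin, or use “Run as administrator”). Then we’ll expand and select “HKEY_USERS”, since “Load Hive” is only available when “HKEY_USERS” or “HKEY_LOCAL_MACHINE” is selected.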

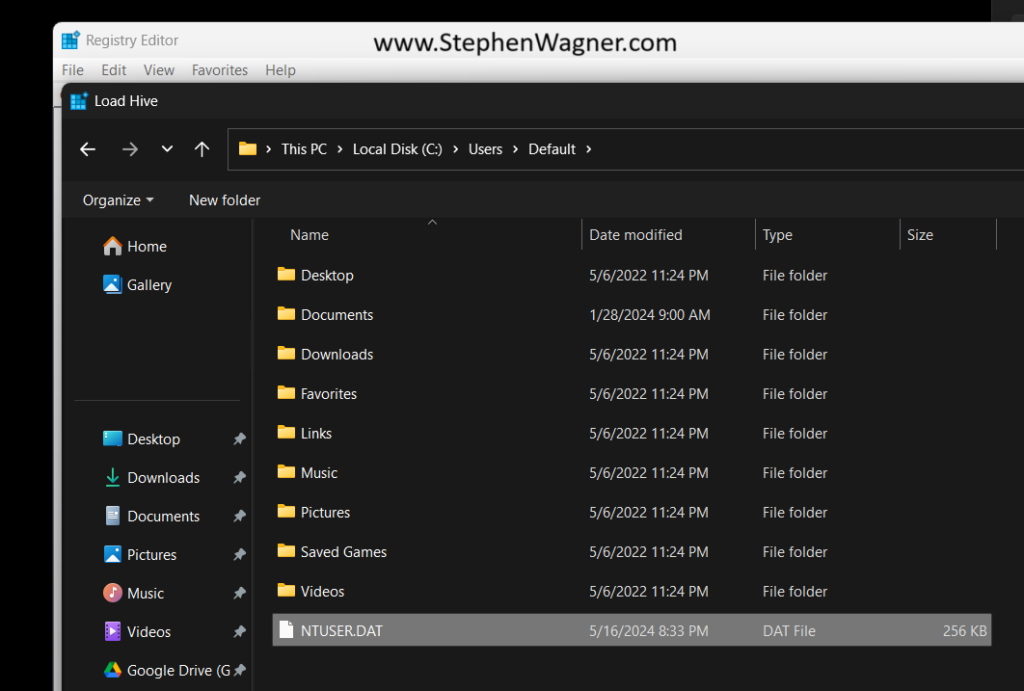

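Next, we’ll go to “File” and then “Load Hive”. This will open a file browser dialog. The “Default” profile folder and the “NTUSER.DAT” file are hidden by default, so it’s easiest to type or paste the full path into the file name box. We’ll navigate to the following file: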

C:\Users\Default\NTUSER.DAT

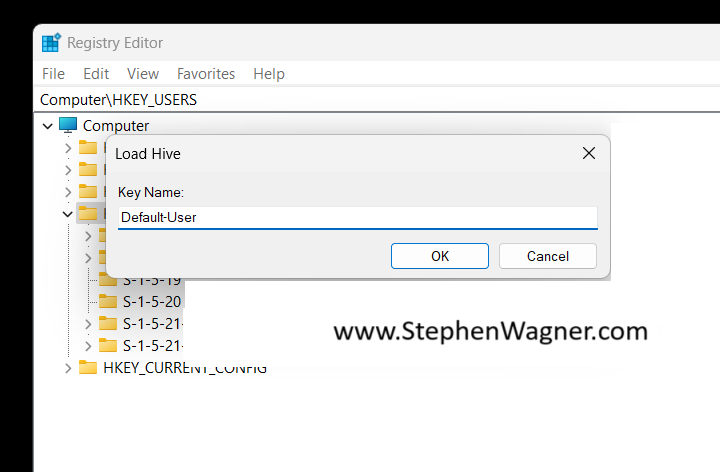

Once we select the “NTUSER.DAT” file, we’ll be prompted to load the hive and give it a key name. You can call it whatever you’d like (as long as it doesn’t conflict with an existing key), but for this example I’ll call it “Default-User”.

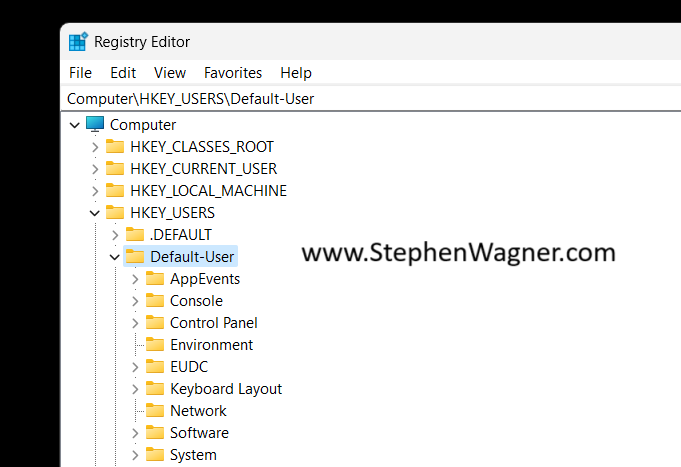

You’ll now notice that the Default User’s “HKEY_CURRENT_USER” is loaded under “HKEY_USERS” with the key name you specified above; in our case, it’s loaded as “Default-User”.
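If you’d rather script this step than click through regedit, the same load can be done with the built-in “reg load” command. Here’s a minimal Python sketch, assuming the “Default-User” key name and the hive path used in this post. The function name is one I made up for illustration, and the script would need to run from an elevated prompt on Windows.

```python
import subprocess
import sys

HIVE_FILE = r"C:\Users\Default\NTUSER.DAT"  # hive file from the step above
KEY_NAME = "Default-User"                   # example key name used in this post


def build_load_command(key_name=KEY_NAME, hive_file=HIVE_FILE):
    """Return the argument list for: reg load HKU\\<key_name> <hive_file>."""
    return ["reg", "load", "HKU\\" + key_name, hive_file]


if __name__ == "__main__":
    command = build_load_command()
    print(" ".join(command))
    # Only execute on Windows; requires administrative rights.
    if sys.platform == "win32":
        subprocess.run(command, check=True)
```

On any other platform the script only prints the command, which makes it easy to review before running it on the golden image.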

You can now make any modifications to the default user’s registry, including importing keys. If you’re using a “.reg” file, make sure you update its key paths to reflect the registry hive location you’ve loaded (for example, “HKEY_USERS\Default-User” instead of “HKEY_CURRENT_USER”).
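Updating a “.reg” file by hand gets tedious when it has many keys, so here’s a rough Python sketch of how the paths could be rewritten automatically. It assumes the file targets “HKEY_CURRENT_USER” and that the hive was loaded as “Default-User”. The function name and the “ExampleApp” key are hypothetical, purely for illustration.

```python
import re


def retarget_reg_text(reg_text, key_name="Default-User"):
    """Point HKEY_CURRENT_USER key paths in .reg text at HKEY_USERS\\<key_name>."""
    target = "HKEY_USERS\\" + key_name
    # Match only key header lines such as [HKEY_CURRENT_USER\Software\...]
    # (or [-HKEY_CURRENT_USER\...] for deletions), never value data.
    pattern = re.compile(r"^(\[-?)HKEY_CURRENT_USER(?=[\\\]])", re.MULTILINE)
    return pattern.sub(lambda m: m.group(1) + target, reg_text)


if __name__ == "__main__":
    # Hypothetical sample content; "ExampleApp" is not a real application.
    sample = (
        "Windows Registry Editor Version 5.00\r\n\r\n"
        "[HKEY_CURRENT_USER\\Software\\ExampleApp]\r\n"
        "\"FirstRun\"=dword:00000000\r\n"
    )
    print(retarget_reg_text(sample))
```

Files exported from regedit are typically saved as UTF-16, so if you read and write real files with this, you’d likely want to open them with that encoding and double-check the result before importing it.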

Unload the Default User Registry Hive

Once you’ve made your modifications to the default user registry hive, new users will be provisioned with this hive the first time they log on.

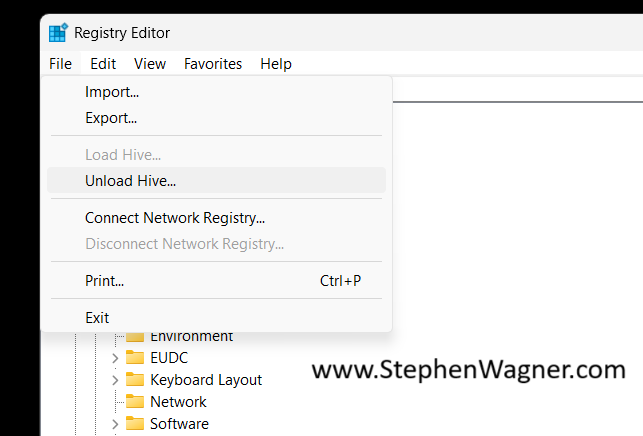

We can now go ahead and unload the registry hive.

We’ll select the “Default-User” key (or whatever you called it), then go to “File” and select “Unload Hive”.

This will properly and gracefully close the default user’s registry hive.
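The unload step can be scripted as well, using the built-in “reg unload” command. As before, this is only a sketch that assumes the “Default-User” key name from this post, with a function name I picked for illustration. It needs an elevated prompt on Windows, and the unload can fail if something still has the hive open.

```python
import subprocess
import sys

KEY_NAME = "Default-User"  # example key name used in this post


def build_unload_command(key_name=KEY_NAME):
    """Return the argument list for: reg unload HKU\\<key_name>."""
    return ["reg", "unload", "HKU\\" + key_name]


if __name__ == "__main__":
    command = build_unload_command()
    print(" ".join(command))
    # Only execute on Windows; requires administrative rights.
    if sys.platform == "win32":
        subprocess.run(command, check=True)
```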