When troubleshooting connectivity issues with your vMotion network (or vMotion VLAN), you may find that pings sent with the ping or vmkping command on your VMware ESXi hosts fail.

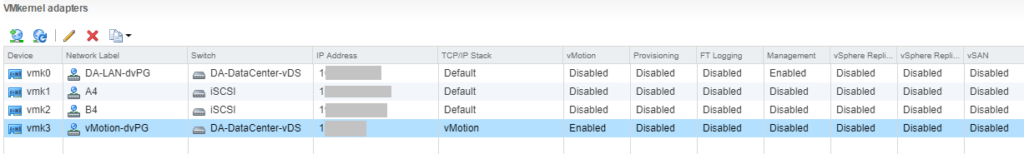

This occurs when you’re using the vMotion TCP/IP stack on the VMkernel (vmk) adapters that are configured for vMotion.

This also applies if you’re using long-distance vMotion (LDVM).

Why

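VMkernel adapters on the vMotion TCP/IP stack belong to a separate network stack instance with its own routing table and default gateway. By default, ping and vmkping send their packets through the default TCP/IP stack, not the vMotion stack, so the vMotion TCP/IP stack requires special syntax for ping and ICMP tests on these vmk adapters.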

The screenshot at the top of this post shows an example where a vmk adapter (vmk3) is configured to use the vMotion TCP/IP stack.

How

To ping and test a vMotion network that uses the vMotion TCP/IP stack, use the following esxcli command:

```
esxcli network diag ping -I vmk1 --netstack=vmotion -H ip.add.re.ss
```

In the command above, replace “vmk1” with the VMkernel adapter you want to send the pings from, and replace “ip.add.re.ss” with the vMotion IP address of the host you want to ping.

Using this method, you can verify ICMP connectivity between the vMotion vmks over the vMotion TCP/IP stack.
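If you need to check several hosts at once, the same command can be wrapped in a small loop. The following is a minimal sketch, assuming you run it from the ESXi Shell or an SSH session on the host. The function name `ping_vmotion_peers`, the `-c 3` ping count and the example IP addresses are hypothetical choices, not part of the original instructions. The function prints the esxcli output for each peer and leaves it to you to read the results; it does not try to decide pass/fail itself.

```shell
#!/bin/sh
# Minimal sketch: ping several vMotion peers over the vMotion TCP/IP stack.
# Hypothetical helper; the function name, vmk name and peer IPs are
# placeholders to adapt to your environment.

# ping_vmotion_peers VMK PEER_IP [PEER_IP ...]
#   For each peer, runs the esxcli ping from VMK on the "vmotion" netstack
#   (3 echo requests per peer) and prints esxcli's output under a header.
ping_vmotion_peers() {
    if [ "$#" -lt 2 ]; then
        echo "usage: ping_vmotion_peers VMK PEER_IP [PEER_IP ...]" >&2
        return 2
    fi
    vmk="$1"
    shift
    for peer in "$@"; do
        echo "=== $vmk -> $peer (netstack: vmotion) ==="
        # Same esxcli command as above; -c limits the number of pings sent.
        esxcli network diag ping -I "$vmk" --netstack=vmotion -H "$peer" -c 3
    done
}
```

For example, `ping_vmotion_peers vmk1 192.168.50.12 192.168.50.13` would ping two hypothetical peer vMotion addresses from vmk1, one after the other.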

Additional information and examples can be found at https://kb.vmware.com/s/article/59590.