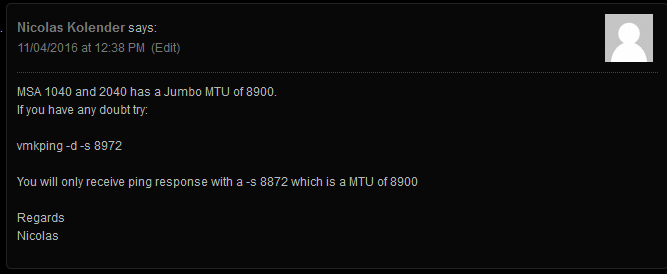

Yesterday, I had a reader (Nicolas) leave a comment on one of my previous blog posts bringing my attention to the MTU for Jumbo Frames on the HPE MSA 2040 SAN.

Since I first started working with the MSA 2040. Looking at numerous HPE documents outlining configuration and best practices, the documents did confirm that the unit supported Jumbo Frames. However, the documentation on the MTU was never clearly stated and can be confusing. I was under the assumption that the unit supported 9000 MTU, while reserving 100 bytes for overhead. This is not necessarily the case.

Nicolas chimed in and provided details on his tests which confirmed the HPE MSA 2040 does actually have a working MTU of 8900. In my configuration I did the tests (that Nicolas outlined), and confirmed that the MTU would cause packet fragmentation if the MTU was greater than 8900.

ESXi vmkping usage: https://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1003728

This is a big discovery because packet fragmentation will not only degrade performance, but flood the links with lots of packet fragmentation.

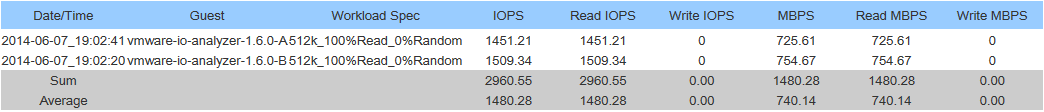

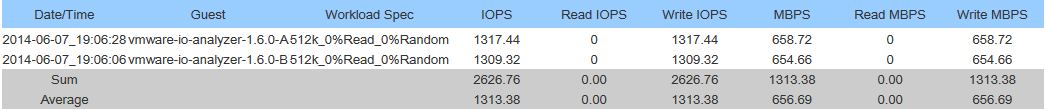

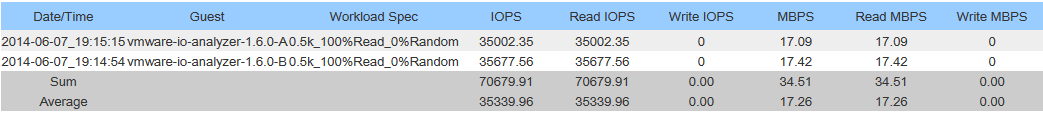

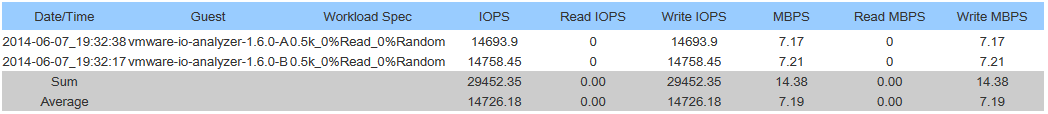

I went ahead and re-configured my ESXi hosts to use an MTU of 8900 on the network used with my SAN. This immediately created a MASSIVE performance increase (both speed, and IOPS). I highly recommend that users of the MSA 2040 SAN confirm this on their own, and update the MTUs as they see fit.

Also, this brings up another consideration. Ideally, on a single network, you want all devices to be running the same MTU. If your MSA 2040 SAN is on a storage network with other SAN devices (or any other device), you may want to configure all of them to use the MTU of 8900 if possible (and of course, don’t forget your servers).

A big thank you to Nicolas for pointing this out!