If you’re like me and use an older Nvidia GRID K1 or K2 vGPU video card for your VDI homelab, you may notice that with VMware Horizon, VMware Blast h264 encoding is no longer offloaded to the GPU and is instead handled by the CPU.

The Problem

Originally, when an environment was configured with an Nvidia GRID K1 or K2 card, the card not only provided 3D acceleration and rendering, but also encoded the VMware Blast h264 stream (the visual session) so that the CPU didn’t have to. This resulted in lower CPU usage and a smoother experience for the user.

This functionality was handled via NVFBC (Nvidia Frame Buffer Capture) which was part of the Nvidia Capture SDK (formerly known as GRID SDK). This function allowed the video card to capture the video frame buffer and encode it using NVENC (Nvidia Encoder).

Ultimately, after spending hours troubleshooting, I learned that NVFBC has been deprecated and is no longer supported, which is why it’s no longer functioning. I also noticed that tools such as nvfbcenable are no longer bundled with the VMware Horizon agent. One can assume that the agent doesn’t even attempt to check for or use this function.

Symptoms

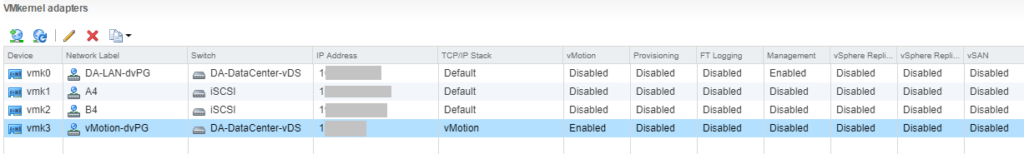

Before I was aware of this, I noticed that while 3D acceleration and graphics were functioning, I was experiencing high CPU usage. Upon further investigation, I noticed that my VMware Blast sessions were not offloading h264 encoding to the video card.

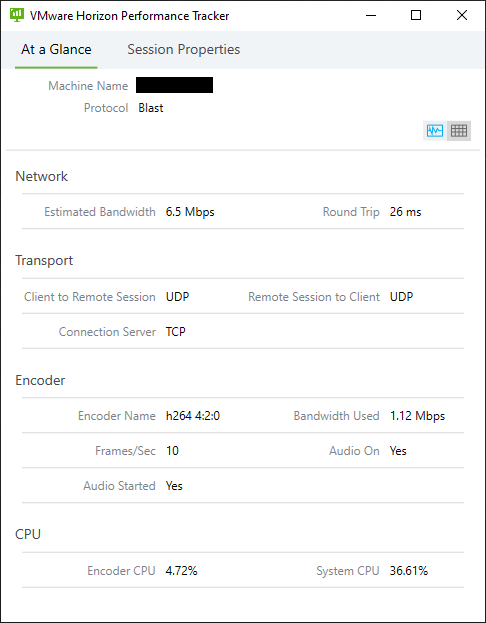

You’ll notice above that under the “Encoder” section, the “Encoder Name” is listed as “h264 4:2:0”. Normally this would say “NVIDIA NvEnc H264” (or “NVIDIA NvEnc HEVC” on newer cards) if encoding were being offloaded to the GPU.

Looking at a VMware Blast session log (Blast-Worker-SessionId1.log), the following lines can be seen.

[INFO ] 0x1f34 bora::Log: NvEnc: VNCEncodeRegionNvEncLoadLibrary: Loaded NVIDIA SDK shared library "nvEncodeAPI64.dll"
[INFO ] 0x1f34 bora::Log: NvEnc: VNCEncodeRegionNvEncLoadLibrary: Loaded NVIDIA SDK shared library "nvml.dll"
[WARN ] 0x1f34 bora::Warning: GetProcAddress: Failed to resolve nvmlDeviceGetEncoderCapacity: 127
[WARN ] 0x1f34 bora::Warning: GetProcAddress: Failed to resolve nvmlDeviceGetProcessUtilization: 127
[WARN ] 0x1f34 bora::Warning: GetProcAddress: Failed to resolve nvmlDeviceGetGridLicensableFeatures: 127
[INFO ] 0x1f34 bora::Log: NvEnc: VNCEncodeRegionNvEncLoadLibrary: Some NVIDIA nvml functions unavailable, unloading
[INFO ] 0x1f34 bora::Log: NvEnc: VNCEncodeRegionNvEncUnloadLibrary: Unloading NVIDIA SDK shared library "nvEncodeAPI64.dll"
[INFO ] 0x1f34 bora::Log: NvEnc: VNCEncodeRegionNvEncUnloadLibrary: Unloading NVIDIA SDK shared library "nvml.dll"
[WARN ] 0x1f34 bora::Warning: GetProcAddress: Failed to resolve nvmlDeviceGetEncoderCapacity: 127
[WARN ] 0x1f34 bora::Warning: GetProcAddress: Failed to resolve nvmlDeviceGetProcessUtilization: 127
[WARN ] 0x1f34 bora::Warning: GetProcAddress: Failed to resolve nvmlDeviceGetGridLicensableFeatures: 127

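You’ll notice that the agent loads the Nvidia libraries (nvEncodeAPI64.dll and nvml.dll), but then fails to resolve three NVML functions: nvmlDeviceGetEncoderCapacity, nvmlDeviceGetProcessUtilization, and nvmlDeviceGetGridLicensableFeatures. Error 127 is the Windows “procedure not found” error, meaning the installed nvml.dll doesn’t export those functions. Since they’re unavailable, the agent unloads both libraries instead of using NvEnc.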
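If you want to check your own sessions for the same symptom, here is a rough Python sketch that scans a Blast worker log for these messages. The function name `scan_blast_log` is my own invention, and the patterns are taken only from the log excerpt in this post, so other agent versions may word things differently. You supply the path to your own log file.

```python
import re
import sys
from pathlib import Path

# Patterns copied from the log excerpt in this post (an assumption:
# other Horizon agent versions may phrase these messages differently).
UNRESOLVED = re.compile(r"GetProcAddress: Failed to resolve (\w+): (\d+)")
UNLOADING = re.compile(r"Some NVIDIA nvml functions unavailable, unloading")


def scan_blast_log(text):
    """Return (missing_functions, unloaded).

    missing_functions: NVML function names that failed to resolve,
    de-duplicated, in the order they first appear.
    unloaded: True if the agent reported unloading the NVIDIA libraries.
    """
    missing = []
    for name, _error_code in UNRESOLVED.findall(text):
        if name not in missing:
            missing.append(name)
    unloaded = UNLOADING.search(text) is not None
    return missing, unloaded


if __name__ == "__main__" and len(sys.argv) > 1:
    # The log path is whatever you pass on the command line.
    log_text = Path(sys.argv[1]).read_text(errors="replace")
    missing, unloaded = scan_blast_log(log_text)
    print("Unresolved NVML functions:", ", ".join(missing) or "none")
    print("NvEnc libraries unloaded:", "yes" if unloaded else "no")
```

Run it with the path to your Blast-Worker-SessionId1.log as the only argument. If it lists unresolved functions and reports that the libraries were unloaded, you’re likely hitting the same issue I did.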

The Solution

Unfortunately the only solution is to upgrade to newer or different hardware.

The GRID K1 and GRID K2 cards have reached their EOL (End of Life) and are no longer supported. The drivers are not being maintained or updated, so I doubt they will ever take advantage of the newer frame buffer capture functions of Windows 10.

Newer hardware and solutions have incorporated this change and use a different means of frame buffer capture.

To resolve this in my own homelab, I plan to migrate to an AMD FirePro S7150x2.