In May of 2023, NVIDIA released the NVIDIA GPU Manager for VMware vCenter. This appliance allows you to manage your NVIDIA vGPU Drivers for your VMware vSphere environment.

Since the release, I’ve had a chance to deploy it, test it, and use it, and want to share my findings.

In this post, I’ll cover the following:

- What is the NVIDIA GPU Manager for VMware vCenter

- How to deploy and configure the NVIDIA GPU Manager for VMware vCenter

- Deployment of OVA

- Configuration of Appliance

- Using the NVIDIA GPU Manager to manage, update, and deploy vGPU drivers to ESXi hosts

Let’s get to it!

What is the NVIDIA GPU Manager for VMware vCenter

The NVIDIA GPU Manager is an (OVA) appliance that you can deploy in your VMware vSphere infrastructure (using vCenter and ESXi) to act as a driver (and update) repository for vLCM (vSphere Lifecycle Manager).

In addition to acting as a repo for vLCM, it also installs a plugin on your vCenter that provides a GUI for browsing, selecting, and downloading NVIDIA vGPU host drivers to the local repo running on the appliance. These drivers can then be deployed to your hosts using vLCM.

In short, this allows you to easily select, download, and deploy specific NVIDIA vGPU drivers to your ESXi hosts using vLCM baselines or images, simplifying the entire process.

Supported vSphere Versions

The NVIDIA GPU Manager supports the following vSphere releases (vCenter and ESXi):

- VMware vSphere 8.0 (and later)

- VMware vSphere 7.0U2 (and later)

The NVIDIA GPU Manager supports vGPU driver releases 15.1 and later, including the new vGPU 16 release version.
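As a quick pre-flight check, the minimums listed above can be written down as a small Python sketch. The function names and the version-string formats (such as `"7.0U2"` or `"15.1"`) are assumptions made for this example and are not part of any NVIDIA or VMware tooling. It checks each minimum independently and does not model NVIDIA's full compatibility matrix.

```python
import re

# Minimums taken from the support list above.
MIN_VSPHERE = (7, 0, 2)  # vSphere 7.0 Update 2
MIN_VGPU = (15, 1)       # vGPU release 15.1


def parse_vsphere(version: str) -> tuple:
    """Parse strings such as '7.0U2', '8.0' or '8.0U1' into (major, minor, update)."""
    match = re.fullmatch(r"(\d+)\.(\d+)(?:\s*U(\d+))?", version.strip(), re.IGNORECASE)
    if not match:
        raise ValueError(f"Unrecognised vSphere version: {version!r}")
    major, minor, update = match.groups()
    return (int(major), int(minor), int(update or 0))


def parse_vgpu(release: str) -> tuple:
    """Parse vGPU release strings such as '15.1' or '16' into (major, minor)."""
    parts = release.strip().split(".")
    major = int(parts[0])
    minor = int(parts[1]) if len(parts) > 1 else 0
    return (major, minor)


def is_supported(vsphere: str, vgpu: str) -> bool:
    """True when both versions meet the minimums stated in this post."""
    return parse_vsphere(vsphere) >= MIN_VSPHERE and parse_vgpu(vgpu) >= MIN_VGPU
```

For example, `is_supported("7.0U2", "15.1")` passes, while a host on 7.0U1 or a 15.0 driver would be flagged before you start the deployment.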

How to deploy and configure the NVIDIA GPU Manager for VMware vCenter

To deploy the NVIDIA GPU Manager Appliance, we have to download an OVA (from NVIDIA’s website), then deploy and configure it.

See below for the step-by-step instructions:

Download the NVIDIA GPU Manager

- Log on to the NVIDIA Application Hub, and navigate to the “NVIDIA Licensing Portal” (https://nvid.nvidia.com).

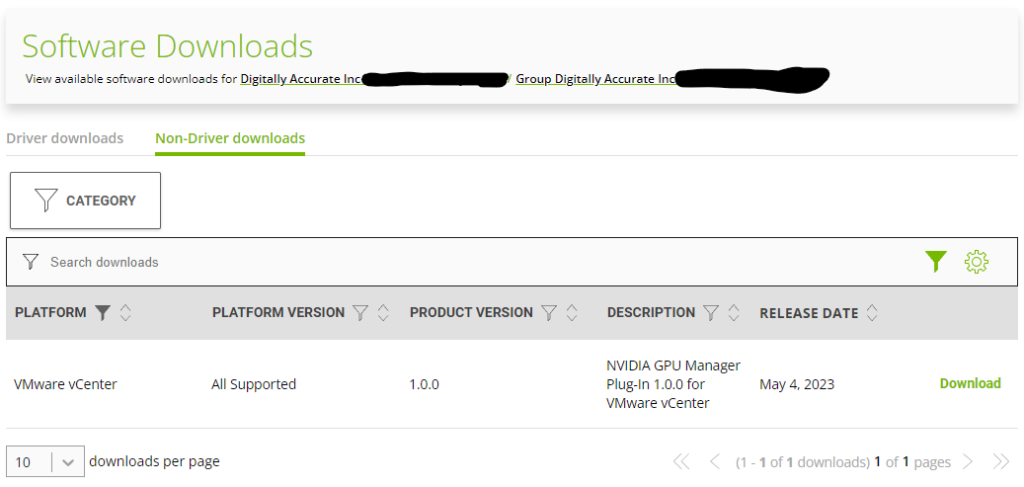

- Navigate to “Software Downloads” and select “Non-Driver Downloads”

- Change the filter to “VMware vCenter” (there is both a VMware vSphere and a VMware vCenter option, so be sure to select the correct one).

- To the right of “NVIDIA GPU Manager Plug-in 1.0.0 for VMware vCenter”, click “Download” (see below screenshot).

After downloading the package and extracting, you should be left with the OVA, along with Release Notes, and the User Guide. I highly recommend reviewing the documentation at your leisure.

Deploy and Configure the NVIDIA GPU Manager

We will now deploy the NVIDIA GPU Manager OVA appliance:

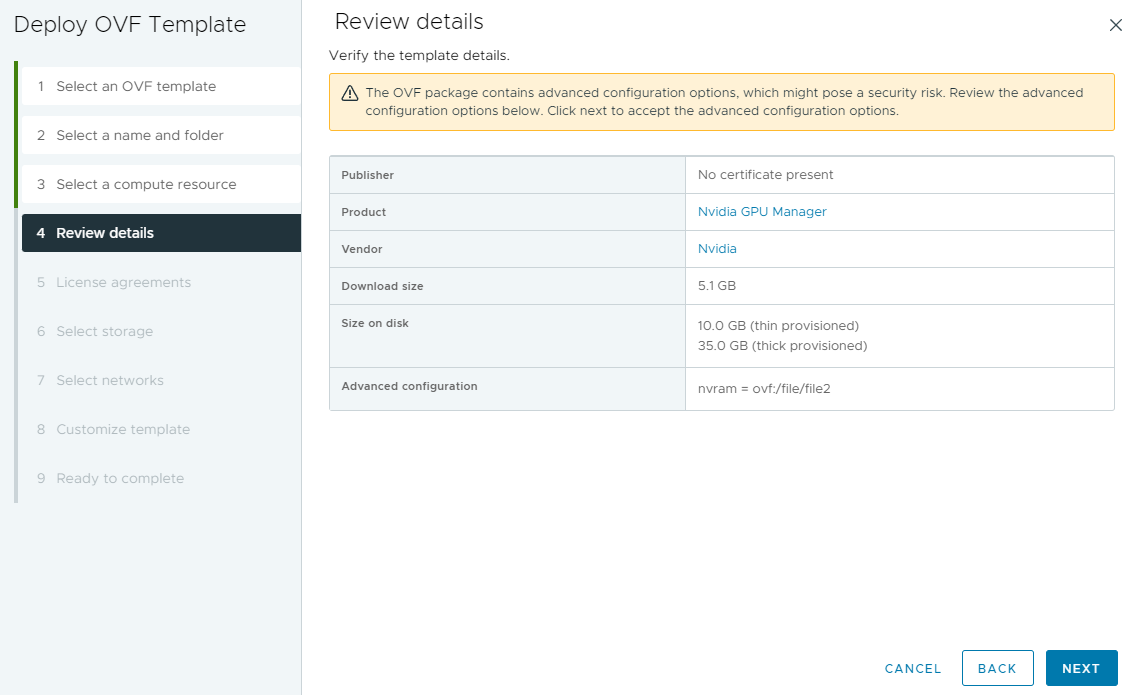

- Deploy the OVA to either a cluster with DRS, or a specific ESXi host. In vCenter, right-click either a cluster or a host, and select “Deploy OVF Template”. Choose the GPU Manager OVA file, and continue with the wizard.

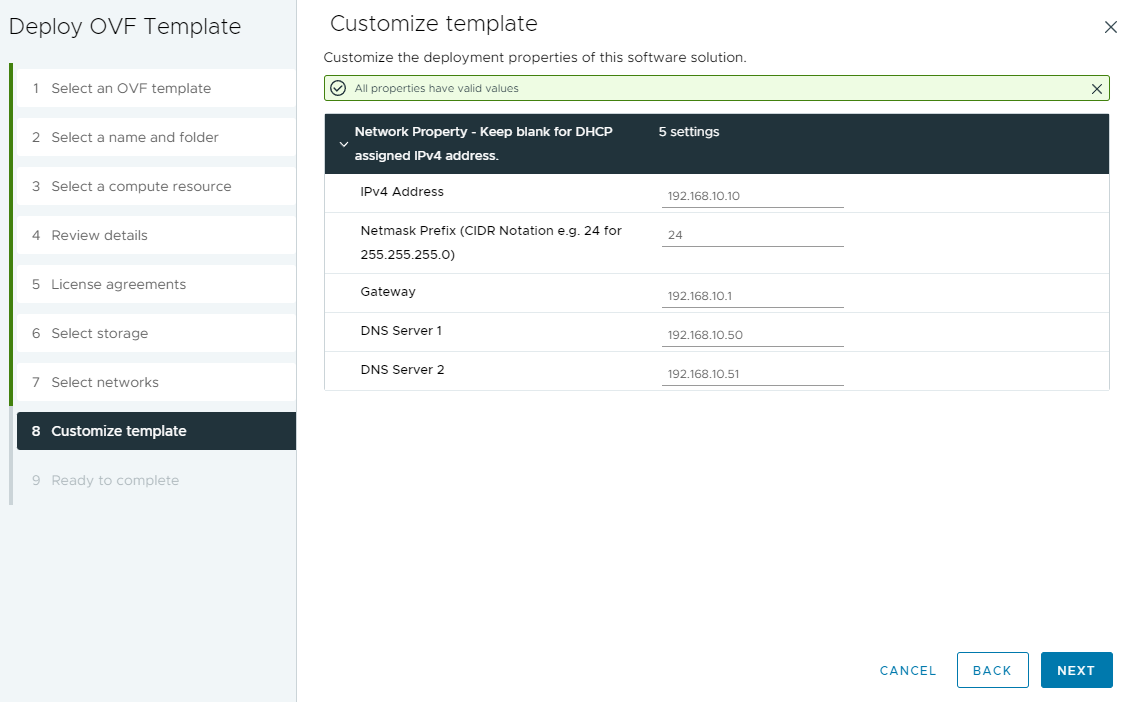

- Configure Networking for the Appliance

- You’ll need to assign an IP Address, and relevant networking information.

- I always recommend creating DNS (forward and reverse entries) for the IP.

- Finally, power on the appliance.
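If you create the forward and reverse DNS entries recommended above, a short Python sketch like the following can confirm they agree before you continue. The hostname and IP in the usage comment are placeholders, and the `forward`/`reverse` parameters are only there so the lookup functions can be swapped out; by default the standard library resolvers are used.

```python
import socket


def check_dns(fqdn, ip, forward=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Report whether the forward (A) and reverse (PTR) records agree for fqdn/ip."""
    result = {"forward_ok": False, "reverse_ok": False}
    try:
        # Forward lookup: the name should resolve to the appliance IP.
        result["forward_ok"] = forward(fqdn) == ip
    except OSError:
        pass  # lookup failed, leave as False
    try:
        # Reverse lookup: the IP should resolve back to the name (or an alias).
        name, aliases, _addresses = reverse(ip)
        candidates = {name.lower(), *(alias.lower() for alias in aliases)}
        result["reverse_ok"] = fqdn.lower() in candidates
    except OSError:
        pass  # lookup failed, leave as False
    return result


# Example usage (placeholder values, replace with your own):
# print(check_dns("gpumanager.example.com", "192.0.2.50"))
```

Run it from a machine that uses the same DNS servers as your vCenter server, since that is the resolver view that matters for registration.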

We must now create a role and service account that the GPU Manager will use to connect to the vCenter server.

While the vCenter Administrator account will work, I highly recommend creating a service account specifically for the GPU Manager that has only the permissions it needs to function.

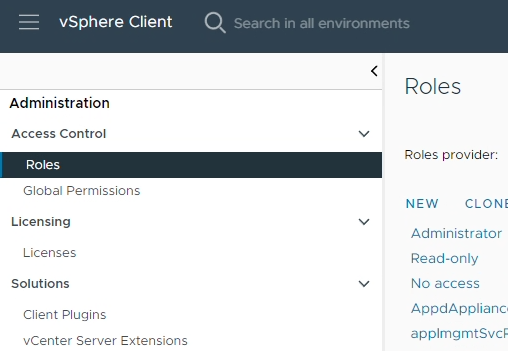

- Log on to your vCenter Server

- Click on the hamburger menu item on the top left, and open “Administration”.

- Under “Access Control” select Roles.

- Select New to create a new role. We can call it “NVIDIA Update Services”.

- Assign the following permissions:

  - Extension Privileges

    - Register Extension

    - Unregister Extension

    - Update Extension

  - VMware vSphere Lifecycle Manager Configuration Privileges

    - Configure Service

  - VMware vSphere Lifecycle Manager Settings Privileges

    - Read

  - Certificate Management Privileges

    - Create/Delete (Admins priv)

    - Create/Delete (below Admins priv)

  - ***PLEASE NOTE: The above permissions were provided in the documentation and did not work for me (resulted in an insufficient privileges error). To resolve this, I chose “Select All” for “VMware vSphere Lifecycle Manager”, which resolved the issue.***

- Save the Role

- On the left hand side, navigate to “Users and Groups” under “Single Sign On”

- Change the domain to your local vSphere SSO domain (vsphere.local by default)

- Create a new user account for the NVIDIA appliance, as an example you could use “nvidia-svc”, and choose a secure password.

- Navigate to “Global Permissions” on the left hand side, and click “Add” to create a new permission.

- Set the domain, and choose the new “nvidia-svc” service account we created, and set the role to “NVIDIA Update Services”, and check “Propagate to Children”.

- You have now configured the service account.
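If you want to keep a record of what the role should contain, the documented privilege list can be captured as data and compared against what you actually granted. This is only a sketch: the labels below are the display names from the list above (not vSphere API privilege IDs), and `missing_privileges` is a hypothetical helper, not something shipped by NVIDIA or VMware. Remember that in my case the documented set was not sufficient on its own.

```python
# Display names as listed in this post; these are NOT vSphere API privilege IDs.
DOCUMENTED_PRIVILEGES = {
    "Extension": {
        "Register Extension",
        "Unregister Extension",
        "Update Extension",
    },
    "VMware vSphere Lifecycle Manager Configuration": {"Configure Service"},
    "VMware vSphere Lifecycle Manager Settings": {"Read"},
    "Certificate Management": {
        "Create/Delete (Admins priv)",
        "Create/Delete (below Admins priv)",
    },
}


def missing_privileges(granted):
    """Return {category: [missing labels]} for documented privileges not yet granted.

    `granted` maps a category name to the set of labels ticked in the role.
    """
    missing = {}
    for category, required in DOCUMENTED_PRIVILEGES.items():
        gap = required - set(granted.get(category, ()))
        if gap:
            missing[category] = sorted(gap)
    return missing
```

An empty result only means the documented list is covered. If registration still fails with an insufficient privileges error, fall back to the “Select All” workaround for “VMware vSphere Lifecycle Manager” described above.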

Now, we will perform the initial configuration of the appliance. To configure the appliance, we must do the following:

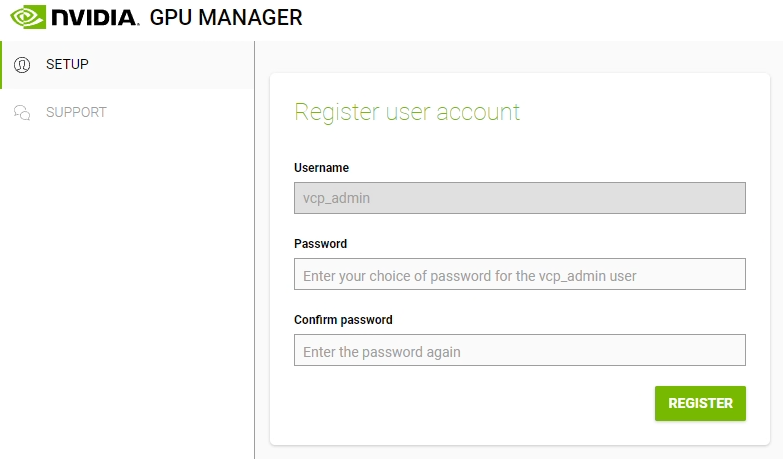

- Access the appliance using your browser and the IP you configured above (or FQDN)

- Create a new password for the administrative “vcp_admin” account. This account will be used to manage the appliance.

- A secret key will be generated that will allow the password to be reset, if required. Save this key somewhere safe.

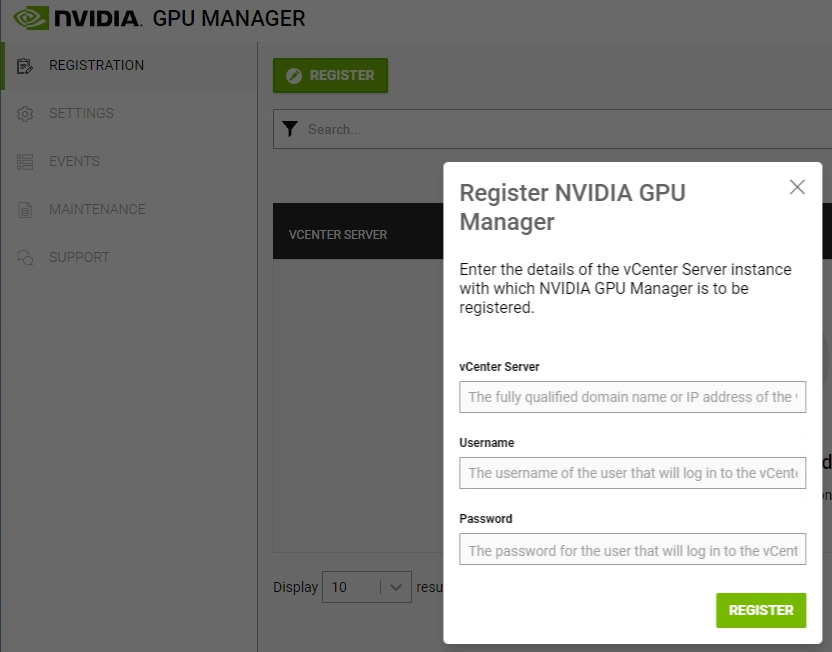

- We must now register the appliance (and plugin) with our vCenter Server. Click on “REGISTER”.

- Enter the FQDN or IP of your vCenter server, the NVIDIA Service account (“nvidia-svc” from example), and password.

- Once the GPU Manager is registered with your vCenter server, the remainder of the configuration will be completed from the vCenter GUI.

- The registration process will install the GPU Manager Plugin into VMware vCenter.

- The registration process will also configure a repository in LCM (this repo is being hosted on the GPU manager appliance).

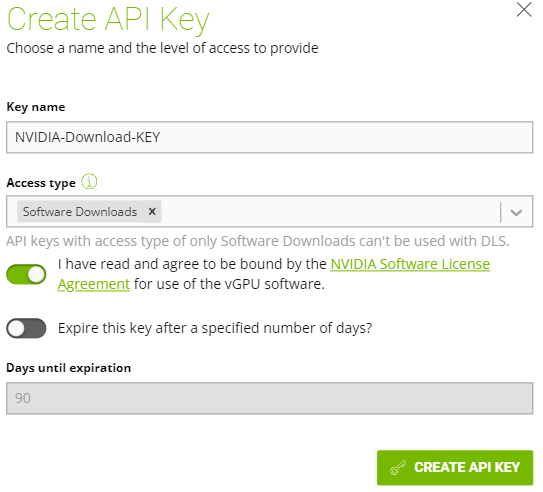

We must now configure an API key on the NVIDIA Licensing portal, to allow your GPU Manager to download updates on your behalf.

- Open your browser and navigate to https://nvid.nvidia.com. Then select “NVIDIA LICENSING PORTAL”. Login using your credentials.

- On the left hand side, select “API Keys”.

- On the upper right hand, select “CREATE API KEY”.

- Give the key a name, and for access type choose “Software Downloads”. I would recommend extending the key validity period, or disabling key expiration.

- The key should now be created.

- Click on “view api key”, and record the key. You’ll need to enter this later into the vCenter GPU Manager plugin.

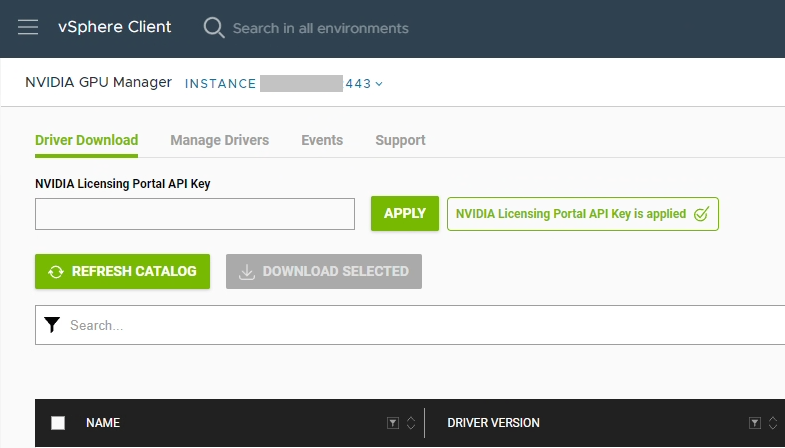

And now we can finally log on to the vCenter interface, and perform the final configuration for the appliance.

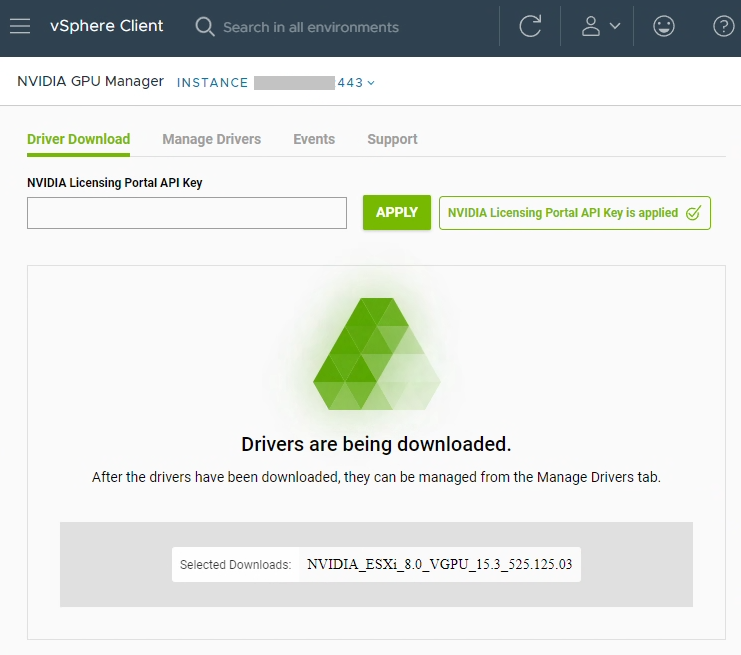

- Log on to the vCenter client, click on the hamburger menu, and select “NVIDIA GPU Manager”.

- Enter the API key you created above into the “NVIDIA Licensing Portal API Key” field, and select “Apply”.

- The appliance should now be fully configured and activated.

- Configuration is complete.

We have now fully deployed and completed the base configuration for the NVIDIA GPU Manager.

Using the NVIDIA GPU Manager to manage, update, and deploy vGPU drivers to ESXi hosts

In this section, I’ll be providing an overview of how to use the NVIDIA GPU Manager to manage, update, and deploy vGPU drivers to ESXi hosts. But first, let’s go over the workflow…

The workflow is a simple one:

- Using the vCenter client plugin, you choose the drivers you want to deploy. These get downloaded to the repo on the GPU Manager appliance, and are made available to Lifecycle Manager.

- You then use Lifecycle Manager to deploy the vGPU Host Drivers to the applicable hosts, using baselines or images.

As you can see, there’s not much to it, despite all the configuration we had to do above. Simple as the workflow is, it makes driver management considerably easier, especially if you’re using images with Lifecycle Manager.

To choose and download the drivers, load up the plugin, use the filters to filter the list, and select your driver to download.

As you can see in the example, I chose to download the vGPU 15.3 host driver. Once completed, it’ll be made available in the repo being hosted on the appliance.

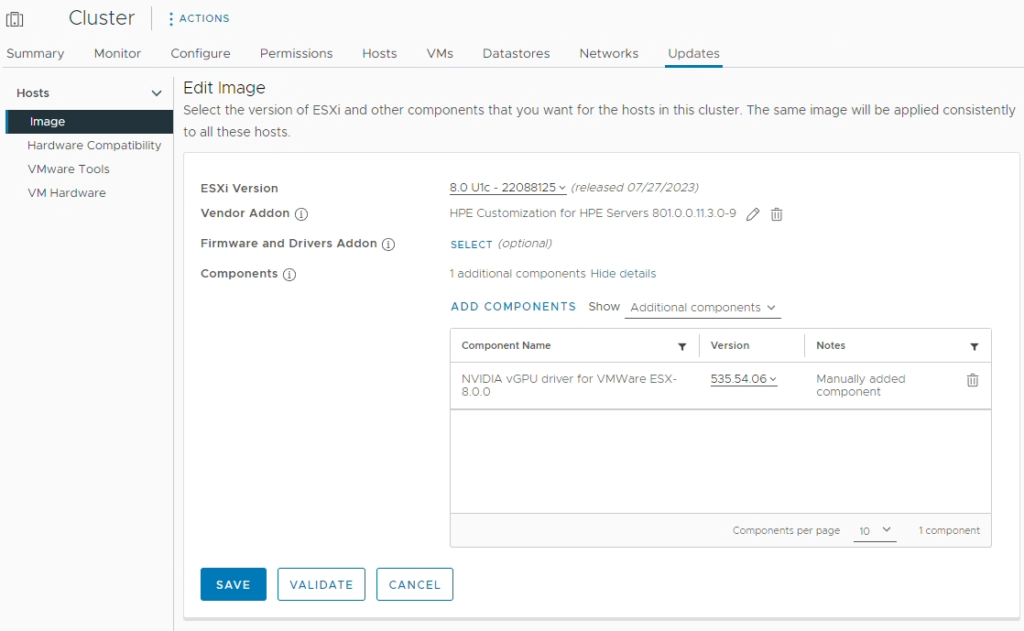

Once LCM has had a chance to sync with the updated repos, the driver is then made available to be deployed. You can then deploy using baselines or host images.

In the example above, I added the vGPU 16 (535.54.06) host driver to my cluster’s update image, which I will then remediate and deploy to all the hosts in that cluster. The vGPU driver was made available from the download using GPU Manager.

Very cool, this is how I expected it to work. I had installed and configured this a few months ago but have yet to update my vCenter hosts due to other projects.

Hello Sir,

Could you please share the ova file in a cloud? I cannot download it

I am immensely grateful for your time and consideration

Hi Mohammadreza,

Unfortunately I can’t provide the file. You’ll need to log on to the NVIDIA licensing portal and download the deployment files.

Cheers,

Stephen

Nice! The problem I have is when I try to deploy the OVA (v1.3), there is no field to input a static IP address, DNS, mask etc. It just states it will use static – ipv4. Since it has no IP, I cannot access the GUI. If I put it on a network it gets a DHCP address, and I can log into the portal and set the password. At this point I can change the IP address to static, but the self-signed cert is using the old IP address. The only option is to upload a 3rd party SSL cert, no way to regenerate the self-signed cert with the new IP. Seems like they put the cart before the horse.

Ah, my bad, deep in the user-guide (section 2.11.4 & 2.11.5) are the following to be run from the CLI web console. They completely omitted Step 8 “Customize template” in the later OVA versions:

Set the static ip:

sudo ./set-static-ip-cli.sh

Reset the self-signed cert:

sudo ./reset-ssl-cert.sh

What network connectivity is required from the GPU Manager to the vCenter and how can we validate?

The vCenter server has to be able to connect to the GPU manager.

vLCM uses the GPU Manager as an update repository, and the plugin uses web services on the GPU manager presented to the vCenter UI.

Is that what you’re asking for? Or something more specific?
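For the validation part, one rough approach is a plain TCP reachability test toward the GPU Manager, run from a machine that sits on the same network path as your vCenter server. The sketch below is in Python and assumes the appliance serves its web interface over HTTPS on port 443, which is my assumption rather than something confirmed here; adjust the port if your environment differs. The hostname in the usage comment is a placeholder.

```python
import socket


def can_connect(host, port=443, timeout=5.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False


# Example usage (placeholder hostname, replace with your appliance FQDN or IP):
# print(can_connect("gpumanager.example.com"))
```

This only proves that the port is reachable through any firewalls in between. It does not validate the certificate or confirm that vLCM can actually sync from the repository.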