So you want to add NVMe storage capability to your HPE ProLiant DL360p Gen8 (or other ProLiant Gen8 server) and don’t know where to start? Well, I was in the same situation until recently. However, after much research and a little bit of spending, I now have 8TB of NVMe storage in my HPE DL360p Gen8 server thanks to the IOCREST IO-PEX40152.

Unsupported, you say? Well, there are some of us who like to live life dangerously, and there are also those of us with really cool homelabs. I like to think I’m the latter.

PLEASE NOTE: This is not a supported configuration. You’re doing this at your own risk. Also, note that consumer/prosumer NVMe SSDs do not have PLP (Power Loss Protection) technology. You should always use supported configurations and enterprise grade NVMe SSDs in production environments.

Update – May 2nd 2021: Make sure you check out my other post where I install the IOCREST IO-PEX40152 in an HPE ML310e Gen8 v2 server for Version 2 of my NVMe Storage Server.

Update – June 21 2022: I’ve received numerous comments, chats, and questions about whether you can boot your server or computer using this method. Please note that this is all dependent on your server/computer, the BIOS/EFI, and capabilities of the system. In my specific scenario, I did not test booting since I was using the NVME drives purely as additional storage.

DISCLAIMER: If you attempt what I did in this post, you are doing it at your own risk. I won’t be held liable for any damages or issues.

NVMe Storage Server – Use Cases

There’s a number of reasons why you’d want to do this. Some of them include:

- Server Storage

- VMware Storage

- VMware vSAN

- Virtualized Storage (SDS as example)

- VDI

- Flash Cache

- Special applications (database, high IO)

Adding NVMe capability

Well, after all that research I mentioned at the beginning of the post, I installed an IOCREST IO-PEX40152 inside of an HPE Proliant DL360p Gen8 to add NVMe capabilities to the server.

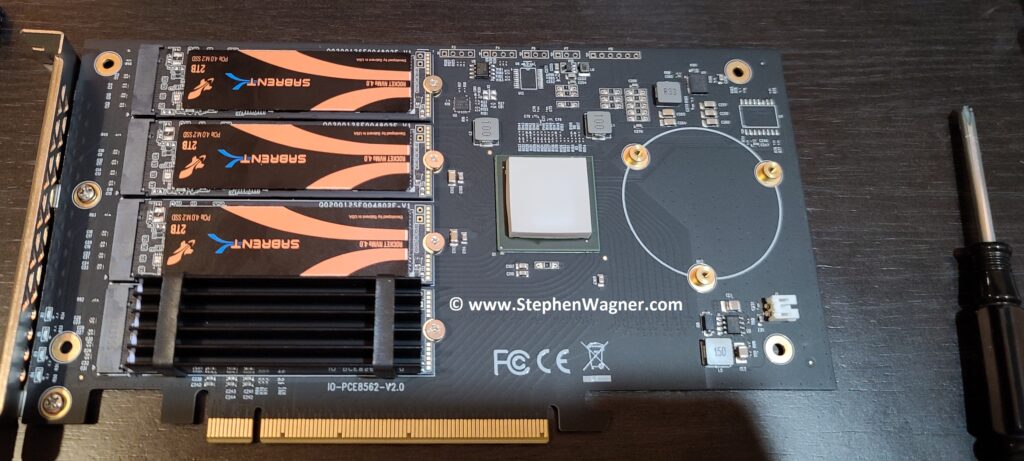

At first I was concerned about dimensions as technically the card did fit, but technically it didn’t. I bought it anyways, along with 4 X 2TB Sabrent Rocket 4 NVMe SSDs.

The end result?

IMPORTANT: Due to the airflow of the server, I highly recommend disconnecting and removing the fan built in to the IO-PEX40152. The DL360p server creates more than enough airflow on its own, and that airflow could force the card’s fan to spin, generate electricity, and damage the card and NVMe SSDs.

Also, do not attempt to install the case cover yet, as additional modification is required (see below).

The Fit

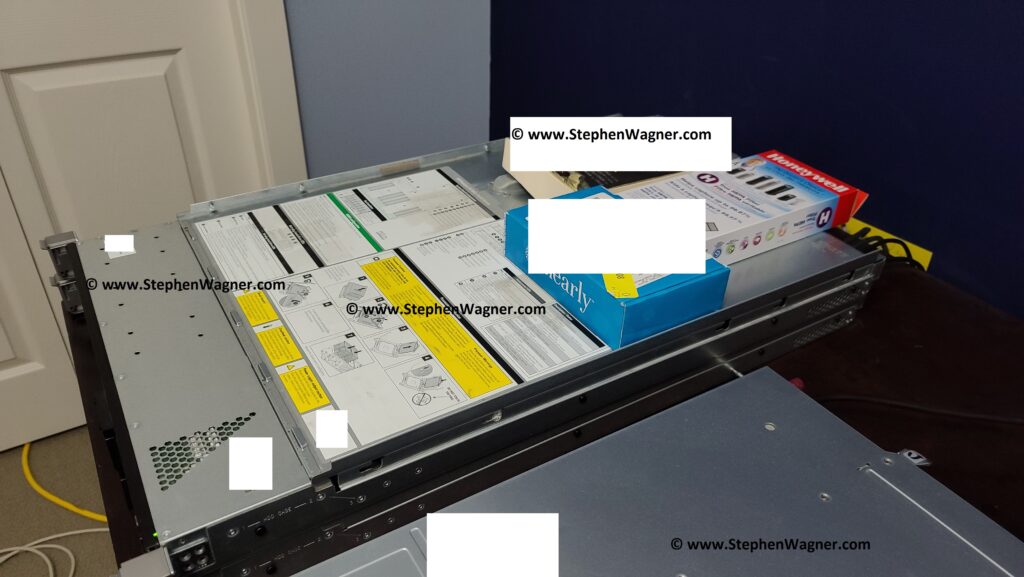

Installing the card inside of the PCIe riser was easy, but snug. The metal heatsink actually comes into contact with the metal on the PCIe riser.

You’ll notice how the card just barely fits inside of the 1U server. Some effort needs to be put in to get it installed properly.

There are ribbon cables (and plastic fittings) directly where the end of the card goes, so you need to gently push these down and push the cables to the side, where there’s a small amount of room available.

We can’t put the case back on… Yet!

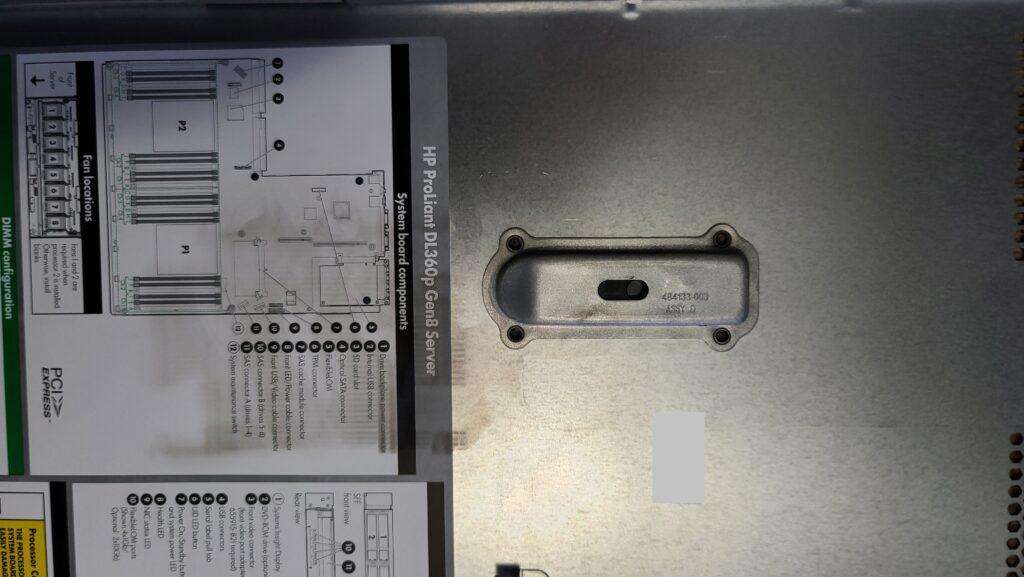

Unfortunately, just when I thought I was in the clear, I realized the case cover of the server cannot be installed. The metal bracket and locking mechanism on the case cover need the space where a portion of the heatsink sits. Attempting to install the cover will cause it to hit the card.

The above photo shows the locking mechanism protruding out of the case cover. This will hit the card (with the IOCREST IO-PEX40152 heatsink installed). If the heatsink is removed, the mechanism might gently touch the card in its unlocked and recessed position, but from my measurements it clears the card when the cover is fully closed and locked.

I had to come up with a temporary fix while I figured out what to do: flip the lid and weigh it down.

For stability and other tests, I simply put the case cover on upside down and weighed it down with weights. Cooling is working great, and even under high load I haven’t seen the SSDs go above 38 Celsius.

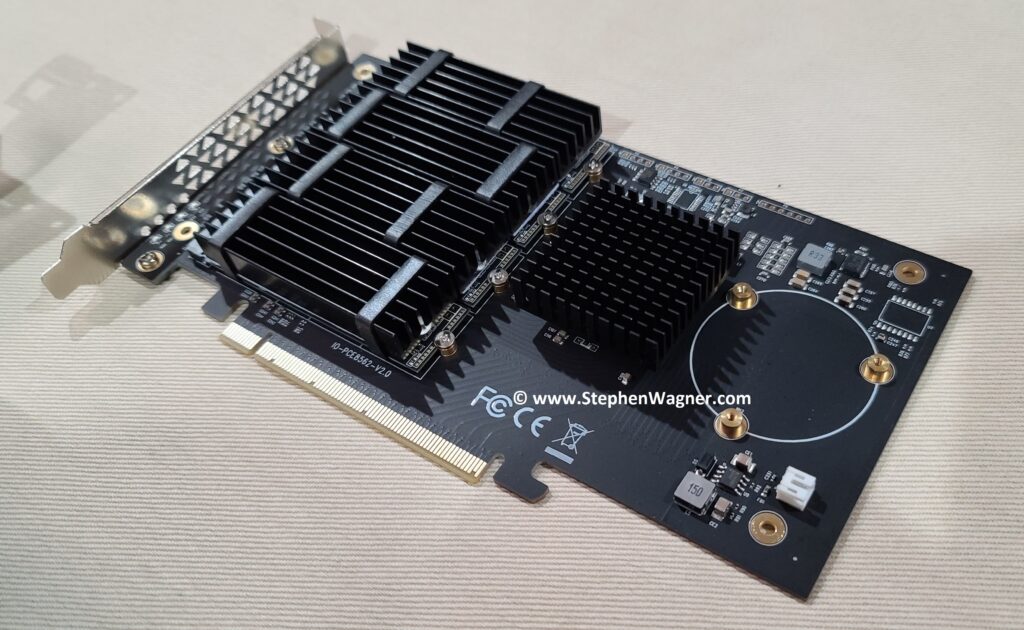

The plan moving forward was to remove the IO-PEX40152 heatsink and install individual heatsinks on the NVMe SSDs as well as the PEX PCIe switch chip. This should clear up enough room for the case cover to be installed properly.

The fix

I went on to Amazon and purchased the following items:

- 4 x GLOTRENDS M.2 NVMe SSD Heatsink for 2280 M.2 SSD

- 1 x BNTECHGO 4 Pcs 40mm x 40mm x 11mm Black Aluminum Heat Sink Cooling Fin

They arrived within days with Amazon Prime. I started to install them.

And now we install the card in the DL360p Gen8 PCIe riser and install the riser into the server.

You’ll notice it’s a nice fit! I had to compress some of the heat conductive goo on the PFX chip heatsink as the heatsink was slightly too high by 1/16th of an inch. After doing this it fit nicely.

Also, note one of the cable/ribbon connectors by the SAS connections. I re-routed one of the cables between the SAS connectors so it could be folded to lie under the card instead of pushing straight up into the end of the card.

As I mentioned above, the locking mechanism on the case cover may come into contact with the bottom of the IOCREST card when it’s in the unlocked and recessed position. With this setup, do not unlock or open the case while the server is running or plugged in, as it may short the board. I have confirmed that when it’s closed and locked, it clears the card. To avoid “accidents” I may come up with a non-conductive cover for the chips it hits (to the left of the fan connector on the card in the image).

And with that, we’ve closed the case on this project…

One interesting thing to note is that the NVMe SSDs are running around 4-6 Celsius cooler post-modification with custom heatsinks than with the stock heatsink. I believe this is due to the awesome airflow achieved in the ProLiant DL360 servers.

Conclusion

I’ve been running this configuration for 6 days now stress-testing and it’s been working great. With the server running VMware ESXi 6.5 U3, I am able to pass through the individual NVMe SSDs to virtual machines. Best of all, installing this card did not cause the fans to spin up, which is often the case when using non-HPE PCIe cards.

This is the perfect mod to add NVME storage to your server, or even try out technology like VMware vSAN. I have a number of cool projects coming up using this that I’m excited to share.

Hello,

This is a great tutorial.

I’m in the same situation: I have installed an NVMe PCIe card and installed ESXi on it, but on reboot I cannot boot from it.

Can you please share with me how you booted from the NVMe PCIe card on the DL360p Gen8?

Best regards,

Diogène.

Hi Diogene,

I don’t boot off my card, but it should be possible. You just need to set the boot priority on the server to boot from whichever NVMe stick you want.

Cheers

Stephen

Awesome!!! Was just looking for a way to add internal ssd storage to my DL380P Gen8 to free up two 3.5-bays in my unRaid server. If this is compatible for unraid cache I am golden. Thank you for sharing. As much as I love my HP servers in the homelab, they don’t like to pair with non hp components.

Hope the post helped!

That’s really great and that’s what I’m looking for. Looking at the first picture, I can’t exactly see if the standard 4 Port Ethernet card is there (what are the black cables?). Are you using a 2 Port 10Gbit card (HP 560SFP) instead? Do I have to remove the 4 Port card, otherwise the IOCREST card may not fit? In the underlined text you’ve mentioned a critical situation which I can’t follow. What exactly is the situation there? What do you mean with “I have confirmed when it’s closed and locked, it clears the card. To avoid accidents I may come up with a non-conductive cover…”

I went through the IOCREST documentation; however, do you know if I can mix sizes (TB) and NVMe manufacturers on the board?

Hi itarch,

In the first image, I have two servers stacked. The black cables are SFP+ DAC cables going to the server below the server that’s being discussed in this post.

As for your 2nd question, due to the size of the card and the heatsink that ships with it, the heatsink gets in the way of the mechanics of the case. With the standard IOCREST heatsink installed, you cannot close the case as it’s in the way of the locking pin. Without the heatsink, the locking pin may come in contact with the board, so I’d recommend powering off the server when opening or closing the case to avoid it rubbing against the card.

The card is simply a PCIe card with a PCIe switch chip. You can use any mix of size and manufacturer you’d like.

Cheers,

Stephen

Hi Stephen,

First, I would like to thank you for the post (and of course for your feedback) and of course wish you Merry Christmas. In my first comment, very focused on the technical stuff, I forgot to appreciate your effort, sorry for that.

Yes, I took a look inside the server (DL360p Gen8) and understand now what you mean (Pic 5, the locking pin). And even after modifications there is still potential danger to scratch the IOcrest board when opening the case…

Now, I’m googling around to find this board and the only option is AliExpress, where I’ve never bought anything before, so I’ll have to get over that now …

Cheers,

Itarch

Hi itarch,

I’m glad if the post helps! Merry Christmas!!!

As for purchasing the card, I also purchased it from Aliexpress. If you use the link in my other post, it goes directly to the IOCREST genuine store. It’s safe, painless, and I received the card very quickly.

Cheers,

Stephen

Hello! I have a problem. Installed NVMe m.2 samsung 970 evo plus via pci adapter. But the server doesn’t see the pci. In iLo4, there is nothing on the pci slot. Do you know how to fix the situation? Don’t scold. Translated by Google.

Hi eres,

The IOCREST card should not be seen inside of iLO. The PCI card simply has a PCIe switch chip. This is normal and expected behaviour.

Cheers,

Stephen

Hello Stephen,

Great article, exactly what I was hoping to find. Did you run any benchmarks on the card that you can share?

Hi Stefanos,

Inside of the post, there’s a few links to other posts discussing the card and NVMe drives. I believe there should be some benchmarks on those posts. In all cases, the limiting factor for me was the server hardware (CPU), lol. I haven’t been able to max out the NVMe drives or the card as of yet.

Cheers,

Stephen

Hi Stephen,

Great article… but you should also describe the basic environment (BIOS version and configuration).

I’m trying to do the same with a single NVMe drive (Samsung 970) and a PCIe adapter (ATLANTIS LAND A06), but I cannot understand how to set the SSD as the boot drive because it doesn’t appear in the BIOS. I’ve already tried every PCIe slot… Any suggestions, please?

Thanks Carette,

On older systems you should expect that you won’t be able to boot off an NVMe drive. You might be able to boot off a single drive, but with this NVMe card you won’t be able to if you’re using software RAID, unless you have a different device to boot from.

Cheers,

Stephen

Hey all and hey Stephen!

Just to add to Stephen’s great write-up: I can confirm the IO-PEX40152 and the IO-PEX40129 both work in DL380p G8 and DL380 G9 machines. I can also confirm the SI-PEX40152 and SI-PEX40129 (Syba branded / US based IO distributor on Amazon) work as well. I can also say that the StarTech.com card does as well. I’ve tested a few others and can confirm Switchtec PFX-L 32xG3 and ASMedia’s ASM2824 chipsets for bifurcation work on these devices, so I would imagine other cards (Asus Hyper, etc) work as long as they have these chipsets (there might be more, but those are the only two I’ve checked personally).

The one thing to be ABSOLUTELY sure of: if the storage does NOT appear in ESXi (or, in my case, when booting to Ubuntu live and running GParted or lsblk in a terminal), don’t sit and try to troubleshoot why it’s not showing up. The cards work great IF the NVMe drives are working. If the slots don’t populate, get a different M.2 / NVMe drive. I went through 2-3 off the shelf Amazon offerings and they didn’t show up, but as soon as I switched to Sabrent or Samsung, the drives started showing. I don’t know if some of the cheaper NVMe drives aren’t recognized, or maybe they are junk (they didn’t even show up on my gaming motherboard in Windows).
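For anyone doing the lsblk check from a live Linux session, here is a minimal sketch that counts how many NVMe drives the OS actually sees. The helper name `count_nvme` is hypothetical, and the sketch assumes the usual Linux device naming (`nvme0n1`, `nvme1n1`, and so on); it only reads lsblk output and changes nothing on the system.

```shell
#!/bin/sh
# Hypothetical helper: count NVMe namespaces in `lsblk -d -n -o NAME,...`
# output read from stdin. Assumes Linux-style names such as nvme0n1.
count_nvme() {
  awk '$1 ~ /^nvme[0-9]+n[0-9]+$/ { n++ } END { print n + 0 }'
}

# Example use on a live system (commented out so the sketch has no side effects):
#   lsblk -d -n -o NAME,SIZE,MODEL | count_nvme
```

If the number printed is lower than the number of M.2 slots you populated, that matches the situation described here, and swapping in a different drive is a cheaper first test than chasing settings.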

I can also confirm on DL380 G9’s that with the latest SPP (2020 or 2021 SPPs) the M.2 NVMe to PCIe 3.0 adapters you find on Amazon work directly. Granted you only get 1 per slot, but if you want to save a few bucks, they will work! One box I have is running 2x of these (your mileage may vary): https://www.amazon.com/gp/product/B07JJTVGZM

Thanks again Stephen for doing a ton of the legwork on this, definitely a great resource!

Hey Jon,

Thanks for taking the time to leave a comment and for that excellent write up on the other cards and alternatives! 🙂 It’s much appreciated and I’m sure it’ll help a ton of other people!

It’s interesting how many times I’ve been contacted about issues with bad NVMe drives. I’m wondering if there’s a big batch of faulty ones, or if these might be drives used for Chia mining that are circulating after being used.

Either way, I’m glad you’re up and running! Feel free to keep us updated with what you’re working on!

Cheers,

Stephen

Hi guys!

So is it confirmed that it’s possible to boot from this PCIe SSD expansion card on an HP DL380 G8?

I have the same server! It looks great. I’m adding a GPU instead, and I just wondered: why not remove the lock from the cover?

Before I start purchasing anything: would it be possible to boot from the PCIe NVMe card on an HPE DL380p Gen8?

Hi Ninja,

I’m not sure. I didn’t test it, but one of the NVME did show up on the boot order list (if I remember correctly). However I’d recommend against booting even if it does work.

If there is a chance to boot from NVMe and you do not recommend booting from it, then what is the point of installing NVMe? And if we can’t use NVMe as a boot drive, then it’s useless to keep the drives just as storage. It’s like a waste of time and money to increase performance for the server. I use an SSD on my server to get better performance, and all backups are stored on HDD.

Hi Shadow,

Typically in servers the OS or hypervisor is loaded on to a small drive, USB key, or flash stick.

The storage (and data) is usually separate and loaded on to high performance media (such as Flash, NVMe, SSD).

This is why we install the NVMe in to servers and don’t necessarily care about booting from it, as it’s usually incorporated in to the design.

Cheers,

Stephen

Hi Stephen Wagner

Your training is very useful. Thank you

I have two questions? 1- Is it possible to replace the heatsink with the following model because there does not seem to be enough space from the top and sides to be able to use all 4 slots

https://www.amazon.com/dp/B07KDDKDNN?th=1&psc=1

2- Is it possible to use RAID in this model and method or not?

Thanks again

Hi Milad,

I’m not sure about what you mean with space, but if you do choose to remove the PCIe Card heatsink, and use your own, you’ll also need to find a way to cool the card.

If you’re installing this in a normal computer, just use the factory PCIe card heatsink and cooling as it works great. I only had to use my own custom solution because of the specific server I was using.

To use RAID, you’ll need to utilize a software RAID solution. The PCIe card only provides passthrough.
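As one illustration of what a software RAID could look like, here is a minimal sketch assuming a Linux system that sees all four drives (for example a VM with the drives passed through) and assuming mdadm is the tool used; neither is something this post tested. The helper name `mdadm_raid10_cmd` and the device names are hypothetical. It only prints the command instead of running it, since creating an array destroys the data on the member drives.

```shell
#!/bin/sh
# Hypothetical dry-run helper: PRINTS (does not run) an mdadm command that
# would create a RAID 10 array on the given member devices.
# $1 = array device, remaining arguments = member devices (assumed names).
mdadm_raid10_cmd() {
  md=$1
  shift
  echo "mdadm --create $md --level=10 --raid-devices=$# $*"
}

# Example: show the command for four NVMe drives without touching them.
mdadm_raid10_cmd /dev/md0 /dev/nvme0n1 /dev/nvme1n1 /dev/nvme2n1 /dev/nvme3n1
```

Review the printed command and confirm the device names with lsblk before running anything by hand.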

Cheers,

Stephen

Hi, I tried to use an HP Z Turbo Drive Quad Pro with two Samsung 1TB 980 PCIe 3.0 x4 M.2 NVMe drives, but I can’t get the OS to recognize the drives. Should something be activated in the BIOS? Or is there any special recommendation?

Hi Ruben,

Sorry but I’m unfamiliar with the HP Z Turbo Drive. I’d recommend checking their documentation as it might have the answers you’re looking for.

Cheers,

Stephen

NVMe NOT showing up after upgrading from ESXi 6.5U2 may be due to consumer drives not supporting NVMe protocol 1.3 (ie. not on the VMware HCL). I personally replaced the NVMe driver in U3 with the one from U2. My procedure was: https://williamlam.com/2019/05/quick-tip-crucial-nvme-ssd-not-recognized-by-esxi-6-7.html#comment-58645

| Drive | NVMe protocol |
| --- | --- |
| Samsung 970 EVO 500GB | 1.3 |
| Seagate 510 1TB | 1.3 |
| Intel 660p 1TB | No |

NB Technically, the 660p supports 1.3 but only appeared in ESXi after 1.2 driver downgrade

Hope this saves someone from any trial & error NVMe buying

Just about to take delivery of a similarly laid out Asus Hyper M.2 4-port V2 card. Very interested to hear that your manual heatsinks + fan removal produced lower NVMe temperatures; I will be doing the same (i.e. not using the included large heatsink or fan). I noticed that one of my NVMe drives (a Seagate Barracuda 510 1TB), when it reached its temperature threshold of 75 deg (after a few minutes of continual use), would cause ESXi to DETACH the storage instead of simply throttling its performance. The remaining 2 NVMe drives behaved correctly.

Monitoring NVMe drive temperature thru the ESXi shell (NB insert your own drive ID)

esxcli storage core device list | grep NVMe

watch -n 10 "esxcli storage core device smart get -d t10.NVMe____Seagate_BarraCuda_510_SSD_ZP1000CM30001_9315006001CF2400"
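Building on the commands above, here is a minimal sketch that pulls just the temperature out of the SMART output and flags when it gets close to a limit. The helper names `drive_temp` and `check_temp` are hypothetical. The sketch assumes the SMART output is the usual table with a `Drive Temperature` row whose first number is the current value, and that awk is available in your shell; check both on your own host before relying on it.

```shell
#!/bin/sh
# Hypothetical helper: read `esxcli storage core device smart get -d <id>`
# output on stdin and print the current "Drive Temperature" value.
# Assumed row layout: Drive Temperature  <value>  <threshold>  <worst>
drive_temp() {
  awk '/^Drive Temperature/ { print $3; exit }'
}

# Hypothetical helper: warn when TEMP is within MARGIN degrees of LIMIT.
# $1 = temperature, $2 = limit, $3 = margin (defaults to 10).
check_temp() {
  temp=$1
  limit=$2
  margin=${3:-10}
  case "$temp" in
    ''|*[!0-9]*) echo "UNKNOWN: no numeric temperature"; return 1 ;;
  esac
  if [ "$temp" -ge $((limit - margin)) ]; then
    echo "WARN: ${temp}C is within ${margin}C of ${limit}C"
  else
    echo "OK: ${temp}C"
  fi
}

# Example use (commented out; insert your own drive ID):
#   t=$(esxcli storage core device smart get -d <your-drive-id> | drive_temp)
#   check_temp "$t" 75
```

The 75 in the example is the threshold mentioned for the Seagate drive; other drives may report a different limit in the Threshold column.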

Hello, I came upon your post after being very disappointed with a similar attempt. I’m running a DL385p Gen8 and installed a StarTech M.2 SATA SSD controller with a Samsung 860EVO chip.

The server is in a “quiet” room so all configuration was done with ILO. I configured the BIOS, it recognized the boot drive and installed OS without any problems. That night I went downstairs and heard the noise. OMG … the fan speeds went from 20% normal to 40% constant. I tried moving the card through all the slots but no change. According to HPE, these M.2 cards are not supported in pre GEN9 systems. There are a number of posts where folks are hacking the power management systems/fan controls – I am fearful of bricking the system with that approach.

Did you not have any fan issues with the card you used? Would you recommend I swap out to that card as it might solve the fan issue?

Hi David,

Thanks for the comment! Technically StarTech cards aren’t supported at all. For a “supported” configuration it needs to be all HPE SKUs that are supported in the Quickspecs.

Thankfully I didn’t have any fan issues. Before the card was installed, my servers were running at 42-52% fan speeds and after installing the IOCREST card the servers continue to run at those fan speeds.

What do you mean by issues? Are the fans jumping to 100%?

Cheers,

Stephen

For sure, anything supported has to be HPE, but support says M.2 is not supported in pre-GEN9. But all manner of people are using 3rd party M.2 SATA in these older machines, like you. My issue is that normal fan speed for me, even with all 8 SAS drives installed as RAID, is 20%. The minute I install this M.2 controller, the fans run at 40% and stay there. In my opinion running a constant 40% is too high, and it certainly is LOUD! I don’t have a problem with buying another card, IOCREST, ASUS, whatever; I was just asking about your experience with the fans.

Hi David,

What HPE is saying with the “support says M.2 not supported in pre-GEN9” just means they didn’t have HPE branded M.2 SSD capabilities on previous generations for certified configurations.

As for the fan speed, these are dynamically controlled and a number of factors play in to the speed. These include: number of PCIe devices, redundancy kits, PSUs, CPU model, RAM configuration, and numerous other factors. 40% is a normal value, and I have customers who have these servers running at much higher speeds, which is also considered normal.

Unfortunately, these servers aren’t designed to be quiet, so if you were running at 20% it was due to the factors above and luck that it was within your acceptable range. Some people are riding so close to the next interval that just adding another 2 DIMMs can cause the fans to increase.

Cheers,

Stephen

Hi Stephen,

Great article by the way.

Wasn’t able to source the exact card so purchased the IOcrest SI-PEX40129 with a Samsung 970 2TB EVO plus NVME.

Server boots, can see the drive but freezes and crashes when trying to access it. ILO logs show a CPU issue? Have you or anyone else come across this?

Many thanks

Jeremy

Hi Jeremy,

Thank you! 🙂

As for your issue, I’m sorry but I haven’t seen that, it’s even more odd that it’s reporting a CPU issue.

Can you post the exact log?

Thank you for your reply Stephen, and you are correct on all counts. Had fun taking my wife to a data centre colocation with hundreds of servers with their fans up high. What a noise (and heat!). I concede. I was just concerned that the system considered this new card an alien and reacted aggressively. I was hoping someone would have tried another card and had better luck with fan speed in a GEN8. But hey, I should be happy with a solution that works (at a reasonable price). Thank you again.

Hi Stephen,

Operating System failure (Windows bug check, STOP: 0x00000080 (0x00000000004F4454, 0x0000000000000000, 0x0000000000000000, 0x0000000000000000))

Operating System failure (Windows bug check, STOP: 0x00000080 (0x00000000004F4454, 0x0000000000000000, 0x0000000000000000, 0x0000000000000000))

Uncorrectable PCI Express Error (Slot 2, Bus 0, Device 3, Function 0, Error status 0x00000000)

Unrecoverable System Error (NMI) has occurred. System Firmware will log additional details in a separate IML entry if possible

Uncorrectable Machine Check Exception (Board 0, Processor 2, APIC ID 0x00000021, Bank 0x00000003, Status 0xBE000000’00800400, Address 0x00000000’0000E1BB, Misc 0x00000000’00000000)

Uncorrectable Machine Check Exception (Board 0, Processor 2, APIC ID 0x00000020, Bank 0x00000003, Status 0xBE000000’00800400, Address 0x00000000’0000E1BB, Misc 0x00000000’00000000)

Uncorrectable Machine Check Exception (Board 0, Processor 1, APIC ID 0x0000000B, Bank 0x00000003, Status 0xBE000000’00800400, Address 0x00000000’0000E1BB, Misc 0x00000000’00000000)

Uncorrectable Machine Check Exception (Board 0, Processor 1, APIC ID 0x0000000A, Bank 0x00000003, Status 0xBE000000’00800400, Address 0x00000000’0000E1BB, Misc 0x00000000’00000000)

Unrecoverable System Error (NMI) has occurred. System Firmware will log additional details in a separate IML entry if possible

What firmware is your server on?

Many thanks

Jeremy

Hi Jeremy,

I’m wondering if the PCIe card or one of the NVME might be faulty. Have you tried other cards or other configurations?

My firmware is on the latest version (the Spectre/Meltdown release version).

Hello. I want to know what read and write speeds you get. Do you reach the real speed of the M.2 drives, like 3000 MB/s, or less? Thanks.

Hi Hamed,

You can find benchmark numbers here: https://www.stephenwagner.com/2021/05/01/nvme-storage-server-project/.

Cheers,

Stephen

Great write up, thanks for sharing.

I have two DL360 Gen9 with each 4x 3.5″ drive bays.

I am looking for a way to have these two machines boot from other media than the normal HDD.

I have PCIe card with quad msata (ssd) cards but am unable to boot from them.

Installing an OS, like FreeBSD or Linux is no issue, that works like a charm but then it fails.

Does your installation support booting from these NVMe cards?

does it boot using BIOS or UEFI?

Can you advise on the Gen8 vs. the Gen9, since I own a Gen9…

tnx in advance

Hi Fred,

Being able to boot off peripheral cards is based off the host system and the peripheral.

I’ve heard of issues with msata cards, but you may also experience difficulty with NVME cards as well depending on how the host handles it.

The Gen9 is newer, and most likely able to offer better compatibility than the older Gen8.

Cheers,

Stephen

Thank you for your response.

i am quite willing to buy a different add-on card with other boot media, to make sure the machine boots from this other media, like nvme.

Does your machine boot from nvme?

I’m not sure. It shows up as a boot option, but I don’t boot from NVME.

I use it for high performance storage, so I wouldn’t use it for booting, etc…

showing up as boot option is a plus. 🙂

i am thinking of creating a USB boot-only device, that simply points to a root filesystem on another device…

Keep you posted

Hi Fred,

That is another option that would work great with no issues!

Cheers,

Stephen

This came up when I was looking to install an NVMe to my 385pg7 and helped with the install and selection of hardware.

I cannot get this card to “Passthru” with ESXi 6.7. It only seems to work with 6.5. Do you have any tips? I used the Gen9 image of 6.7 to upgrade and everything went great until I tried to use the card.

Where are you getting held up?

When you go to perform the passthrough, do the NVME and PCIe switch appear in the list of PCIe devices?

Does ESXi show the disks inside of its hardware list before passthrough is enabled?

I turn on Passthru and after rebooting, the 4 NVMe devices go back to DISABLED. No matter how many times I do this, the NVMe devices go back to DISABLED after rebooting.

Thanks for your trick.

I found that the “AORUS RAID SSD 2TB” looks like the IOCREST, but with a PCIe x8 interface. So it can be plugged into a PCIe x8 port instead of x16 without the case cover problem.

But I’m worried about its placement, because it’s larger than the IOCREST. It’s 265.25 mm x 120.04 mm.

Hi Simon,

The PCIe slot length didn’t affect the issue of closing the case; this was due to the physical size of the card as well as the heatsink.

The IOCREST card barely fit in the server (millimeters to spare), so if you have a larger card, it is unlikely to fit.

Cheers,

Stephen

How did you set PCIe bifurcation? Only the first SSD works for me.

Hi CronO89, the card does not use/require bifurcation as it has a switch chip. The DL360p Gen8 doesn’t support bifurcation, which is why I chose that specific NVME PCIe card. You shouldn’t need bifurcation.

Hi,

Did anyone manage to boot from NVMe PCIe on a Gen8 HP DL380p?

Many thanks!

I have a DL380p G8, so the 2U rack version of your DL360 🙂 I intend to test this PCIe NVMe SSD card: the WD Black AN1500 Gen3 1TB (HHHL version), because it has spectacular parameters: 648K IOPS in 4K read, 289K IOPS in 4K write, and transfers of 6.5 GBps read / 4.1 GBps write. But it has no official power loss protection (PLP), and there is no word about driver support for Windows Server versions, only Windows 8.1 and Windows 10.

Can you comment on this solution?
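As a rough sanity check on the quoted figures (back-of-envelope arithmetic, not a benchmark), 4K IOPS can be converted to throughput by multiplying by the block size. The helper name `iops_to_mbps` is hypothetical, and the sketch assumes "4K" means 4096 bytes and that throughput is counted in decimal megabytes.

```shell
#!/bin/sh
# Hypothetical helper: convert an IOPS figure at a given block size (bytes)
# into approximate throughput in decimal MB/s (integer division, rounds down).
# $1 = IOPS, $2 = block size in bytes (defaults to 4096).
iops_to_mbps() {
  iops=$1
  block_bytes=${2:-4096}
  echo $(( iops * block_bytes / 1000000 ))
}

iops_to_mbps 648000   # quoted 4K read IOPS
iops_to_mbps 289000   # quoted 4K write IOPS
```

Under those assumptions the 4K figures work out to about 2654 MB/s read and 1183 MB/s write, well below the quoted 6.5 / 4.1 GBps transfer rates. That gap would be consistent with the larger numbers being sequential transfer rates, though the quoted specs do not say so explicitly.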

I can’t comment on the drive, however if you use the IOCrest card, make sure the dimensions of the card fit in your server.

@stephen : thanks for your article and for sharing your experience, it helps a lot.

Hi David, I am also using a DL385p. I connected the ASUS Hyper M.2 controller in the PCIe riser and added 2 Samsung PM983 960GB SSDs (M key).

But I am unable to get those ssd drives detected in the Storage (F8) menu to create a logical drive.

Not sure on the ideal BIOS settings (A38 version 2018) for detecting the PCI and M2 cards. Any guidance on bios and photo will help.

thanks

Hi I have a HPE ProLiant ML310e Generation 8 (Gen8) v2 does the bios have to support Bifurcation for this to work? also have you tried proxmox. I had problems running esxi, proxmox has been rock solid got gtx 1650 passthrough to windows 11 vm working great. esxi was always a pain with gpu passthrough worked with older gpus but could never get it working with newer ones.

Hey there, bifurcation is not needed for this card.

Hello, sorry, I am a newcomer to the server world. I ran into a problem when I installed the NVMe M.2 PCIe card populated with 4 NVMe drives. When I booted into the system (I happen to use Proxmox), 3 of the NVMe drives were not detected. The server I have is an HP 360p Gen9.

How do I get all 4 NVMe drives detected? Can you help?

Hi Joseph,

Are you sure you purchased the correct card? It sounds like you may be using a different one than mentioned in the post. If you have a card that requires bifurcation, this behavior can occur.

Hi, do you think a u.2 ssd will fit in a pci to u.2 card ? or will it be too thick ?

ie one of these adapters U.2 SSD to PCIe X8 Adapter PCIE 4.0 to Dual 2x U.2 SFF-8639 Disk Riser Card

and a u.2 disk, they are thick as a normal 2.5 hdd,

Hi Kristoffer,

Unfortunately I don’t know. I can’t test because I don’t have that hardware.

Hi, great write up. I have a DL360p Gen8 and running into an issue. I only want the drives as storage, not boot. However, with the card inserted the PC will not boot, showing a “non system-disk” error. Note that I do have the P420i in HBA mode. BIOS settings are pointing to the correct boot device.

So, I tested leaving the card in with no NVMe drives plugged in, and it boots with no issue. But as soon as drives go in… no system disk error. Since this card does accept hotswap, you can leave the drives out, boot, and then put the drives in, but as you can imagine this isn’t ideal haha. But it works.

I also tried the clover route, but that doesn’t work due to onboard graphics not supported (done6 error).

The PCIe card shows in BIOS, and is not first in boot order, but maybe I’m missing something else? Unfortunately, I could not find the HP SPP Gen8 download either.

Thanks for any help you can provide, really trying to avoid making these servers ewaste. (I have 7 total)

Hi John,

I’m not sure why this is happening. I’d recommend checking your RAID controller and your boot order in RBSU.

I use my card only for storage (not booting), and I’ve never had these issues.

Hi, thank you for the quick reply, appreciate it. This is another great article, which may have a way around it by creating boot media through an SD card. (How I got the controller in HBA mode).

https://www.babaei.net/blog/disable-hp-proliant-ml350p-gen8-p420i-raid-controller-enable-hba-mode-pass-through-perform-freebsd-root-zfs-installation/#installing-freebsd

I’ll let you know if this works. Thanks!

Thanks for the great writeup and prompt responses to comments Stephen. I am planning to do basically the same thing but use a DL360 G10 server and a HighPoint SSD7104 RAID with 4 NVMe sticks, and set it up in RAID 10 (stripe of two mirrors) for extra resilience as it will be VMFS storage.

I wanted to ask you, or anyone else reading, a few questions:

1 – Are the G10 series HPE servers more friendly to these PCIe cards, and are we sure they won’t throw any errors at startup or max out the fans?

2 – Is there any way to access the BIOS on these cards via iLO at startup? Which HighPoint RAID card supports this?

3 – Will this card fit in the full PCIe Slot and not be too tall or long (8.27″ (W) x 4.41″ (H) x 0.55″ (D))

Thank you.

Hi Steve,

To answer your questions,

1) If you’re using unsupported hardware, you’ll never know if it’ll work or not. However, I think the chances of your config working are higher than not. As for the fans, adding anything to the configuration of a server increases the power/thermal profile, which means it could result in increased fan speeds. In most cases, unsupported hardware will result in the fans increasing.

2) Any PCIe device that has its own BIOS should be accessible during boot. You simply have to wait for the prompt. There may be something inside of the HPE RBSU “Allow PCIe ROM (on boot)” which you’ll need enabled.

3) I’m not sure, you’ll need to find out how much room you have inside of the server to determine if you’ll be able to install the card.

Cheers,

Stephen

Thanks Stephen,

After a lot more research I found that none of these PCIe NVMe cards have OPTION ROMs, so there is no ability to access them for configuration during server POST.

HighPoint tech support advised that they do offer the 7500 series AIC RAID controllers with OPTION ROM, and some are made specifically for HP or Dell servers, but even bare they cost twice as much as a refurbished G10 server fully loaded 🙂

I actually found in the server options documentation that some HP original NVMe SSD PCIe cards work and some models can be had refurbished for anywhere from $200-$500, so that is looking like the path.

Even though there is no RAID function, these are enterprise TLC SSDs made for endurance, they will be seen by iLO, and one would have diagnostics and remote alerting. They are also VMware certified and even support booting, so they are great for anyone needing that, natively supported. Here is the full list from HPE docs:

“PCIe Workload accelerator options”

HPE 6.4TB NVMe Gen4 x8 High performance MU AIC Half-Height Half-Length (HHHL) PM1735 SSD – P26938-B21

HPE 6.4TB NVMe x8 Lanes MU HHHL 3-year warranty DS firmware card – P10268-B21

HPE 3.2TB NVMe Gen4 x8 High performance MU AIC HHHL PM1735 SSD – P26936-B21

HPE 3.2TB NVMe x8 Lanes MU HHHL 3-year warranty DS firmware card – P10266-B21

HPE 1.6TB NVMe Gen4 x8 High performance MU AIC HHHL PM1735 SSD – P26934-B21

HPE 1.6TB NVMe x8 Lanes MU HHHL 3-year warranty DS firmware Card – P10264-B21

HPE 750GB NVMe Gen3 x4 High performance low latency WI AIC HHHL P4800X SSD – 878038-B21

If you have the budget, always best to go “supported” 🙂 Everything just works…