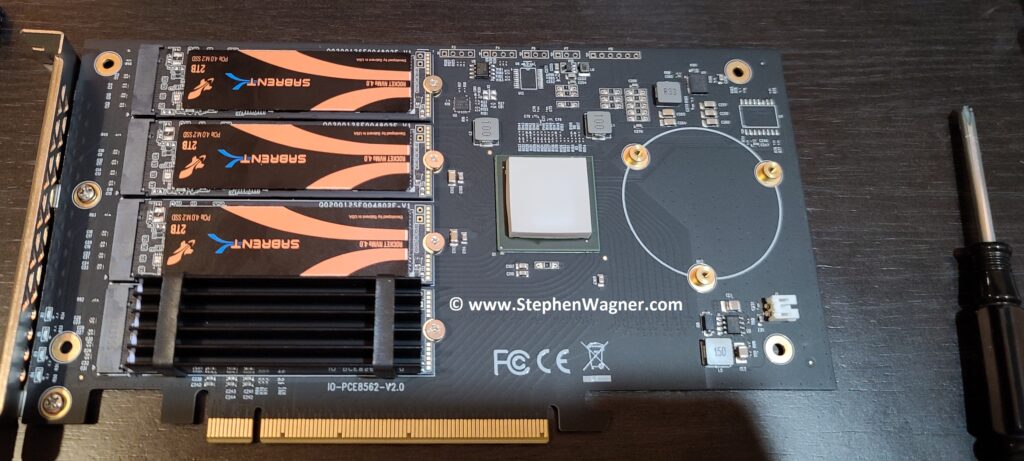

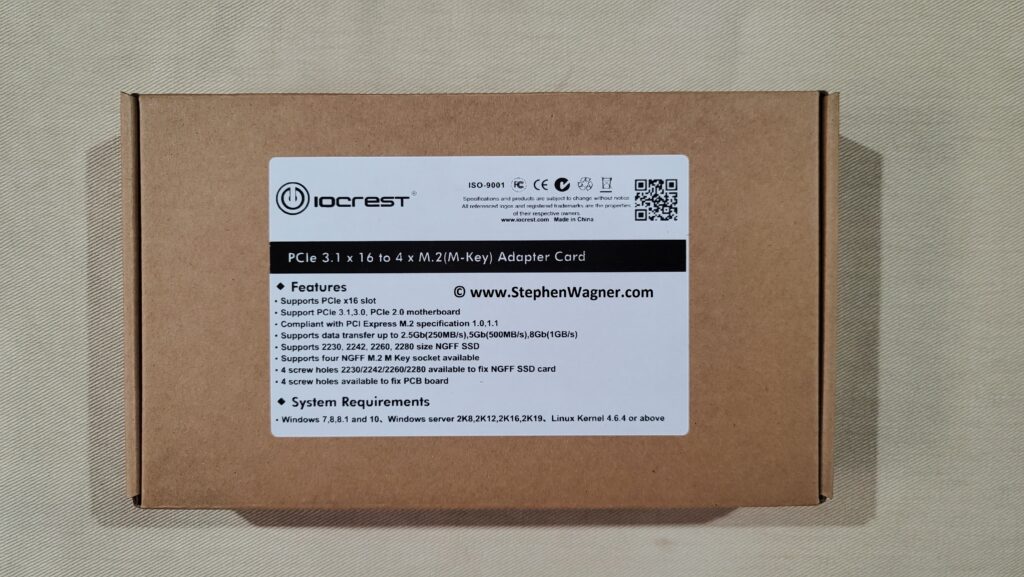

So you want to add NVMe storage capability to your HPE Proliant DL360p Gen8 (or other Proliant Gen8 server) and don’t know where to start? Well, I was in the same situation until recently. However, after much research, a little bit of spending, I now have 8TB of NVMe storage in my HPE DL360p Gen8 Server thanks to the IOCREST IO-PEX40152.

Unsupported you say? Well, there are some of us who like to live life dangerously, there is also those of us with really cool homelabs. I like to think I’m the latter.

PLEASE NOTE: This is not a supported configuration. You’re doing this at your own risk. Also, note that consumer/prosumer NVME SSDs do not have PLP (Power Loss Prevention) technology. You should always use supported configurations and enterprise grade NVME SSDs in production environments.

Update – May 2nd 2021: Make sure you check out my other post where I install the IOCREST IO-PEX40152 in an HPE ML310e Gen8 v2 server for Version 2 of my NVMe Storage Server.

Update – June 21 2022: I’ve received numerous comments, chats, and questions about whether you can boot your server or computer using this method. Please note that this is all dependent on your server/computer, the BIOS/EFI, and capabilities of the system. In my specific scenario, I did not test booting since I was using the NVME drives purely as additional storage.

DISCLAIMER: If you attempt what I did in this post, you are doing it at your own risk. I won’t be held liable for any damages or issues.

NVMe Storage Server – Use Cases

There’s a number of reasons why you’d want to do this. Some of them include:

- Server Storage

- VMware Storage

- VMware vSAN

- Virtualized Storage (SDS as example)

- VDI

- Flash Cache

- Special applications (database, high IO)

Adding NVMe capability

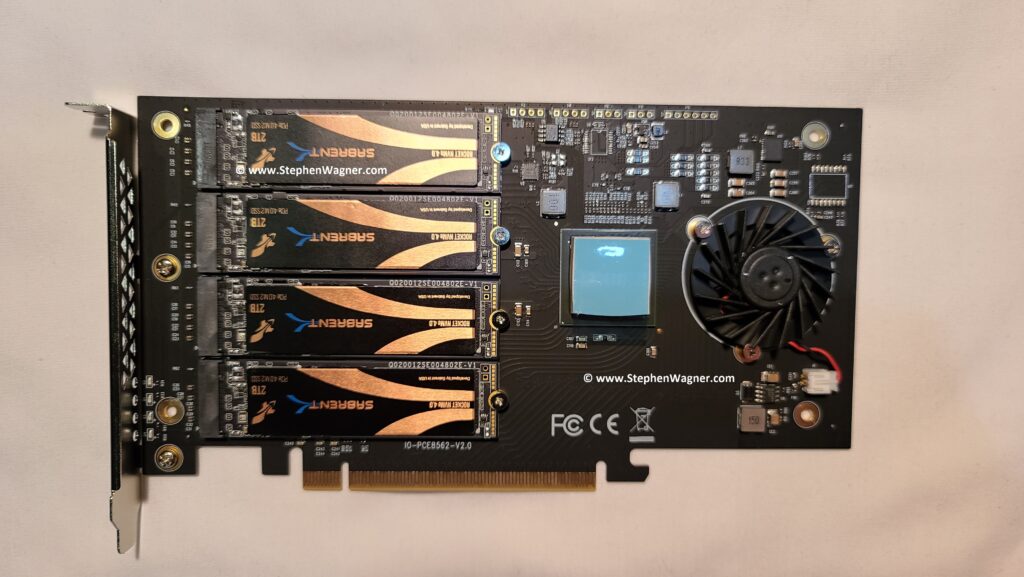

Well, after all that research I mentioned at the beginning of the post, I installed an IOCREST IO-PEX40152 inside of an HPE Proliant DL360p Gen8 to add NVMe capabilities to the server.

At first I was concerned about dimensions as technically the card did fit, but technically it didn’t. I bought it anyways, along with 4 X 2TB Sabrent Rocket 4 NVMe SSDs.

The end result?

IMPORTANT: Due to the airflow of the server, I highly recommend disconnecting and removing the fan built in to the IO-PEX40152. The DL360p server will create more than enough airflow and could cause the fan to spin up, generate electricity, and damage the card and NVME SSD.

Also, do not attempt to install the case cover, additional modification is required (see below).

The Fit

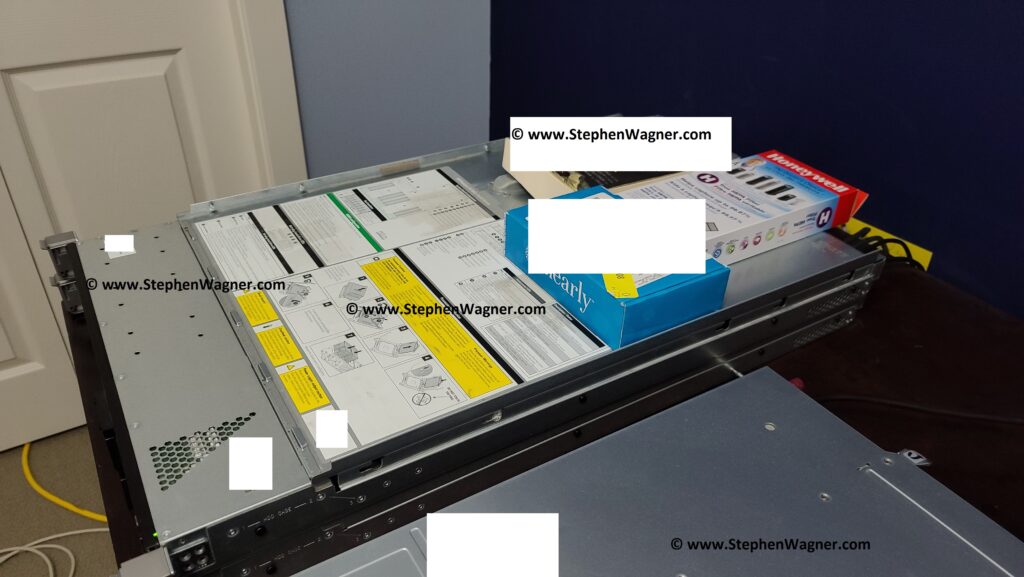

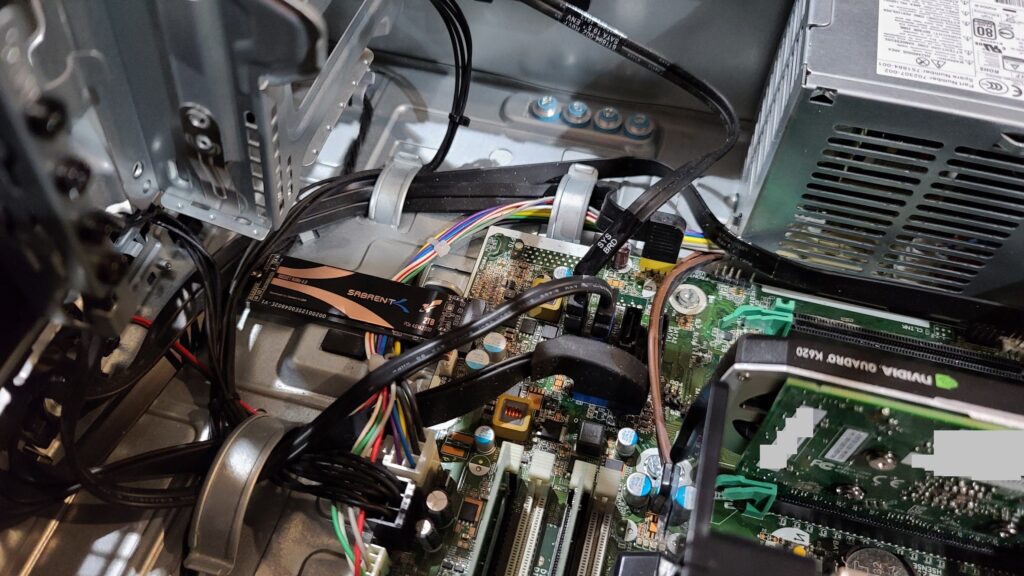

Installing the card inside of the PCIe riser was easy, but snug. The metal heatsink actually comes in to contact with the metal on the PCIe riser.

You’ll notice how the card just barely fits inside of the 1U server. Some effort needs to be put in to get it installed properly.

There are ribbon cables (and plastic fittings) directly where the end of the card goes, so you need to gently push these down and push cables to the side where there’s a small amount of thin room available.

We can’t put the case back on… Yet!

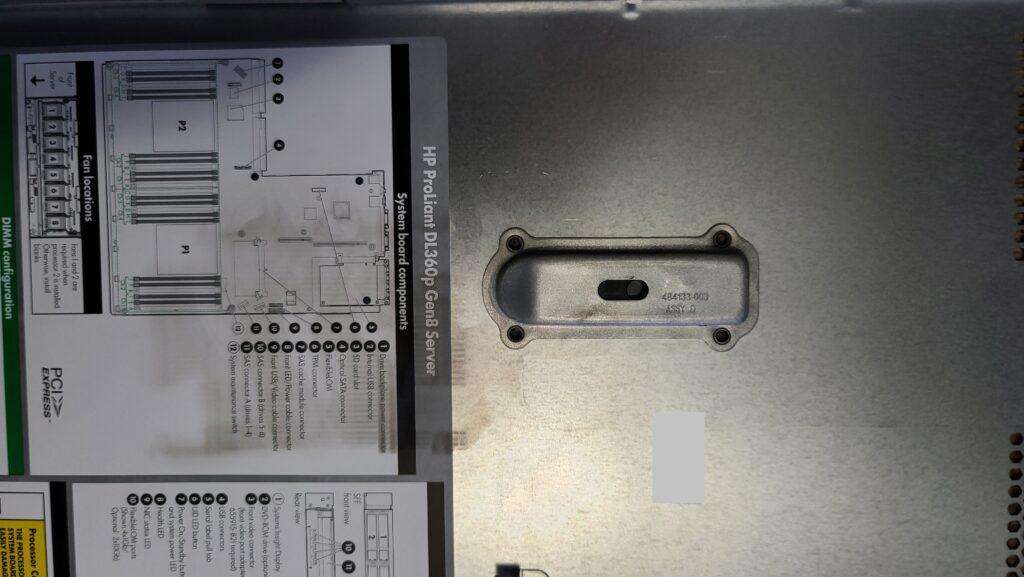

Unfortunately, just when I thought I was in the clear, I realized the case of the server cannot be installed. The metal bracket and locking mechanism on the case cover needs the space where a portion of the heatsink goes. Attempting to install this will cause it to hit the card.

The above photo shows the locking mechanism protruding out of the case cover. This will hit the card (with the IOCREST IO-PEX40152 heatsink installed). If the heatsink is removed, the case might gently touch the card in it’s unlocked and recessed position, but from my measurements clears the card when locked fully and fully closed.

I had to come up with a temporary fix while I figure out what to do. Flip the lid and weight it down.

For stability and other tests, I simply put the case cover on upside down and weighed it down with weights. Cooling is working great and even under high load I haven’t seen the SSD’s go above 38 Celsius.

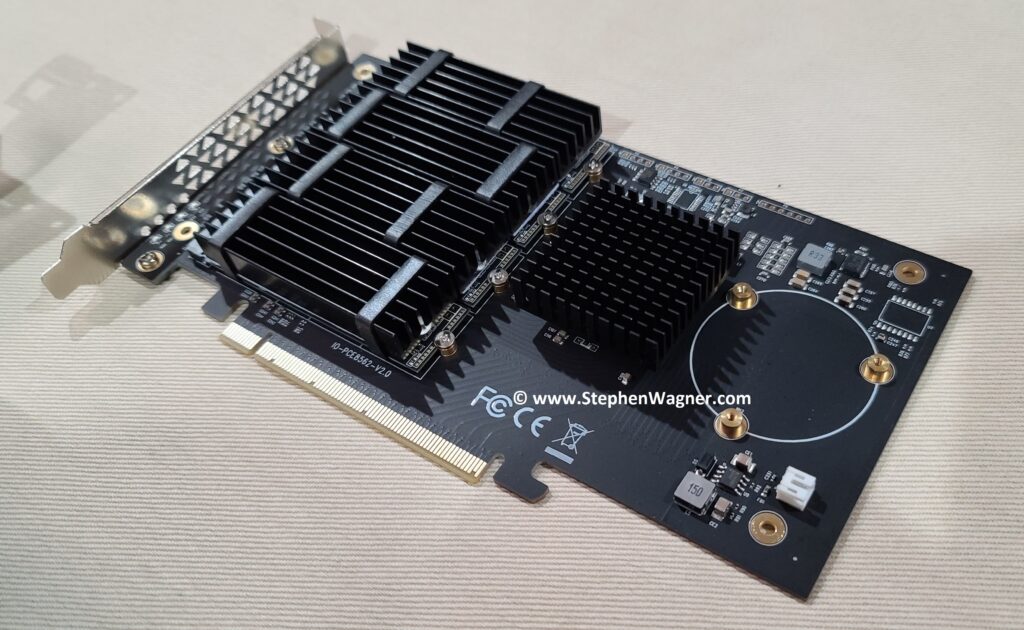

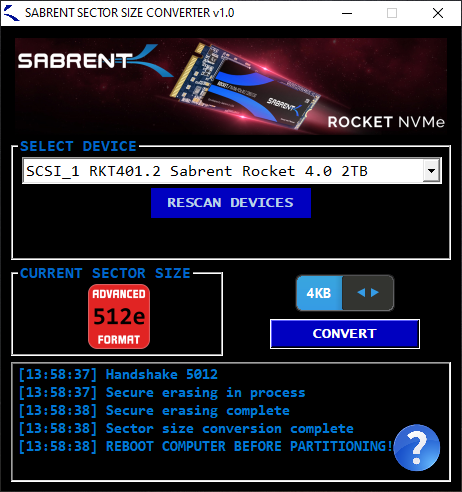

The plan moving forward was to remove the IO-PEX40152 heatsink, and install individual heatsinks on the NVME SSD as well as the PEX PCIe switch chip. This should clear up enough room for the case cover to be installed properly.

The fix

I went on to Amazon and purchased the following items:

4 x GLOTRENDS M.2 NVMe SSD Heatsink for 2280 M.2 SSD

1 x BNTECHGO 4 Pcs 40mm x 40mm x 11mm Black Aluminum Heat Sink Cooling Fin

They arrived within days with Amazon Prime. I started to install them.

And now we install it in the DL360p Gen8 PCIe riser and install it in to the server.

You’ll notice it’s a nice fit! I had to compress some of the heat conductive goo on the PFX chip heatsink as the heatsink was slightly too high by 1/16th of an inch. After doing this it fit nicely.

Also, note the one of the cable/ribbon connectors by the SAS connections. I re-routed on of the cables between the SAS connectors they could be folded and lay under the card instead of pushing straight up in to the end of the card.

As I mentioned above, the locking mechanism on the case cover may come in to contact with the bottom of the IOCREST card when it’s in the unlocked and recessed position. With this setup, do not unlock the case or open the case when the server is running/plugged in as it may short the board. I have confirmed when it’s closed and locked, it clears the card. To avoid “accidents” I may come up with a non-conductive cover for the chips it hits (to the left of the fan connector on the card in the image).

And with that, we’ve closed the case on this project…

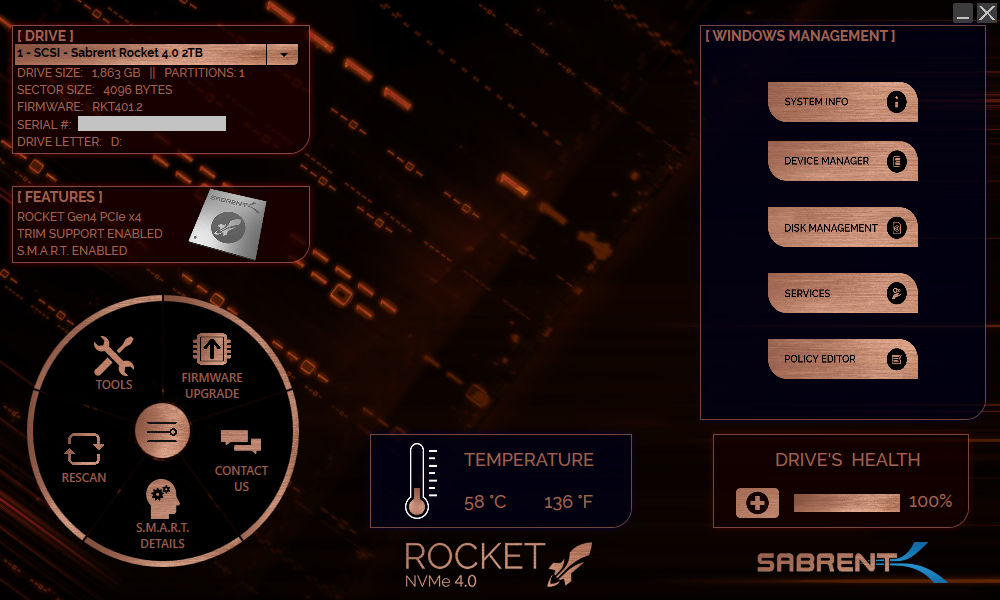

One interesting thing to note is that the NVME SSD are running around 4-6 Celsius cooler post-modification with custom heatsinks than with the stock heatsink. I believe this is due to the awesome airflow achieved in the Proliant DL360 servers.

Conclusion

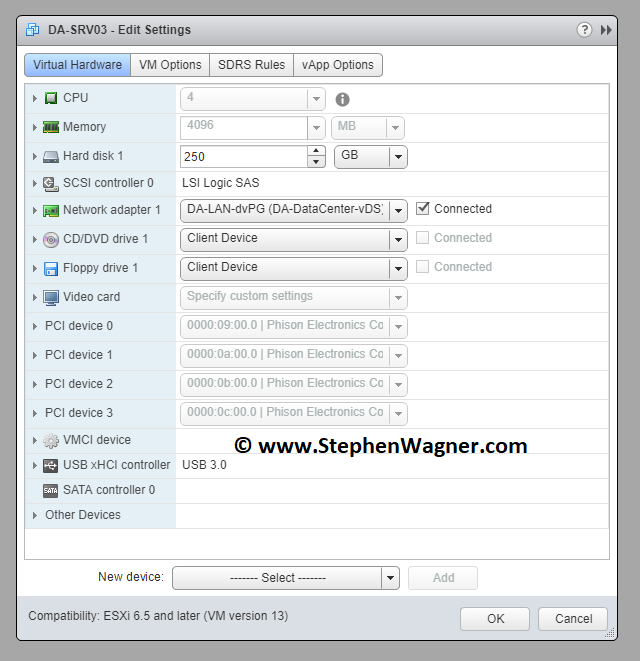

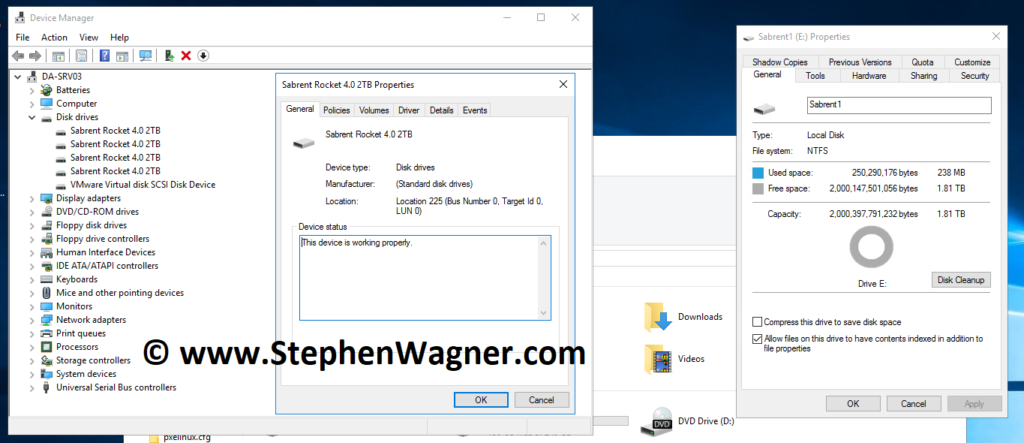

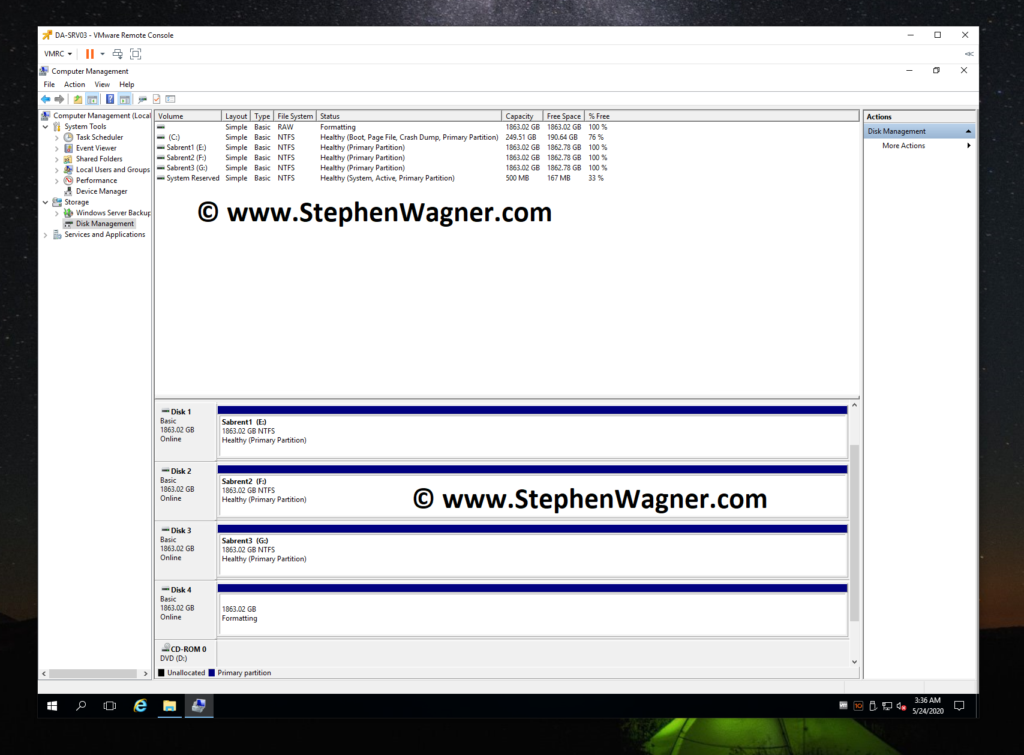

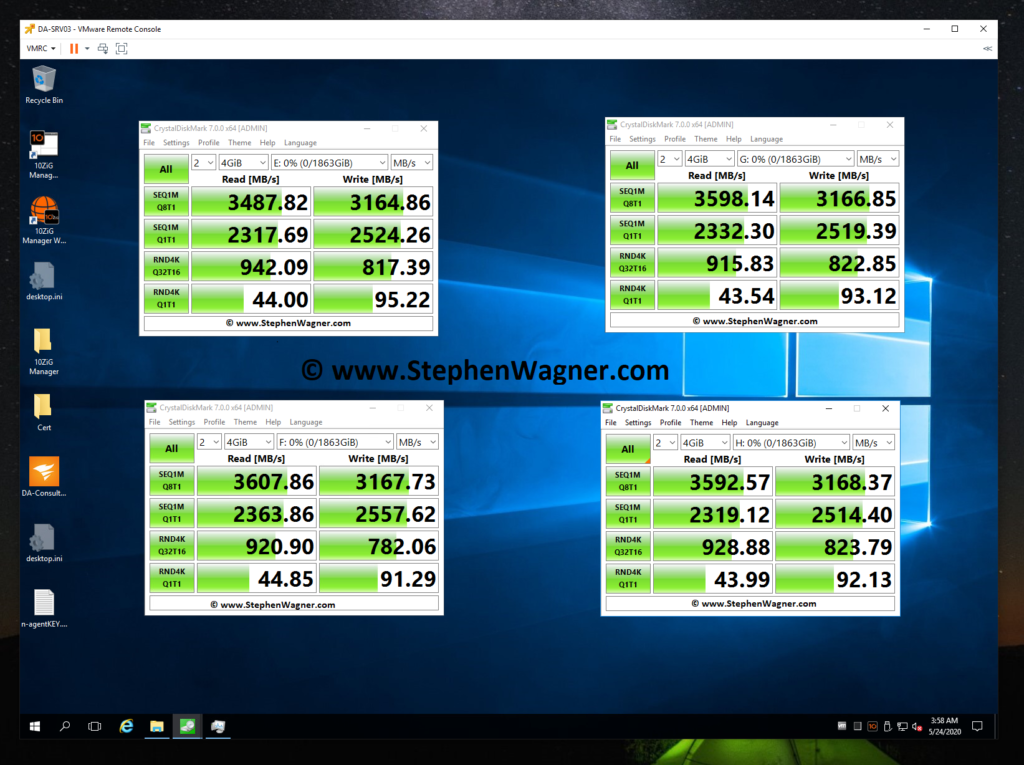

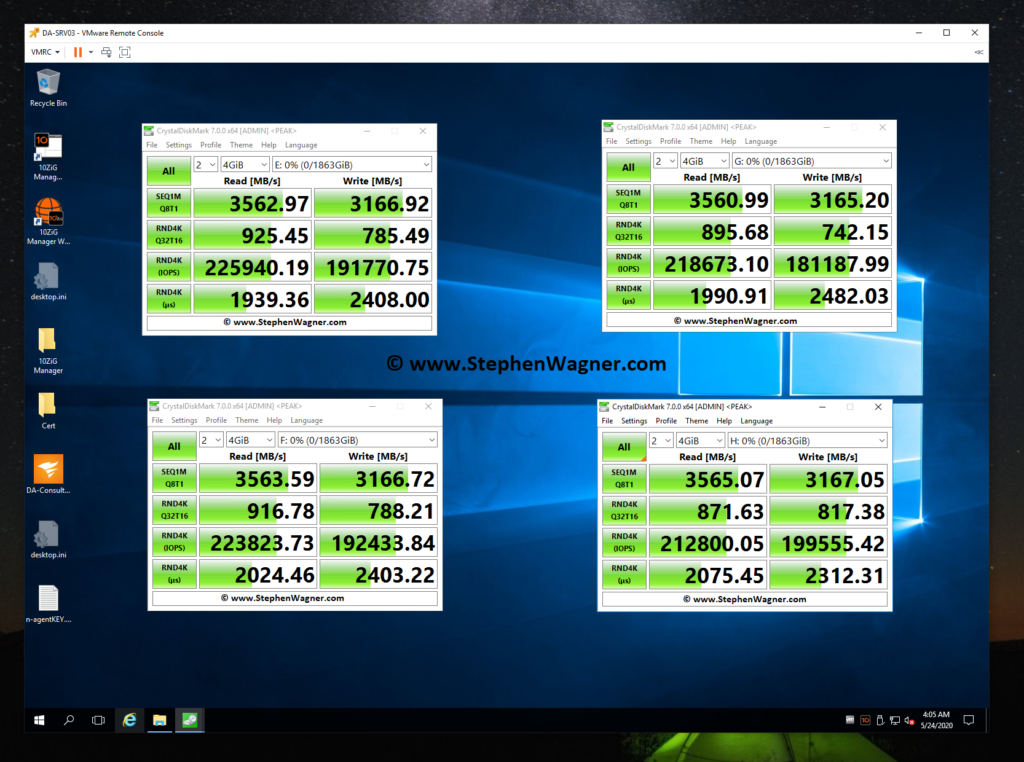

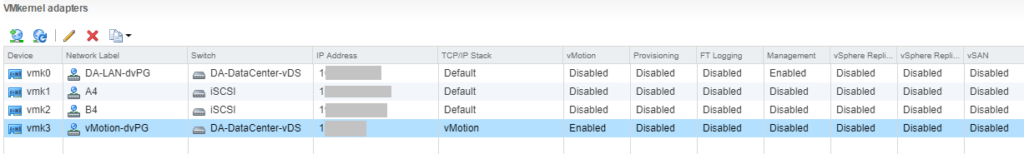

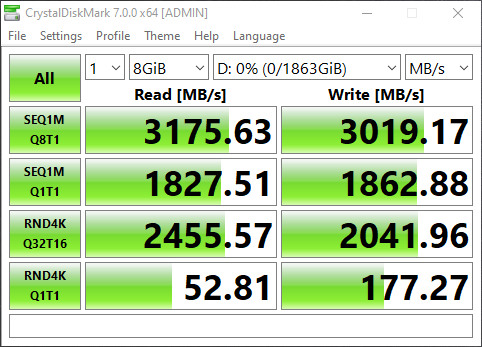

I’ve been running this configuration for 6 days now stress-testing and it’s been working great. With the server running VMware ESXi 6.5 U3, I am able to passthrough the individual NVME SSD to virtual machines. Best of all, installing this card did not cause the fans to spin up which is often the case when using non-HPE PCIe cards.

This is the perfect mod to add NVME storage to your server, or even try out technology like VMware vSAN. I have a number of cool projects coming up using this that I’m excited to share.