I’ve had the HPE MSA 2040 setup, configured, and running for about a week now. Thankfully this weekend I had some time to hit some benchmarks. Let’s take a look at the HPE MSA 2040 benchmarks on read, write, and IOPS.

First some info on the setup:

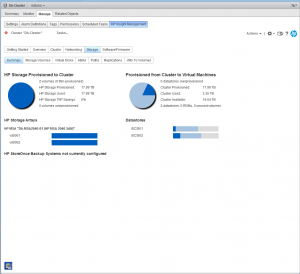

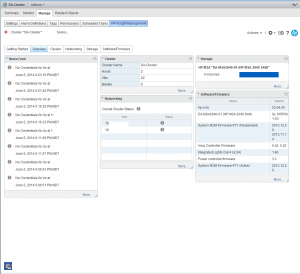

-2 X HPE Proliant DL360p Gen8 Servers (2 X 10 Core processors each, 128GB RAM each)

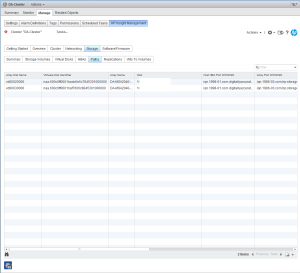

-HPE MSA 2040 Dual Controller – Configured for iSCSI

-HPE MSA 2040 is equipped with 24 X 900GB SAS Dual Port Enterprise Drives

-Each host is directly attached via 2 X 10Gb DAC cables (Each server has 1 DAC cable going to controller A, and Each server has 1 DAC cable going to controller B)

-2 vDisks are configured, each owned by a separate controller

-Disks 1-12 configured as RAID 5 owned by Controller A (512K Chunk Size Set)

-Disks 13-24 configured as RAID 5 owned by Controller B (512K Chunk Size Set)

-While round robin is configured, only one optimized path exists (only one path is being used) for each host to the datastore I tested

-Utilized “VMWare I/O Analyzer” (https://labs.vmware.com/flings/io-analyzer) which uses IOMeter for testing

-Running 2 “VMWare I/O Analyzer” VMs as worker processes. Both workers are testing at the same time, testing the same datastore.

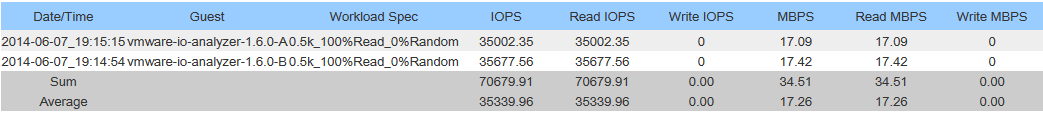

Sequential Read Speed:

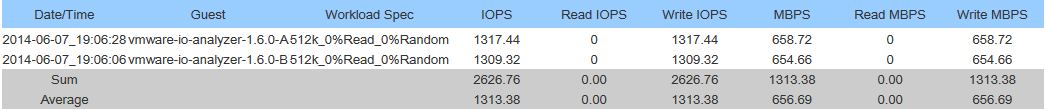

Sequential Write Speed:

See below for IOPS (Max Throughput) testing:

Please note: The MaxIOPS and MaxWriteIOPS workloads were used. These workloads don’t have any randomness, so I’m assuming the cache module answered all the I/O requests, however I could be wrong. Tests were run for 120 seconds. What this means is that this is more of a test of what the controller is capable of handling itself over a single 10Gb link from the controller to the host.

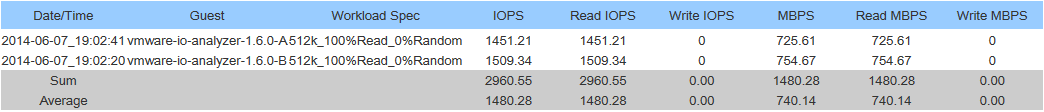

IOPS Read Testing:

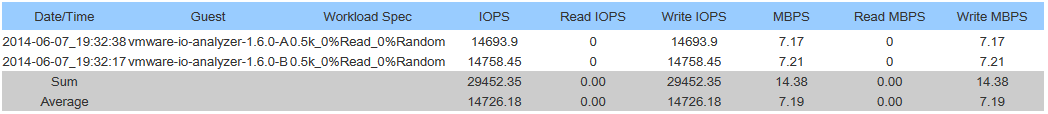

IOPS Write Testing:

PLEASE NOTE:

-These benchmarks were done by 2 seperate worker processes (1 running on each ESXi host) accessing the same datastore.

-I was running a VMWare vDP replication in the background (My bad, I know…).

-Sum is combined throughput of both hosts, Average is per host throughput.

Conclusion:

Holy crap this is fast! I’m betting the speed limit I’m hitting is the 10Gb interface. I need to get some more paths setup to the SAN!

Cheers

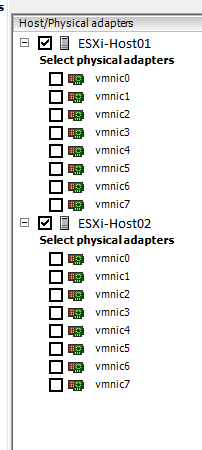

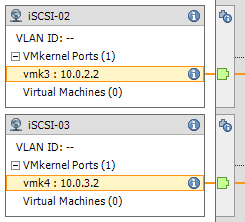

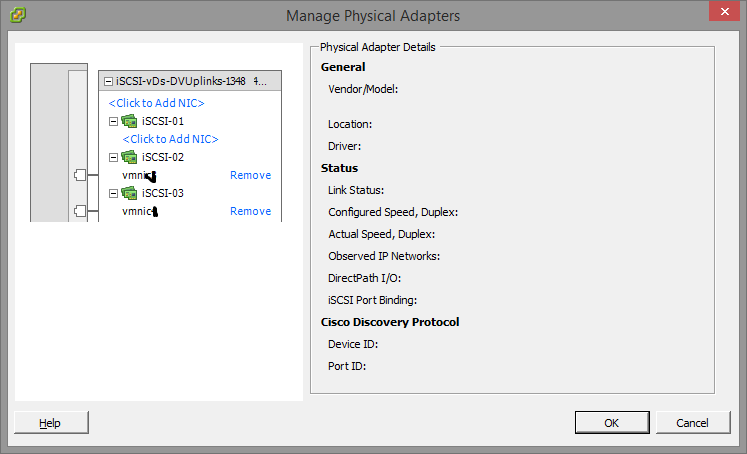

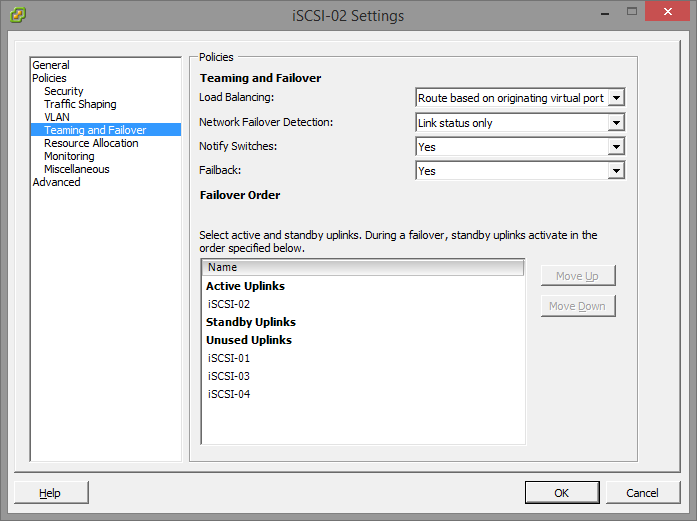

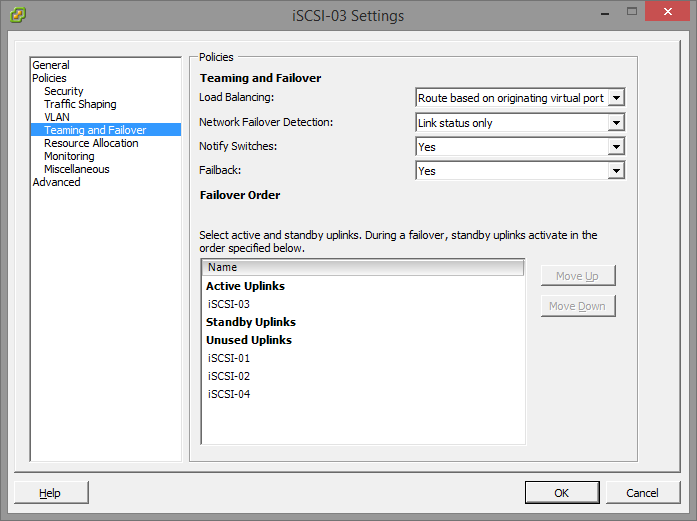

You’ll notice that the iSCSI-02 port group, only has the iSCSI-02 uplink marked as active. Also, the iSCSI-03 port group, only have the iSCSI-03 uplink marked as active. The same applies to iSCSI-01, and any other links you have (more if you have more links). Please ignore the entry for “iSCSI-04”, I created this for something else, pretend the entry isn’t there. If you do have 4 subnets, and 4 NICs, then you would have a 4th port group.

You’ll notice that the iSCSI-02 port group, only has the iSCSI-02 uplink marked as active. Also, the iSCSI-03 port group, only have the iSCSI-03 uplink marked as active. The same applies to iSCSI-01, and any other links you have (more if you have more links). Please ignore the entry for “iSCSI-04”, I created this for something else, pretend the entry isn’t there. If you do have 4 subnets, and 4 NICs, then you would have a 4th port group.