In the last few months, my company (Digitally Accurate Inc.) and our sister company (Wagner Consulting Services), have been working on a number of new cool projects. As a result of this, we needed to purchase more servers, and implement an enterprise grade SAN. This is how we got started with the HPE MSA 2040 SAN (formerly known as the HP MSA 2040 SAN), specifically a fully loaded HPE MSA 2040 Dual Controller SAN unit.

The Purchase

For the server, we purchased another HPE ProLiant DL360p Gen8 (with 2 X 10 Core Processors and 128GB of RAM, the exact same as our existing server); however, I won’t be getting into that in this blog post.

Now for storage, we decided to pull the trigger and purchase an HPE MSA 2040 Dual Controller SAN. We purchased it as a CTO (Configure to Order), and loaded it up with 4 X 1Gb iSCSI RJ45 SFP+ modules (there’s a minimum requirement of one 4-pack of SFP modules), and 24 X HPE 900GB 2.5inch 10k RPM SAS Dual Port Enterprise drives. Even though we have the four 1Gb iSCSI modules, we aren’t using them to connect to the SAN. We also placed an order for 4 X 10Gb DAC cables.

To connect the SAN to the servers, we purchased 2 X HPE Dual Port 10Gb Server SFP+ NICs, one for each server. The SAN will connect to each server with 2 X 10Gb DAC cables, one going to Controller A, and one going to Controller B.

HPE MSA 2040 Configuration

I must say that configuration was an absolute breeze. As always, using intelligent provisioning on the DL360p, we had ESXi up and running in seconds with it installed to the on-board 8GB micro-sd card.

I’m completely new to the MSA 2040 SAN and have actually never played with, or configured one. After turning it on, I immediately went to HPE’s website and downloaded the latest firmware for both the drives, and the controllers themselves. It’s a well known fact that to enable iSCSI on the unit, you have to have the controllers running the latest firmware version.

Turning on the unit, I noticed the management NIC on the controllers quickly grabbed an IP from my DHCP server. Logging in, I found the web interface extremely easy to use. Right away I went to the firmware upgrade section, and uploaded the appropriate firmware file for the 24 X 900GB drives. The firmware took seconds to flash. I went ahead and restarted the entire storage unit to make sure that the drives were restarted with the flashed firmware (a proper shutdown of course).

While you can update the controller firmware with the web interface, I chose not to do this as HPE provides a Windows executable that will connect to the management interface and update both controllers. Even though I didn’t have the unit configured yet, it’s a very interesting process that occurs. You can do live controller firmware updates with a Dual Controller MSA 2040 (as in no downtime). The way this works is, the firmware update utility first updates Controller A. If you have a multipath (MPIO) configuration where your hosts are configured to use both controllers, all I/O is passed to the other controller while the firmware update takes place. When it is complete, I/O resumes on that controller and the firmware update then takes place on the other controller. This allows you to do online firmware updates that will result in absolutely ZERO downtime. Very neat! PLEASE REMEMBER, this does not apply to drive firmware updates. When you update the hard drive firmware, there can be ZERO I/O occurring. You’d want to make sure all your connected hosts are offline, and no software connection exists to the SAN.
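To make the ordering concrete, here is a minimal Python sketch of that rolling update. It is only a toy model of the sequence described above, not HPE's utility: the `Controller` class, the `rolling_update` function, and the "old"/"new" firmware labels are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Controller:
    name: str
    firmware: str
    online: bool = True


def rolling_update(controllers, new_firmware):
    """Update one controller at a time.

    Returns a log of (controller being updated, controllers serving I/O).
    """
    log = []
    for target in controllers:
        # Only the target goes out of service; MPIO hosts shift I/O to the partner.
        target.online = False
        serving = [c.name for c in controllers if c.online]
        if not serving:
            raise RuntimeError("no controller left to serve I/O")
        log.append((target.name, serving))
        target.firmware = new_firmware
        target.online = True  # I/O resumes on this controller
    return log


if __name__ == "__main__":
    pair = [Controller("A", "old"), Controller("B", "old")]
    for updating, serving in rolling_update(pair, "new"):
        print(f"updating {updating}, I/O served by {serving}")
```

The point of the sketch is the invariant: at every step at least one controller is still online, which is why hosts with multipathing see no downtime. With a single controller the same loop would raise the error.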

Anyways, the firmware update completed successfully. Now it was time to configure the unit and start playing. I read through a couple quick documents on where to get started. If I did this right the first time, I wouldn’t have to bother doing it again.

I used the wizards available to first configure the actual storage, and then the provisioning and mapping to the hosts. When deploying a SAN, you should always write down and create a map of your Storage Area Network topology. It helps when it comes time to configure, and really helps with reducing mistakes in the configuration. I quickly jotted down the IP configuration for the various ports on each controller, the IPs I was going to assign to the NICs on the servers, and drew out a quick diagram as to how things would connect.

Since the MSA 2040 is a Dual Controller SAN, you want to make sure that each host can directly access both controllers at a minimum. Therefore, in my configuration with a NIC with 2 ports, port 1 on the NIC would connect to a port on controller A of the SAN, while port 2 would connect to controller B on the SAN. When you do this and configure all the software properly (VMware in my case), you can create a configuration that allows load balancing and fault tolerance. Keep in mind that in the Active/Active design of the MSA 2040, each controller has ownership of its configured vDisks. Most I/O will go only through the controller that owns that vDisk, but in the event that controller goes down, I/O will jump over to the other controller and proceed uninterrupted until you resolve the fault.
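The ownership and failover behaviour can be sketched in a few lines of Python. This is a simplified model for illustration only: the `HOST_LINKS` table and the `pick_port` function are hypothetical names, and real path selection is done by the hypervisor's multipathing layer, not by code like this.

```python
# NIC port -> controller it is cabled to (port 1 to A, port 2 to B)
HOST_LINKS = {"port1": "A", "port2": "B"}


def pick_port(vdisk_owner, controllers_up, links=HOST_LINKS):
    """Choose the NIC port a host would use to reach a vDisk."""
    # Normal case: use the port wired to the controller that owns the vDisk.
    for port, controller in links.items():
        if controller == vdisk_owner and controller in controllers_up:
            return port
    # Failover: the owner is down, so use any port to a surviving controller.
    for port, controller in links.items():
        if controller in controllers_up:
            return port
    raise RuntimeError("no controller reachable")


if __name__ == "__main__":
    print(pick_port("A", {"A", "B"}))  # owner is up
    print(pick_port("A", {"B"}))       # owner is down, partner takes over
```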

First part, I had to run the configuration wizard and set the various environment settings. This includes time, management port settings, unit names, friendly names, and most importantly host connection settings. I configured all the host ports for iSCSI and set the applicable IP addresses that I created in my SAN topology document in the above paragraph. Although the host ports can sit on the same subnets, it is best practice to use multiple subnets.
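For anyone drawing up their own topology document, here is a small Python sketch that generates an addressing plan with one subnet per host-to-controller link. The `10.10.0.0/22` range, the host names `esxi1`/`esxi2`, and the `.1`/`.2` addressing convention are assumptions picked for the example and are not the addresses I actually used.

```python
import ipaddress


def build_plan(base="10.10.0.0/22", hosts=("esxi1", "esxi2"), controllers=("A", "B")):
    """Give every host-to-controller link its own /24.

    The first usable address goes to the SAN port, the second to the host NIC.
    """
    subnets = ipaddress.ip_network(base).subnets(new_prefix=24)
    plan = []
    for host in hosts:
        for controller in controllers:
            net = next(subnets)  # raises StopIteration if the base range is too small
            san_ip, host_ip = list(net.hosts())[:2]
            plan.append({
                "host": host,
                "controller": controller,
                "subnet": net,
                "san_ip": san_ip,
                "host_ip": host_ip,
            })
    return plan


if __name__ == "__main__":
    for link in build_plan():
        print(f"{link['host']} -> controller {link['controller']}: "
              f"{link['subnet']} (SAN {link['san_ip']}, host {link['host_ip']})")
```

With 2 hosts and 2 controllers this yields 4 links on 4 separate subnets, which keeps each iSCSI path unambiguous when you sit down to configure the NICs.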

Jumping in to the storage provisioning wizard, I decided to create 2 separate RAID 5 arrays. The first array contains disks 1 to 12 (and while I have controller ownership set to auto, it will be assigned to controller A), and the second array contains disks 13 to 24 (again ownership is set to auto, but it will be assigned to controller B). After this, I assigned the LUN numbers, and then mapped the LUNs to all ports on the MSA 2040, ultimately allowing access to both iSCSI targets (and RAID volumes) from any port.
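As a quick sanity check on capacity, RAID 5 gives up one disk's worth of space per array to parity. The Python sketch below works that out for this layout. It uses the drives' marketed decimal size (900GB) and ignores any formatting or controller overhead, so treat the result as an upper bound and not what the unit will report.

```python
def raid5_usable_gb(disks, disk_gb):
    """Usable capacity of one RAID 5 array: one disk's worth goes to parity."""
    if disks < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    return (disks - 1) * disk_gb


if __name__ == "__main__":
    arrays = {"array 1 (disks 1-12)": 12, "array 2 (disks 13-24)": 12}
    total = 0
    for name, disks in arrays.items():
        usable = raid5_usable_gb(disks, 900)
        total += usable
        print(f"{name}: {usable} GB usable")
    print(f"total: {total} GB usable")
```

For two 12-disk arrays of 900GB drives that works out to 9,900GB per array and 19,800GB in total, before overhead.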

I’m now sitting here thinking “This was too easy”. And it turns out it was just that easy! The RAID volumes started to initialize.

VMware vSphere Configuration

At this point, I jumped on to my vSphere demo environment and configured the vDistributed iSCSI switches. I mapped the various uplinks to the various portgroups, and confirmed that there was hardware link connectivity. I jumped in to the software iSCSI initiator, typed in the discovery IP, and BAM! The iSCSI initiator found all available paths, and both RAID disks I configured. I did this for the other host as well, connected to the iSCSI target, formatted the volumes as VMFS and I was done!

I’m still shocked that such a high performance and powerful unit was this easy to configure and get running. I’ve had it running for 24 hours now and have had no problems. This DESTROYS my old storage configuration in performance, thankfully I can keep my old setup for a vDP (VMWare Data Protection) instance.

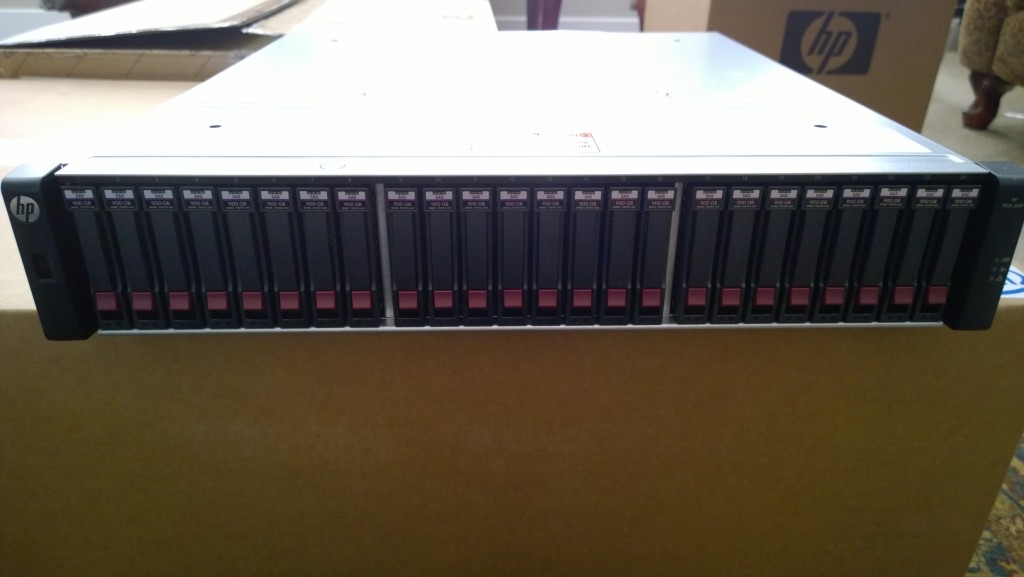

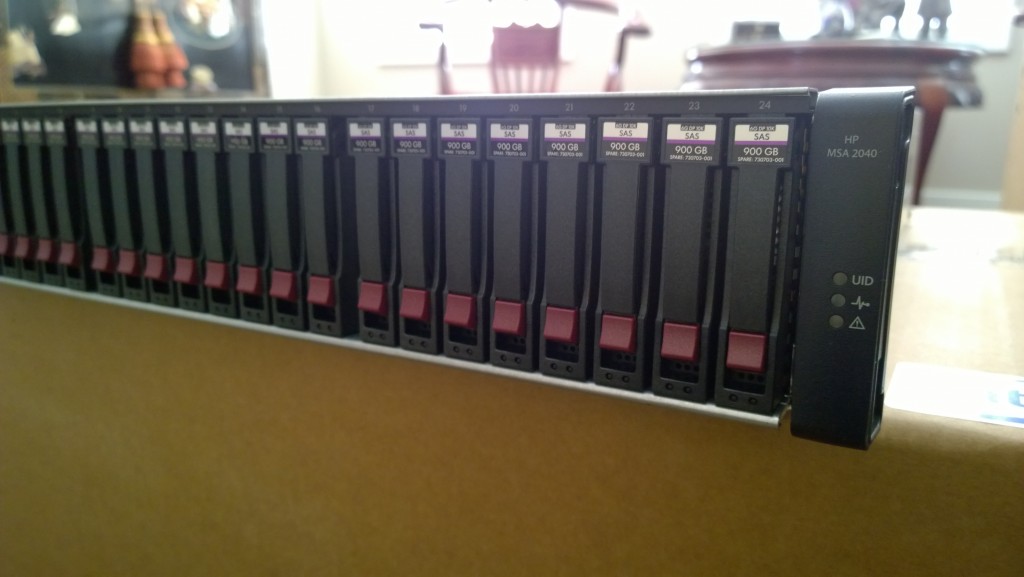

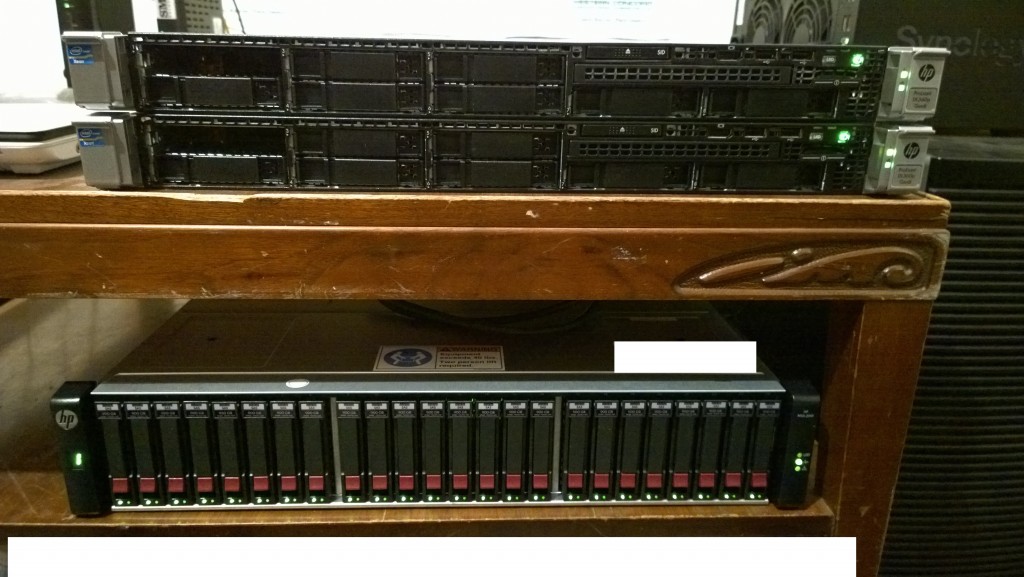

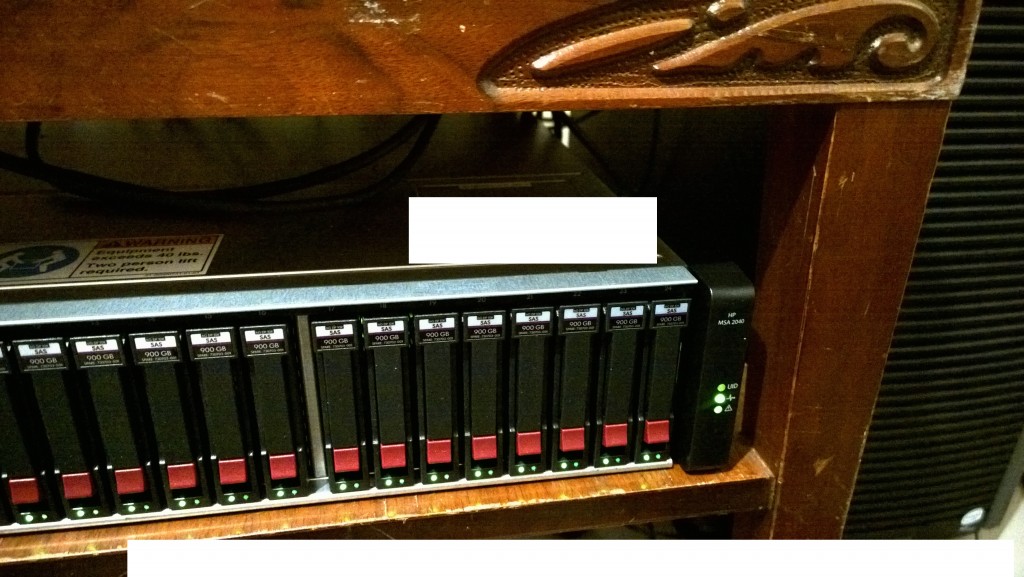

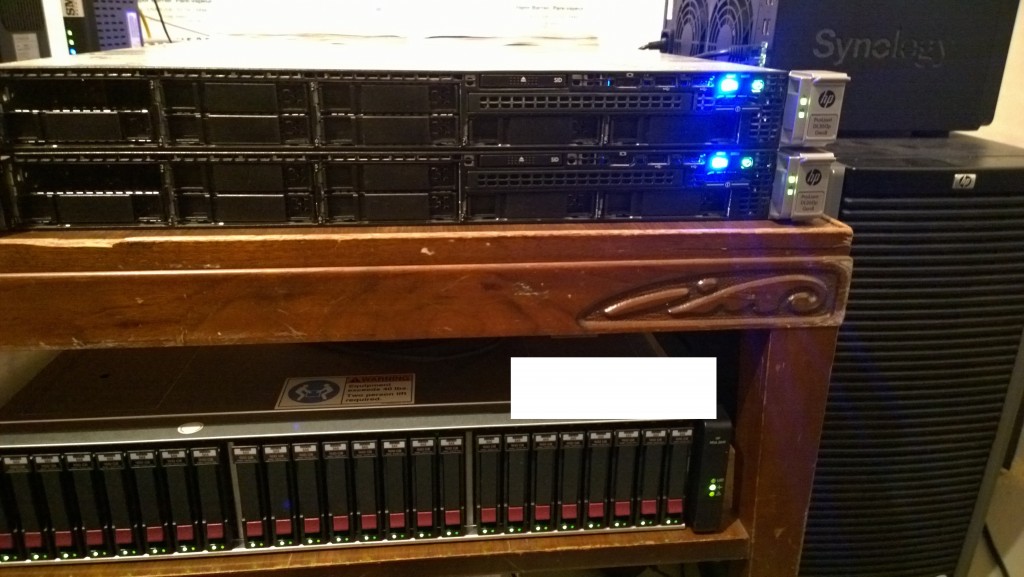

HPE MSA 2040 Pictures

I’ve attached some pics below. I have to apologize for how ghetto the images/setup is. Keep in mind this is a test demo environment for showcasing the technologies and their capabilities.

HP MSA 2040 Dual Controller SAN

HP MSA 2040 Dual Controller SAN

VMWare vSphere

Update: HPE has updated the MSA product line and the 2040 has now been replaced by the HPE MSA 2050 SAN Dual Controller SAN. There are now also SSD Cache models such as the HPE MSA 2052 Dual Controller SAN.

Congrats on the new SAN Stephen. It’s pretty nice, but it better be for ~30K. Yikes!

Do you have any performance numbers?

Ash

Hi Ash,

No performance numbers yet, but let’s just say using the performance monitor on the web interface on the MSA, I’ve seen it hit 1GB/sec!

Copying large files (from and to the same vmdk) is hitting over 400MB/sec, which is absolutely insane, especially copying from and to the same location.

During boot storms, there’s absolutely no delays, and the VMs are EXTREMELY responsive even under extremely high load.

In my configuration I only have 2 links from each host to the MSA, and because of LUN ownership only 1 link is optimized (and used), the other is for fail over and redundancy.

We have some demo applications (including a full demo SAP instance) running on it, and it just purrs.

Love it!

I’ll just have to get something up and posted soon.

Stephen

Hi, very useful post…

I have decided to create in my lab a new vmware environment with 2x HP DL360p Gen8 (2 CPU with 8 Core, 48Gb RAM, SDHC for ESXi 5.5, 8 NIC 1Gb) + 1 HP MSA 2040 dual controller with 9 600Gb SAS + 8 SFP transceivers 1Gb iSCSI.

I’m planning to configure the MSA with 3 vdisks: the 1° vdisk as RAID5 with physical disks 1 to 3, the 2° vdisk as RAID5 with disks 4 to 6, the 3° vdisk as RAID1 with disks 7 to 8, and a global spare drive with disk 9.

Then for each vdisk I create one volume with the entire capacity of the vdisk, and then for each volume, I create one LUN per VM. The 3° vdisk I would like to use for the replica of any VM.

In your opinion, is my configuration OK?

Thanks a lot

Bye

Fabio

Hello Fabio,

That should work!

I’m curious about what you mean by: “The 3° vdisk i would like to use for the replica on any VM.” What are you planning on using this for? I just don’t know what you mean by replica.

Keep in mind that the more disks you have in a RAID volume, the more speed you get. With small volumes like these, you may be somewhat limited on speed.

Have you thought about creating 2 volumes with 4 disks each, and then 1 global spare? This may increase your performance a bit.

Stephen

Sorry, by replica I mean the replication of any VMs, for example with software such as vSphere Replication or Trilead VM Explorer Pro. With this scenario, if any VM fails, I can use the replicated VM.

Is it a good choice to use the 3° vdisk, or is it best to use secondary storage?

Hi Fabio,

Thanks for clearing that up. Actually that would work great the way you originally planned it. Just make sure you provision enough storage.

One other thing I want to mention (I found this out after I provisioned everything), if you want to use the Storage snapshot capabilities, you’ll need to leave free space on the RAID volumes. I tried to snap my storage the other day and was unable to.

Let me know if you have any other questions and I’ll do my best to help out! I’m pretty new to the unit myself, but it’s very simple and easy to configure. Super powerful device!

Stephen

Thanks, the software for snapshot is in bundle with the MSA 2040?

Hi Fabio,

Storage snapshot capabilities are built in to the MSA 2040. A standard MSA 2040 supports up to 64 snapshots. An additional license can be purchased that allows up to 512 snapshots.

good, very good..

When the MSA will arrive in my lab, I’ll start to play with it….

Thanks a lot

Very interesting article.

This is exactly the setup I was about to order for my company when local HP folks said that such a setup may not work and I should consider a 10G Ethernet switch for iSCSI traffic. Could you please share a photo that shows the servers directly connected to the MSA through the DAC cable? That might convince them!

Regards

Hello Satish,

It is indeed a supported configuration. I was extremely nervous too before ordering since there is no mention of it inside the QuickSpecs or in articles on the internet; however, since I’m an HP Partner, I have access to internal HP resources to verify configurations and confirm which ones are indeed supported.

Just so you know I used these Part#s:

665249-B21 – HEWLETT PACKARD : HP Ethernet 10Gb 2-port 560SFP+ Adapter (Server NICs)

487655-B21 – HEWLETT PACKARD : HP BladeSystem c-Class Small Form-Factor Pluggable 3m 10GbE Copper Cable

I know that the cable (the second item) mentions that it is for BladeSystem, but you can safely ignore that.

Please Note: the MSA2040 does need to be purchased with at least 1-pack of SFP+ modules (due to HP ordering rules). I chose the 4-pack of the 1Gb RJ45 iSCSI SFP+ modules. Note that I have these in the unit, but am NOT using them at all. I have the servers connected only via the DAC cables (each server has 1 DAC cable to Controller A, and 1 DAC cable going to Controller B).

I do have a picture I can send you, let me know if you want me to e-mail you. I can also answer any questions you may have if you want me to e-mail you.

Thanks,

Stephen

Hey Stephen,

Great post. I’ve also got the MSA 2040 with the 900GB SAS drives, connected through dual 12Gb SAS controllers to 2x HP DL380 G8 ESXi hosts. It’s a fantastic and fast system. Better than the old Dell MD1000 I had 😉

Setup was indeed a breeze. I was torn between the FC and the SAS controllers, and I chose SAS. I only have 2 ESXi hosts with VMware Essentials Plus, so 3 hosts max.

During the data migration from our old data server to the MSA with robocopy, I saw the data copy at around 700MB/s (for large files and during the weekend).

Backup will be done with Veeam, going to disk and then to tape. The backup server is connected with a 6Gb SAS HBA directly to MSA port 4, and an LTO-5 tape library is connected to the backup server, also with a 6Gb HBA.

I’m very pleased with my setup.

Hi Lars,

Glad to hear it’s working out so well for you! I’m still surprised how awesome this SAN is. Literally I set it up (with barely any experience), and it’s just been sitting there, working, with no issues since! Love the performance as well. And nice touch with the LTO-5 tape library. I fell in love with the HP MSL tape libraries back a few years ago, the performance on those are always pretty impressive!

I’m hoping I’ll be rolling these out for clients soon when it’s time to migrate to a virtualized infrastructure!

Cheers,

Stephen

Hi Stephen,

Thanks for sharing. About to purchase the 2040 and this configuration could appeal to us.

I take it you have 2 x 10Gb DAC links per controller (and 2 x unused 1Gb iSCSI SFPs per controller)?

So with the 10Gb links between your hosts and SAN, are vMotion speeds super fast?

Our current SAN backbone is a 4Gb FC and we were planning to go to 6Gb SAS but this may be the better option as SAS will limit us hosts-wise in the future.

Regards,

Steve

Hello Steve,

That is correct (re: the 2 x 10Gb DACs and 2 x unused 1Gb SFP+s)…

The configuration is working great for me and I’m having absolutely no complaints. Being a small business I was worried about spending this much money on this config, but it has exceeded my expectations and has turned out to be a great investment.

For vMotion, each of my servers actually has a dual port 10G-BaseT NIC inside of it. I actually just connected a 3 foot Cat6 cable from server to server and dedicated it to vMotion. vMotion over 10Gb is awesome, it’s INCREDIBLY fast. When doing updates to my hosts, it moves 10-15 VMs (each with 6-12GB of RAM allocated) to the other host in less than 40 seconds for all.

As for Storage vMotion, I have no complaints. It sure as heck beats my old configuration. I don’t have any numbers on storage vMotion, but it is fast!

And to your comment about SAS. Originally I looked at using SAS, but ended up not touching it because of the same issue; I was concerned about adding more hosts in the future. Also, as I’m sure you’re aware, iSCSI creates more compatibility for hosts, connectivity, expansion, etc… In the far far future, I wanted to have the ability to re-purpose this unit when it comes time to perform my own infrastructure refresh.

Keep in mind with the 2040, the only thing that’s going to slow you down, is your disks and RAID level. The controllers can handle SSD drives and SSD speeds, but keep in mind, you want fast disks, you want lots of disks, and you want a fast RAID level.

Let me know if you have any questions!

Cheers,

Stephen

Hi Stephen,

Looking forward to getting my hands on the MSA and getting it configured 🙂

That is seriously fast for vMotion, sounds great.

Yeah, same thinking on iSCSI, I like the fact that we have the option of throwing in a 10GbE switch in the future if we need to scale up and connect more hosts (he says nonchalantly, ignoring the cost!).

And yep, that’s always going to be the bottleneck with mechanical disks isn’t it.

We have an I/O intensive business app with a SQL backend, which is susceptible to slowdowns due to disk I/O being thrashed!

We are a not-for-profit Housing Association though, so sadly, SSD is outwith our budget. 🙁

However, I am going to create 2 separate arrays – 1 x RAID1 and 1 x RAID5. We’ll stick the SQL Server for that app on the RAID1 array to gain the write speed (writing to 2 drives, rather than writing to 5) and the rest on the larger RAID5 array.

Obviously, we’ll lose redundancy on that data but we are prepared for that and will model our backups and DR/BC accordingly.

Thanks for info much appreciated!

Steve
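For readers weighing RAID 1 against RAID 5 for a write-heavy database, the usual rule of thumb is the "write penalty": each small random write costs 2 back-end disk operations on RAID 1 (one per mirror) and 4 on RAID 5 (read data, read parity, write data, write parity). The Python sketch below applies that rule. The workload numbers are invented for illustration, and controller write cache will change real-world results.

```python
# Back-end disk operations needed per front-end random write (rule of thumb).
WRITE_PENALTY = {"RAID1": 2, "RAID5": 4}


def backend_ios(level, reads, writes):
    """Back-end disk operations generated by a front-end read/write mix."""
    return reads + writes * WRITE_PENALTY[level]


if __name__ == "__main__":
    # Hypothetical workload: 500 reads/s and 500 writes/s from the SQL server.
    for level in WRITE_PENALTY:
        print(f"{level}: {backend_ios(level, 500, 500)} back-end I/Os per second")
```

The same front-end workload generates fewer back-end operations on RAID 1, which is the effect being counted on in the comment above.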

Hi Steve,

Can you share a picture of the rear of the MSA 2040 with the DAC cables to the DL 380 Server?

Thanks

Hi SG,

I don’t have a DL380, but I do have it connected to a DL360. If you’d like I can e-mail you a pic of the connection. Is the e-mail address you used for your blog comment valid? I can’t e-mail it to that address.

Stephen

Hi Steve,

Great blog and detailed write up on the MSA. We are a SMB consulting business, and looking to acquire a MSA 2040 for our PoC lab. From your experience, it seems that this unit is pretty snappy.

Could you kindly share that picture of the MSA and server directly connected to each other ?

Thanks a lot.

Hi Babar,

It’s running great! I’m actually doing a big implementation for a customer coming up in the next 30 days and am EXTREMELY confident that this SAN is the absolute perfect fit…

If you give me your e-mail address I can ship you off some pics!

Cheers,

Stephen

Hi…

We have recently invested in one of these units.

I have updated the FW and run the CLI command to change the SFP ports over to iSCSI.

I’m trying to connect used HP DAC cables (X242) to various different (and old) switches which have 1GbE SFP+ ports, but having little joy.

The switches I have tested are the HP 1400-24G and HP 2810-24G, as well as a small Cisco Business switch (can’t recall the model).

What I’m finding is that I can connect one controller to another and it comes up as 10Gb.

I can also use the same X242 cables to connect the switches to each other, and they come up as 1GbE.

But I can’t get any joy between the SAN and the switches.

My suspicion is that we need newer switches.

at some point i want to introduce a 10GBE switch network, but for the time being 1GBE will be fine.

Cheers

C.

Hi Colin,

Just curious, did you try connecting the MSA to the switch before issuing the CLI commands?

In my case, I never had to go in to the CLI to configure the ports as iSCSI. I’m assuming it may have been set to autodetect…

What CLI command did you use? Is there a chance you hard coded it to 10G, maybe that’s why it’s not able to connect via SFP+ at 1gb?

>did you try connecting the MSA to the switch before issuing the CLI commands?

Yes indeed but it didn’t work and the only options i had under the host port config was to set a FC speed of 16/8/4.

after much digging i came across this page

http://h20564.www2.hp.com/hpsc/doc/public/display?docId=mmr_kc-0119653&sp4ts.oid=5386548

what it says is that to change from only FC mode you have to have firmware XXX or above (which we had) and you need to run a couple of commands.

if you want only iSCSI then you run

# set host-port-mode iSCSI

If you want a mix of FC and iSCSI then you run

# set host-port-mode FC-and-iSCSI

After a restart you then see different configuration options in the Host Port configuration for IP addressing and the like.

I’m fairly sure my issue is the DAC cables I’m using.

they are HP X242

http://www8.hp.com/h20195/v2/GetDocument.aspx?docname=c04168382

i have a feeling they are not playing nicely with my SAN/Switchs.

i have got a pack of 1GBE to RJ45 HP MSA transceivers on order and will report back on the result.

Oh, did you update to the absolute LATEST firmware? That could also have an effect.

The cables should be good, I don’t see that making a difference, they should be compatible with devices on both sides.

Just so you know the 1Gbe to RJ45 transceivers will work! I have 4 of these installed, however I do not use them.

Nice setup! And very interesting discussion. Can you send me the pics of the rear connected up too?

We are planning to purchase this SAN as well. We have 8 x DL380p G8 servers (2×10 cores and 128GB RAM) that we would like to connect to this new SAN and thinking of going with Fibre Channels…

What’s your input on this versus iSCSI

The 1GBE transceivers worked fine.

Now I’m fighting an issue where my ESX hosts can see the SAN but none of the devices (volumes) on it.

Whereas a Windows box has no issues.

On the SAN, the initiator remains undiscovered?

Hi Colin,

Have you created any volumes on the unit yet? If so what type?

Also, when you connect the iSCSI initiator to the MSA2040, what does it report, does it show disks, or anything?

in the end i rolled a new clean 5.5 ESXi and it worked off the bat.

it was part of my plan to re-roll the production hosts anyway so this would seem like a good time.

Current testing with a single VM managed to get around 12K random IOPS which is an improvement of 11K over our current SAN.

today we tested the tolerance of the unit.

So the test host has two nics dedicated to iSCSI.

We set them up according to this blog

http://buildvirtual.net/configuring-software-iscsi-port-binding-on-esxi-5/

Each nic goes to a separate switch and from each switch there are two cables one to each controller on the SAN.

While running an RDP session to a VM, we were able to disconnect each of the switches in turn, and we also then physically pulled out each controller one at a time.

The RDP to the VM remained up the whole time and we were able to browse / create folders to the volume mounted on the SAN.

we did see the odd pause but i suspect that was more due to the constant pulling out parts and what not.

very happy with the unit after the initial issues.
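The fault-tolerance test described in this comment can be modelled with a short Python sketch that enumerates the paths left after a failure. The component names (`nic1`, `switch1`, `ctrlA`, and so on) are labels invented for the example; the wiring follows the comment: each NIC goes to its own switch, and each switch has a cable to both controllers.

```python
# Each host NIC is cabled to its own switch.
NIC_TO_SWITCH = {"nic1": "switch1", "nic2": "switch2"}
# Each switch is cabled to both controllers.
SWITCH_TO_CTRL = {"switch1": ("ctrlA", "ctrlB"), "switch2": ("ctrlA", "ctrlB")}


def surviving_paths(failed=()):
    """List every NIC -> switch -> controller path that avoids the failed parts."""
    failed = set(failed)
    paths = []
    for nic, switch in NIC_TO_SWITCH.items():
        for ctrl in SWITCH_TO_CTRL[switch]:
            path = (nic, switch, ctrl)
            if failed.isdisjoint(path):
                paths.append(path)
    return paths


if __name__ == "__main__":
    for part in ("switch1", "switch2", "ctrlA", "ctrlB"):
        print(f"{part} pulled: {len(surviving_paths([part]))} paths remain")
```

With this wiring there are 4 paths when everything is healthy, and any single failed switch or controller still leaves 2, which matches the VM staying reachable throughout the test.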

Hi

Can you post the link to the application that can connect to the management interface and update firmware? Can’t seem to find it on the support pages. It would be easier if I know what it is called. 😛

found it …

Online ROM Flash Component for Windows – HP MSA 1040/2040 Storage Arrays

Hi Stephen,

Would you mind emailing me an image of the cable connections on this configuration? This is really close to what we are planning for replacement of our existing SAN and 2 host configuration and I appreciate you sharing this level of detail.

What are your thoughts on using 15K SAS or a SSD in the raid caching and how that may affect the iops you experienced? I haven’t found much discussion on this array from real deployments and I appreciate you sharing the performance stats in your blog, really helpful.

Cheers

Dan

Hi,

I’m trying to configure a failover cluster, but I get a Storage Spaces persistent reservation error during validation.

I have an MSA 2040 with Fibre Channel + 2 controllers.

OS: Windows Server 2012 R2

2 nodes

I created 2 Luns one for data and the second for quorum.

Can you help me to find a solution

Hi Stephen,

I’m in a process of getting same solution for our environment and I’m a bit confused about something…

According to the documentation the SAN Controller is the same for FC and iSCSI, but after a call to HP, they mentioned something that there are 2 different controllers… Now I’m waiting for some tech to enlighten us, but anyway in the mean time, maybe you can add some opinion/knowledge too…

Btw… We are aiming for iSCSI, but the FC bundle offer we have is cheaper than iSCSI… So I thought that I could get the FC bundle, ditch the SFPs in a drawer and toss in the DAC cables for the price difference…

Any opinions/suggestions are welcome…

Best regards,

Alex R.

Hi Alex,

There are two controllers, but that’s the difference between the SAN, and the SAS model.

As far as the SAN model goes, it has SFP+ module ports that are to be used with certified HP SFP+ modules (modules are available inside of the QuickSpecs document). The SAN can be used with either DAC cables, FC SFP+ modules, iSCSI SFP+ modules, or the 1GB RJ45 SFP+ modules.

You should be fine doing what you mentioned…

In my case, we use DAC. I also just configured and sold the same configuration as I have to a client of mine which is working GREAT!

Let me know if you have any specific questions…. If the unit you purchase is pre-configured, there is a chance you may have to log in to the CLI and change the ports from FC to iSCSI, but this is well documented and appears easy to do. But I wouldn’t be surprised if it just worked out of the box for you.

Cheers,

Stephen

Stephen,

When you said, “Although the host ports can sit on the same subnets, it is best practice to use multiple subnets,” did you give a different subnet to each port on both controllers?

I’m planning on implementing a solution like yours, but with one more ESXi host.

Best Regards

BM

Hi Bruno,

With 2 hosts (and 2 connections to each host), there are 4 host connections in total. Each of the 4 host connections is on a different subnet.

Stephen

Hello,

I am a French system administrator, and your blog article on “HP MSA 2040 – Double SAN controller” is great!

I do not understand English well. Is it possible to have a picture of the connection between the SAN and the HP ProLiant, please?

This picture would save me a lot of time in my research.

Thank you in advance!

Hi Stephen,

Your blog article is great and fantastic.

Can you share a diagram of the back-side connections with us?

We recently purchased the items mentioned below. They are not configured yet and are still in their packaging. Please provide some valuable suggestions for setting up this environment.

HP MSA 1040 2-port FC DC LFF storage, quantity 1

HP MSA 4TB 6G SAS, quantity 10

HP 81Q PCI-e FC HBA, quantity 4

HP 5m Multi-Mode OM3 50/125um LC/LC 8Gb FC and 10GbE Laser-enhanced cable pack, quantity 4

HP MSA 2040 8Gb SW FC SFP 4pk, quantity 1

DL380 G8 server, quantity 2

Please provide a brief idea about how to set this up.

Thanks in advance

Hi Stephen,

This post is great and I am confident that it will help us in our implementation…if we can only find the correct bloody DAC cables!!! Would you mind also emailing me some pictures of the rear of the SAN and Servers, and even better would be to know the exact model of the cables you used.

Thanks in advance.

Greg

Hi Greg,

Feel free to send me off an e-mail and I’ll send pics of the DAC connectivity…

As for the SFP+ DAC cables, I used:

487655-B21

I also recently deployed a similar solution (updated with Gen9 servers) for a client using this part for the DAC:

JD097C

Cheers,

Stephen

As Requested!!! 🙂

Video of connection to Server Hosts:

https://www.youtube.com/watch?v=6vpBz0p1iMM

MSA2040 Connection:

How can we connect the MSA 2040 SAN storage to an HP C7000 enclosure with 6125X/XG switches using SFP+ DAC cables?

Nice config!

Question: If you have two servers connected to the MSA 2040 via SAS or FC, would both servers be able to see the same volume on the MSA?

Hi Florian,

They would, and they do (in my case, and other cases where people are using VMware), but keep in mind that in any SAN or shared storage environment, you need to be using a filesystem that is considered a clustered filesystem.

Some filesystems do not allow access by multiple hosts because they were not designed to handle this, and doing so can cause corruption. Filesystems like VMFS are designed to be clustered filesystems, meaning they can be accessed by multiple hosts.

Hope this answers your question!

Cheers,

Stephen

Hi Stephen,

excellent, many thanks for your quick reply!

Cheers,

Florian

Just thought I’d drop by to say, in case anyone is reading and about to buy an MSA 2040 to use with Direct Attach Cables, this is definitely *not* a supported configuration. Like Stephen I carefully checked all the Quickspecs docs for the NIC, for the Server, for the SAN before specifying one recently. However, though they are on the list, HP categorically do *not* support direct attached 10GbE iSCSI – regardless of whether you use fibre or DAC cables. This can be verified online using their SPOCK storage compatibility resource which lists all the qualified driver versions etc. – SPOCK has a separate column for “direct connect”. It’s pretty difficult to understand what difference this could make, but the problem is that if you deploy an unsupported solution and something went wrong they would just blame that. The mitigation in my case was to convert the SAN to Fibre Channel and buy two 82Q QLogic HBAs for the hosts. This is the only supported way to use the SAN without storage switching. Be warned!

Hi Patters,

In my case HP, and distribution did verify that using DAC connection to the SAN is a supported configuration. Different sources say otherwise, but HP ultimately did verify this as “supported”.

I’m wondering if the information just wasn’t put in to SPOCK.

Hello Stephen,

you write the following in one of your posts:

“For vMotion, each of my servers actually have a dual port 10G-BaseT NIC inside of them. I actually just connected a 3 foot Cat6 cable from server to server and dedicated it to vMotion. vMotion over 10Gb is awesome, it’s INCREDIBLY fast. When doing updates to my hosts, it moves 10-15VMs (each with 6-12GB of RAM allocated to each VM) to the other host in less than 40 seconds for all.

As for Storage vMotion, I have no complaints. It sure as heck beats my old configuration. I don’t have any numbers on storage vMotion, but it is fast!”

Are you really direct connecting the 2 10GB Hbas to each other with a simple Cat cable? Or are you using a switch (10GB) to connect?

Would be grateful for an answer.

Thank you

Franz

Hello Stephen,

you write the following in one of your posts:

“For vMotion, each of my servers actually have a dual port 10G-BaseT NIC inside of them. I actually just connected a 3 foot Cat6 cable from server to server and dedicated it to vMotion.”

Are you really direct connecting the 2 10GB Hbas to each other with a simple Cat cable? Or are you using a switch (10GB) to connect?

Would be grateful for an answer.

Thank you

Franz

Hi Franz,

As I mentioned, my servers had an extra 10G-BaseT NIC in both of them. Since I only have two physical hosts, I connected one port on each server to the other server using a short CAT6 cable.

So yes, I’m using a simple CAT cable to connect to the two NICs together. Once I did this, I configured the networking on the ESXi hosts, configured a separate subnet for that connection, and enabled vMotion over those NICs.

Keep in mind, I can do this because I only have 2 hosts. If I had more than 2 hosts, I would require a 10Gig switch.

Hope this helps!

Hi Stephen,

Congrats to your setup!

I’m a systems engineer at a distributor. One hint: you can purchase only the chassis and buy two controllers, then you’re not forced to buy transceivers at all; the price of the components is the same. Make sure you have the latest fw (>GL200) to enable tiering and virtual pools. Then you can make smaller RAID sets (3+1) and pool volumes across the RAID sets, which will give you a huge bump in speed. Paired with an SSD read cache (can be only one SSD), this storage is nuts!

Hi, we have an MSA 2040 and wish to add it to ESXi 5.1. What guide did you use to add this SAN to your lab?

We have just implemented a similar configuration with the HP MSA 2040 iSCSI connected to ESXi 5.1. In addition, we replicate the volumes to an HP MSA 1040 that was initially connected locally and then shipped off site to replicate over a 100Mb IPsec tunnel.

Moving the replica target controller was a bit tricky, but basically you have to detach the remote volume, remove the remote host and ship it offsite. After reinstallation and validating all IP connectivity, you then re-add the remote host and reattach the volume.

There are some interesting features on the MSA 2040, but they may come at additional cost.

This entry-level storage can actually compare with high-end storage, as the MSA also has a tiering feature. This means you can separate your high-I/O processes from your low-I/O processes using performance tiering, but it requires SSD drives. To activate this feature you need to purchase a license for tiering.

Hi! Have you upgraded your MSA 2040 to the latest firmware? it brings new virtualization features!

Hi Ricardo,

Yes! I was actually invited to the special program that HP had and had the firmware before it was released to the general public!

Pretty cool stuff!

Cheers,

Stephen

I also just purchased the MSA 2040 (SAS version) for our vSphere 5.5 environment. How exactly did you update the drive firmware? There is a critical firmware upgrade for the SSD drives I loaded, but I can’t get it to run.

I tried installing the HP Management agents for the vCenter Client plug-in, but that can’t even detect the existing firmware on the disks.

When I try to upload the CP026705.vmexe file via the web interface for the controller, it loads the file to the disks but then fails the update.

6 of 6 disks failed to load. (error code = -210)

CAPI error: An error was encountered updating disks.

*** Code Load Fail. Failed to successfully update disk firmware. ***

Hi Kevin,

Not familiar with the SAS version, does it have an ethernet port for management, or does everything have to be done over the SAS connection?

If it has a web based interface, I would use that to update the various firmware for the unit. Make sure when updating firmware on the disks that you aren’t performing any I/O (don’t even have the datastores mounted).

Hi Stephen,

I am looking at pretty much doing the same thing that you did, but am having a bit of a confusing time trying to figure out if the 4 1GB ISCSI SFP+ modules are RJ-45 or fiber? In your pictures they look like they are RJ-45, which is what I am actually looking for at this time, but am not for sure of the part number for the 4-pack of SFP+ 1GB RJ-45 modules. Are these in fact RJ-45 modules? And if so, could you tell me the part number that you ordered to get them? Thanks

Paul,

In my config they are indeed RJ-45 1GB modules! 🙂

The Part# I used for the 4-pack of modules is: C8S75A

Please note, that some descriptions I noticed were wrong for this item, but I can confirm that this part# at the time I ordered was for a 4-pack of 1Gb RJ-45 SFP+ modules.

Cheers!

Hi Stephen,

does your setup include any switches between NIC and SAN Controller? I’m currently trying to order a very similar setup, and everyone keeps telling me I need a stack of SFP+ switches between NIC and SAN.

Hi Roger,

Nope, I have no switches. the 2 servers are connected directly to the SAN using DAC SFP+ cables, and it’s working great!

Stephen

I read your blog on MSA 2040 and would need assistance on setting up a similar setup.

I would like pictures of the set up with the 10gb DAC cables. And also pictures of the SFP ports and the connection ports. Thank you.

Can you tell me what model of 10gb sfp+ adaptors you purchased for your servers?

Hi Jay,

The unit I ordered I selected the 1Gb RJ45+ Ethernet SFP+ modules, but I don’t use them.

To connect to the hosts, I used 10Gb DAC Cables which have SFP+ modules built in to the cables on both ends.

Cheers

Hi, I found your article really useful and we have just purchased the same setup. I have the 2x 4 channel controllers with SFP+ connectors and did the same with the 1m DAC cables, but the controller says it’s incompatible.

When I configure the controller it says the type is FC. I checked the firmware and I’m on GLS201R004, which I believe to be the latest.

Any ideas what I’m doing wrong?

Hi Alex,

Did you go to HP’s website and confirm it is the latest firmware?

You may have to go in to the web management interface and change those ports from FC, to iSCSI. I’m thinking this is probably the problem.

Were you originally using FC on the device?

Cheers,

Stephen

In reply to my previous comment. I used the host-port-mode iscsi command via telnet to change the compatibility of the ports from FC to iSCSI

Not obvious at all, and not something you can change in the gui. Thought it was worth sharing.

Alex

Hi Alex,

Is it working now after going in through telnet and changing it?

Also, I remember in V2 of the GUI there is an area to change the port modes. Not sure if there is in the new V3 of the GUI as I haven’t looked around that much.

Let us know if that command fixed the issue for you!

Cheers

Hello Sir,

We have an MSA 2040 with DC power supplies, but we have an AC source. How can we connect the storage to the power source? Please help…

Steven,

Thanks for showing the demo. What if you have to do 3 VMware hosts? On the RJ-45 1Gb modules, do they come as singles or in a pair? It seems like the MSA 2040 can have up to 4 per controller.

“To connect to the hosts, I used 10Gb DAC Cables which have SFP+ modules built in to the cables on both ends.”

So you bought an adapter card that has SFP, and therefore you ended up connecting your VMware hosts with 10Gb SFPs. Can you please post a picture of the back showing the SFP connections in the setup?

Is it true that your setup is like http://h20195.www2.hp.com/V2/GetPDF.aspx%2F4AA4-7060ENW.pdf page 5 figure 2 without the HP 16GB SAN switch (FC)?

would it be VMware host HP ENET 10GB 2-PORT 530SFP ADAPTER

MSA 2040 controller A or B C8R25A HP MSA 2040 10Gb RJ-45 iSCSI SFP+ 4-Pack Transceiver

Thanks much.

Hello Jon,

Please see the post: http://www.stephenwagner.com/?p=925 for pictures of the connection.

The RJ-45 1Gb SFP+ modules come in a 4-pack when ordered. My unit has 4 of them, but I don’t use them. When I ordered, it was mandatory to order a 4-pack, so that’s the only reason why I have them. I don’t use them, they are only installed in the unit so I don’t lose them, lol.

I used the 10Gb SFP+ DAC (Direct Attach Cables) to connect the servers to the SAN. The Servers have SFP+ NICs installed. Please see the pictures from the post above.

The document you posted above is to HP’s best practice guide. I used that guide as a reference, but configured my own configuration. I did NOT use fiberchannel, I used iSCSI instead and utilized the DAC cables. You cannot use the DAC cables with FC.

Please note that if you use the RJ45 1Gb modules, they will not connect to a NIC in the server that has a SFP+ connection, the RJ45 modules use standard RJ45 cables. Also, that card you mentioned uses SFP, and not SFP+. You’ll need a SFP+ NIC.

In my configuration I used iSCSI with:

HP Ethernet 10Gb 2-port 560SFP+ Adapter (665249-B21)

HP BladeSystem c-Class Small Form-Factor Pluggable 3m 10GbE Copper Cable (487655-B21)

You keep referencing SFP. Please note that the SAN or my NICs don’t use SFP, instead they use SFP+.

Hope this helps,

Stephen

Hi Stephen,

Thanks for your quick reply. I have to say your build is the most cost efficient.

And allow me to recap to make sure I understand your setup.

Host(Server side)

2 X HP Ethernet 10Gb 2-port 560SFP+ Adapter (665249-B21) to A and B controller. This adapter has 2 ports with connectors that will fit the 487655-B21 cable, or the shorter one, 487652-B21.

Connector: HP Ethernet 10Gb 2-port 560SFP+ Adapter (665249-B21)

Missing or my question is : The SAN doesn’t come with any SFP+, unless you order them as options.

Trying to answering my own questions: Stephen yours is a For MSA 2040

10Gb iSCSI configuration user can use DAC cables instead of SFPs. This is confirmed by reading your post over and over, you said “Not familiar with the SAS version”

Here is where I found SAS and iSCSI difference: http://www8.hp.com/h20195/v2/GetDocument.aspx?docname=c04123144

”

Step 2b – SFPs

NOTE: MSA 2040 SAN Controller does not ship with any SFPs. MSA SAS controllers do not require SFP

modules. Customer must select one of the following SFP options. Each MSA 2040 SAN controller can be

configured with 2 or 4 SFPs. MSA SFPs are for use only with MSA 2040 SAN Controllers. For MSA 2040

10Gb iSCSI configuration user can use DAC cables instead of SFPs.

”

Indeed, the iSCSI version will be the cost effective way to go. Great job Stephen.

Please help me convince myself to do the same setup as I was trying to do the following setup. Anyone welcome to jump in.

3 HP G8/G9 servers. Just 4 drive enclosure, this is only going to host 1 ESXi 5.5.

3 HP HP Ethernet 10Gb 2-port 530T Adapter 657128-001 (2 port RJ-45, so I can use these server with RJ-45 switch… leaving myself with option for later.)

Here comes the problem,

MSA 2040 SAS or iSCSI version: for an RJ-45 connector lover like me, there are no other options to get the connection faster than the HPE MSA 2040 1Gb RJ-45 iSCSI. I Googled a lot but can’t find something like an HPE MSA 2040 10Gb RJ-45 iSCSI.

Has anyone come across an HPE MSA 2040 10Gb RJ-45 iSCSI?

Kevin,

How did you physically connect yours (the SAS version)?

Thanks all.

Jon

Hi Jon,

Each server has 1 NIC for SAN connectivity, but each NIC has 2 interfaces… The 560SFP+ has two DAC cables running to the SAN (1 cable to the top controller, and 1 cable to the bottom for redundancy). You need to make sure you use a “supported” cable from HPe for it to work (supported cables are inside of the spec sheets, or you can reference this post, or other posts which have the numbers).

The cables I’m using are terminated with SFP+ modules on both ends (they are part of the cable). The SFP+ module ends slide in to the SFP+ ports in the NIC and in the SAN controller.

And that is correct. On CTO orders, it’s mandatory to order a 4-pack of SFP+ modules with some PART#s. That is why I have them. Keep in mind I don’t use the modules, since I’m using the SFP+ DAC cables.

I am familiar with the SAS version, but that is for SAS connectivity which isn’t mentioned inside of any of my posts. My configuration is using iSCSI. If you want to use iSCSI or Fiberchannel, you need to order the SAN. If you want to use SAS connectivity, you need to order the SAS MSA.

When you place an order for the SAN, you need to choose whether you want the MSA 2040 SAN, or the MSA 2040 SAS Enclosure. These are two separate units, that have different types of controllers. The SAS controllers only have SAS interfaces to connect to the host via SAS, whereas the SAN model, has converged network ports that can be used with fiberchannel or iSCSI.

In your proposed configuration, you cannot use the MSA 2040 SAS with any type of iSCSI connectivity. You require the MSA 2040 SAN to use iSCSI technologies.

You’re not very clear in your last post, but just so you know, the SAN does not support 10Gb RJ45 connectors (HP doesn’t have any 10Gb RJ45 modules). You must either use 10Gb iSCSI over fiber, 10Gb iSCSI over DAC SFP+, 1Gb RJ45, or Fiberchannel.

The reason why I used the SFP+ DAC cables was to achieve 10Gb speed connectivity using iSCSI. That is why I have to have the SFP+ NIC in the servers.

If you only want to use RJ45 cables, then you’ll be stuck using the 1Gb RJ45 SFP+ transceiver modules and stuck at 1Gb speed. If you want more speed you’ll need to use the DAC cables, fiber, or fiberchannel.

I hope this clears things up. 🙂

Cheers
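The iSCSI options listed above boil down to a small lookup table. Here is a rough Python sketch of it. The table only restates the three iSCSI choices from this reply (Fibre Channel is left out because no speed was quoted for it), and the field and function names are made up for illustration.

```python
# iSCSI connectivity choices as described in the reply above.
ISCSI_OPTIONS = [
    {"name": "10Gb iSCSI over fiber", "host_connector": "SFP+", "speed_gbps": 10},
    {"name": "10Gb iSCSI over DAC SFP+", "host_connector": "SFP+", "speed_gbps": 10},
    {"name": "1Gb iSCSI over RJ45", "host_connector": "RJ45", "speed_gbps": 1},
]


def fastest_speed_for(host_connector: str) -> int:
    """Return the best iSCSI speed (Gb) reachable with a given NIC connector."""
    speeds = [
        option["speed_gbps"]
        for option in ISCSI_OPTIONS
        if option["host_connector"] == host_connector
    ]
    if not speeds:
        raise ValueError(f"no iSCSI option for connector {host_connector!r}")
    return max(speeds)


print(fastest_speed_for("RJ45"))
print(fastest_speed_for("SFP+"))
```

This only covers a host wired straight to the SAN; it does not model a 10Gb switch sitting in between.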

Hi Stephen,

Thanks much again.

Yeah, I was originally trying to do this:

1 CISCO 16PORT 10G MANAGED SWITCH (8 10GB port RJ-45, 8 SFP+)

3 HP DL380 GEN9 E5-2640V3 SRV with 3 HP HP Ethernet 10Gb 2-port 530T Adapter 657128-001 (RJ-45)

HP MSA 2040 ES SAN DC LFF STOR K2R79WB.

BUT a search for 10Gb RJ45 SFP+ led me to your site and I am just going to ditch the Cisco switch.

Thanks for your cost efficient build.

I think I am convinced to use

3 HP DL380 GEN9 E5-2640V3 SRV with HP Ethernet 10Gb 2-port 560SFP+ Adapter (665249-B21)

6 HP BladeSystem c-Class Small Form-Factor Pluggable 3m 10GbE Copper Cable (487655-B21)

1 HP MSA 2040 ES SAN DC LFF STOR K2R79WB with 10Gb iSCSI configuration.

Question, what MSA 2040 do you prefer? My requirement is only to run 10 Max virtual servers and 3 TB of usable storage for file serving.

HP MSA 2040 SAN DC w/ 4x200GB SFF SSD 6x900GB 10K SFF HDD 1 Performance Auto Tier LTU 6.2TB Bundle

(M0T60A)

Or

HP MSA 2040 ES SAN DC LFF STOR K2R79WB

Thanks much.

Jon

Morning Jon,

Technically you could use 10Gb SFP+ DAC cables to connect the SAN to the Switch, in which case you would then be able to use 10GBaseT (RJ45) to connect the servers to the switch. This is something I looked at and almost did, but chose not to just to keep costs down for the time being.

In my setup, when I do expand and add more physical hosts, I might either purchase a 10Gb switch that handles SFP+ or a combo of SFP+ and 10Gb RJ45 connections… This way it will give me some flexibility as far as connecting the hosts to the SAN… In this scenario, some hosts would be using SFP+ to connect to the switch, whereas other hosts would be able to connect via 10GBaseT RJ45…

If you do choose to use a 10Gb switch with DAC cables, just try to do some testing first. Technically this should work, but I haven’t talked to anyone who has done this, as most people use Fiber SFP+ modules to achieve this…

One day I might purchase a 10Gb switch, so I’ll give it a try and see if it can connect. The only considerations would be if the switch was compatible with the DAC cables, and if the SAN is compatible speaking to a switch via 10Gb DAC cables.

Getting back on topic… Since you only have 3 physical hosts, you definitely could just use 10Gb DAC SFP+ cables to connect directly to the SAN. Since each controller has 4 ports, this can give you direct connection access for up to 4 hosts using DAC cables (each host having two connections to the SAN for redundancy).
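As a quick sanity check of that port math, here is a small Python sketch that builds a direct-attach cabling list with one cable from each host to each controller. The host names and the "host N uses port N on both controllers" numbering are assumptions for illustration; any free port would do.

```python
def direct_attach_cabling(hosts, ports_per_controller=4, controllers=("A", "B")):
    """Return (host, controller port) pairs, one cable per host per controller."""
    if len(hosts) > ports_per_controller:
        raise ValueError(
            f"only {ports_per_controller} hosts can be direct attached, "
            f"got {len(hosts)}"
        )
    cabling = []
    for port, host in enumerate(hosts, start=1):
        for controller in controllers:
            cabling.append((host, f"Controller {controller} port {port}"))
    return cabling


# Hypothetical host names for a 3-host build.
cables = direct_attach_cabling(["host1", "host2", "host3"])
for host, san_port in cables:
    print(f"{host} -> {san_port}")
```

For 3 hosts this comes out to 6 DAC cables, and a 5th host would need a switch.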

As for your final question… The solution you decide to choose should reflect your IO requirements. The first item you posted, utilizes SSD high performance drives (typically optimized for heavy IO load DB operations). This may be overkill if it’s just a couple standard VMs delivering services such as file sharing and Exchange for a small group of users.

The second item you posted is the LFF format (Large Form Factor disks). The disks are 3.5inch versus the 2.5inch SFF format. Not only does this unit house fewer disks, but it may also have lower IO and performance.

I’m just curious, have you reached out to an HP Partner in your area? By working directly with an HP partner, they can help you design a configuration. Also they can build you a CTO (Configure to Order) which will contain exactly what you need, and nothing you don’t need.

The first PART# you mentioned may be overkill, while the second item you listed may not be enough.

This may also provide you with some extra pricing discounts depending on the situation, as HP Partners have access to quite a few incentives to help companies adopt HPe solutions.

Hope this helps and answers your question.

Let me know if I can help with anything else.

Cheers.

Good afternoon Stephen,

Thanks for your valuable suggestions.

And yes, I just reached out to HP yesterday and established a connection with an account manager, who has referred an HP solution architect/engineer to help me tailor my setup.

Here I am providing my existing Infrastructure, maybe as a case study 🙂

router->3560G (and servers has no RAID at all)->2960 (with 1g sfp)-clients (user with100MB link)

upgrade

method 1:

1 CISCO 16PORT 10G MANAGED SWITCH (8 10GB port RJ-45, 8 SFP+)

3 HP DL380 GEN9 E5-2640V3 SRV with 3 HP HP Ethernet 10Gb 2-port 530T Adapter 657128-001 (RJ-45)

HP MSA 2040 ES SAN DC LFF STOR K2R79WB.

problem: 1. Cisco switch seems to last forever but by design the switch is the single point of failure.

2. the 10GB connection is only within hosts and the bottleneck is at the 3560G switch then to

2960. Unless I get 2 1CISCO 16PORT 10G SWITCH -> 1 3560X -> 3560G->clients.

method 2: your method.

3 HP DL380 GEN9 E5-2640V3 SRV with HP Ethernet 10Gb 2-port 560SFP+ Adapter (665249-B21)

6 HP BladeSystem c-Class Small Form-Factor Pluggable 3m 10GbE Copper Cable (487655-B21)

1 HP MSA 2040 ES SAN DC LFF STOR K2R79WB with 10Gb iSCSI configuration.

Then do network upgrade later

Thanks again.

Jon

Hi Jon,

I would wait until you talk to an HPe Solution architect before choosing Part#s. They may have information or PART#s that aren’t easily discovered using online methods. Also, I’m guessing they will probably recommend a CTO (Configure to Order) type of product order.

Technically both methods you suggested would work, however you should test first to make sure the Cisco switches accept the SFP+ cables. Originally I wanted to use switches, however I decided not to, to keep costs at a minimum. If you go this route, you could actually connect all 8 X SFP+ on the SAN to the switch for redundancy and increased performance. You could also add a second switch and balance half the connects from each controller to switch 1 and switch 2 for redundancy.
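For the two-switch idea, the balancing can be sketched like this in Python. The switch names and the odd/even port split are assumptions for illustration; the only point is that each controller ends up with half of its ports on each switch.

```python
def balance_across_switches(
    controllers=("A", "B"),
    ports_per_controller=4,
    switches=("switch1", "switch2"),
):
    """Alternate each controller's host ports between the available switches."""
    layout = {}
    for controller in controllers:
        for port in range(1, ports_per_controller + 1):
            # Ports 1 and 3 land on the first switch, ports 2 and 4 on the second.
            layout[f"{controller}{port}"] = switches[(port - 1) % len(switches)]
    return layout


layout = balance_across_switches()
for san_port, switch in layout.items():
    print(f"{san_port} -> {switch}")
```

With this layout, losing either switch still leaves two paths to Controller A and two paths to Controller B.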

Also, I’m curious as to why you chose the LFF version of the SAN? I would recommend using a SFF (2.5 inch disk version) of the SAN for actual production workloads… I normally only use LFF (3.5 inch disk) configurations for archival or backup purposes. This is my own opinion, but I actually find the reliability and lifespan of the SFF disks to be greater than LFF.

As I mentioned before, the SFF version of the SAN holds 24 disks instead of the 12 disks the LFF version holds. Also you will see increased performance as well since disk count is higher, and the small disks generally perform better.

Cheers,

Stephen

Stephen

do you use linear or virtual volumes?

We currently have linear volumes, and wanted to enable snapshots, but I’m worried about the amount of I/O the copy-on-write function might have on it.

And I’m slightly perplexed why I seem to see reviews showing that virtual volumes don’t support schedule snapshots!! Only manual. Uh?

Any contribution you have to this welcome………

Hi Stephen,

am back in with 3 HP DL380 GEN9 E5-2640V3 SRV with 3 HP HP Ethernet 10Gb 2-port 530T Adapter 657128-001 (RJ-45)

HP MSA 2040 ES SAN in hand. Choose to use DAC cables.

As for the following quote from you, how many IPs did you use? 15? I agree I should use 2 subnets: 1 subnet for hosts and guests (for users to access, and for hosting VM servers), then a subnet for storage (only the VMware hosts and storage). My first draft does not follow good practice. Please let me know what you think. Also, do you have virtual servers in different VLANs, and a DHCP server serving different VLANs? Port channel? I am interested to see how you connect your ESXi hosts to your switch.

I used a total of 36 IPs (I could have used a few less).

HPMSA

Controller A port 1 10.3.54.10

Controller A port 2 10.3.54.11

Controller A port 3 10.3.54.12

Controller A port 4 10.3.54.13

Controller B port 1 10.3.54.14

Controller B port 2 10.3.54.15

Controller B port 3 10.3.54.16

Controller B port 4 10.3.54.17

Management port 10.3.54.18

VServer 1

10 GB port 1 10.3.54.19

10 GB port 2 10.3.54.20

1 GB port 1 10.3.54.21

1 GB port 2 10.3.54.22

1 GB port 3 10.3.54.23

Management port 10.3.54.24

VServer 2

10 GB port 1 10.3.54.25

10 GB port 2 10.3.54.26

1 GB port 1 10.3.54.27

1 GB port 2 10.3.54.28

1 GB port 3 10.3.54.29

Management port 10.3.54.30

VServer 3

10 GB port 1 10.3.54.31

10 GB port 2 10.3.54.32

1 GB port 1 10.3.54.33

1 GB port 2 10.3.54.34

1 GB port 3 10.3.54.35

Management port 10.3.54.36

”

I used the wizards available to first configure the actual storage, and then provisioning and mapping to the hosts. When deploying a SAN, you should always write down and create a map of your Storage Area Network topology. It helps when it comes time to configure, and really helps with reducing mistakes in the configuration. I quickly jotted down the IP configuration for the various ports on each controller, the IPs I was going to assign to the NICs on the servers, and drew out a quick diagram as to how things will connect.

“

OH,

Stephen, since you use DAC cables from host to storage, would it be that you don’t need a VLAN that is visible? I mean the segment only gets seen by the host, the storage, and the VMware software, so this VLAN doesn’t need to be known by other switches on the network, as it’s just by itself.

Hi Darren,

I actually use linear volumes… I’m sorry but I can’t comment on the snapshot feature as I don’t use it. However, I do believe that it shouldn’t cause any performance issues or degradation.

I can’t really comment on the scheduled snapshot question. Sorry about that! 🙂

Stephen

Hi Jon,

I’m a little bit puzzled on your configuration. You mentioned you’re using DAC cables (which are SFP+ 10Gb cables), however you mentioned your server has RJ45 NICs. You can’t use SFP+ DAC cables with that NIC.

Your storage network, and VM communication networks should be separated.

In my configuration, each host has 1 10Gb DAC SFP+ cable going to each controller on the MSA2040 SAN. So each host has two connections to the SAN (via Controller A and Controller B). This provides redundancy.

Each connection to the SAN (a total of 4 since I have two physical hosts) is residing on its own subnet. This is because the DAC cables are going direct to the SAN, and are going through no switch fabric (no switches).

Your VM communication for normal networking should be separated. They shouldn’t be using the same subnets, and they shouldn’t be sitting on the same network if you do have your SAN going through switching fabric.

One last comment. If you do have one physical network for all the storage (example: both controllers of the SAN sitting on the same network, and the storage NICs of the physical hosts sitting on that same network), then you would use a single subnet, but that’s only if they are all on the same network and each IP is reachable by all hosts/controllers.

If you aren’t using switching fabric and using DAC cables to directly connect the SAN to the hosts, you’ll have issues when the ESXi hosts try to detect possible paths to the SAN, if all connections are using the same subnet (the ESXi hosts will think there are multiple paths to the MSA, but it won’t be the case). This is why I used different subnets for each connection.
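Here is a minimal Python sketch of that "one subnet per direct connection" layout. The `10.0.0.0/16` base range, the /24 size, the host names, and the choice of `.1` for the SAN port and `.2` for the host NIC are all placeholders for illustration; they are not the addresses used in this setup.

```python
import ipaddress


def direct_attach_plan(hosts, controllers=("A", "B"), base="10.0.0.0/16", prefix=24):
    """Give every host-to-controller cable its own subnet."""
    subnets = ipaddress.ip_network(base).subnets(new_prefix=prefix)
    plan = []
    for port, host in enumerate(hosts, start=1):
        for controller in controllers:
            net = next(subnets)  # a fresh subnet for this single cable
            plan.append({
                "host": host,
                "san_port": f"{controller}{port}",
                "subnet": str(net),
                "san_ip": str(net[1]),
                "host_ip": str(net[2]),
            })
    return plan


# Two hypothetical hosts, each cabled to Controller A and Controller B.
plan = direct_attach_plan(["esxi1", "esxi2"])
for link in plan:
    print(link)
```

Two hosts give four links on four different subnets, so each host only ever sees the SAN addresses it is physically cabled to.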

Furthermore, as I mentioned your VM communication should be separated from your storage network. In my case, my servers have multiple NICs, and I have all my VM traffic utilizing other NICs in the physical hosts. I have 1 NIC (dual port) dedicated to the SAN connection, while 2 other NICs (dual port 10Gb, and Quad port 1Gb) dedicated to different networks for VM communication.

I hope this helps.

Cheers,

Stephen

Hi Stephen,

I use DAC cables. What confuses me is iSCSI with IPs on the MSA; I thought iSCSI doesn’t use IP?

Yes, I would like to have a separate VLAN for my storage, physical hosts each use 10Gb DAC SFP+ cables to connect directly to the SAN. So 6 of 10Gb DAC SFP+ and all 6 port Controller A and B will be on VLAN X. Then the rest of the 1 GB NICs on the 3 hosts are on VLAN Y and Z. But still working this out with my network admin.

Now since VLAN X doesn’t really talk to Y and Z (am I right??), does VLAN X have to be created by the network admin? Or do I just whip up VLAN X, document it, and call it a day?

Question for anyone who has run DAC cables from host to MSA and uses a switch (3560G) for client access (for those 4 GB ports on the HP host): are any of you in favor of a port channel with trunk to give the host 2 (4) GB instead of 1 GB, or 2 of 2GB port channels with trunk? Or are you in favor of trunking the needed VLANs on all 4 ports and handling the 4 ports with the VMware switch?

Thanks much.

Hi Jon,

I wouldn’t use VLANs unless you have a reason to. As I mentioned, in my configuration I have completely separate networks (attached to separate ports on the NICs).

The iSCSI technology uses IP. You may be confusing this with Fiberchannel, which doesn’t use IP unless you’re using FCIP (I could be wrong, since I have limited knowledge of it).

As I mentioned before, check your configuration, as the 10Gb adapters you mentioned use RJ45, they do not use SFP+ connections.

Cheers,

Stephen

Hi Stephen,

Thanks for your quick reply. “As I mentioned before, check your configuration, as the 10Gb adapters you mentioned use RJ45, they do not use SFP+ connections.” I have changed it. I pretty much have the exact same as you have, but with 3 hosts. I told HP that I want a DAC connection.

Now I just have to find the wizard on the MSA to assign the IPs.

Thanks.

John Li

Hi John,

On the initial configuration wizard, it should have prompted.

If not, log in to the web GUI. Click on “System” on the left. Click the blue “Action” button, and then “Set Host Ports”. Inside of there, you can configure the iSCSI IP address settings. You can also enable Jumbo frames by going to the Advanced tab.

There is a chance that the unit’s converged network ports may be configured in FC mode. If this is the case, you’ll need to use the console to set and change the converged ports from FC to iSCSI.

Cheers,

Stephen

HI Stephen,

there isn’t ip setting in Host Port Settings.

it said:

FC Param, A1

Speed: auto

Connection Mode: point-to-point

Will e-mail you the pic. It seems like I am stuck at FC mode.

Thanks.

John Li

Hi Stephen,

Yeah, I just re-read your post. I need to use the console to change to iSCSI mode.

Thanks.

Jon

Hi Stephen,

I have a MSA2040 SAS Dual 4xPort Controllers. We have two VMware Hosts already connected to the SAN and fully operational. I want to add a third but confused on what if any configuration I need to do on the SAN to present the LUNs to the third host. Is it as simple as connect the cable and re scan?

thanks in advance

Hi John,

It should be that easy! 🙂

While I’m not familiar with the SAS controllers (I only have experience with the SAN controller version of the MSA2040), it should essentially just be a matter of plugging in the 3rd host and doing the re-scan.

Let us know how you make out!

Cheers,

Stephen

THX for all the info sharing here !

I’m in the process of building a Hyper-V cluster with the MSA2040.

I was stuck at FC, but the setup should be iscsi.

Thx for your tip on converting the SAN to iscsi

Glad I could help!

Good luck, and excellent choice on the MSA 2040!

Cheers,

Stephen

Great article! I’m building a similar setup with an MSA 2040, two DL380’s and two ZyXEL XS1920 switches in a stacked configuration. I’ll be connecting the MSA 2040 to the ZyXEL switches using DAC cables. This gives me near 10Gbps line speed to the storage. The DL380’s will have 10GBase-T NICs (FlexFabric 10Gb 2P 533FLR-T Adptr). The ethernet NICs will be lower bandwidth than DAC (I believe I should get approx 7Gbps line speed), but using the ZyXEL switches I can now connect more servers via 10GBase-T ethernet and still maintain dual paths to any new clusters I create.

Hello Everyone

I want to use two HPE MSA 2040 SAN storage units because I want to replicate their data, so that as soon as one goes down I can easily connect the other and keep it available to the application server. So I’m thinking of installing Windows Server 2012 R2 on each of them to use DFS Replication.

Is that method of replication the best and lowest-cost one, or are there more options than using Windows Server 2012 R2? Because, you know, I would have to purchase a license.

Hello,

Is it possible to use the MSA at 10GbE without a 10Gb switch?

I have tested a lot of DAC cables, but the SFP is always “not supported” in the MSA.

Thanks a lot for your response.

Nicit

Hi Nicit,

Yes it is possible to use it without a switch with 10Gb. In my blog posts I explain that I’m using 10Gb DAC to connect to my servers (no switch on the storage side of things).

What DAC cables have you used? Are they HP branded?

You need to have the latest firmware. You also need to have the host ports configured in iSCSI mode (the unit ships with Fiberchannel as default).

Cheers,

Stephen

Hi Stephen,

I have two types of cables.

I have HP J9283B direct attach cables and 487655-B21.

I have upgraded the SAN to the latest firmware, but I haven’t forced the ports to iSCSI …

Hi Nicit,

J9283B and 487655-B21 should both work. I’m using the first PART# at a clients site, and I’m using the second PART# with my own equipment. I can verify both work.

Please log on to the console and use the command to set the ports as iSCSI instead of FC. Numerous other users have had to do this. Please report back on your success!

Cheers

Hi Stephen,

It works!

You’re my god today 🙂

HP is very bad … Why by default the san is only FC and not FC and iSCSI :((((

The support of HP is very poor in Europe!

Thanks a lot ! I send you a virtual beer !

Nicit

Hi Nicit,

Glad to hear I could help, and I’m happy it’s working for you!

Cheers

Your narrative was very illuminating! I found your web page while trying to solve this problem: My MSA 2040 has its host ports set for FC. How do you get them to change to iSCSI?

Hi Joe,

Simply log in to the console via SSH or telnet (or the console port), and use one of the following commands:

set host-port-mode iSCSI (configures all host ports to iSCSI)

set host-port-mode FC-and-iSCSI (configures first 2 ports to FC, and second two ports to iSCSI)

If you’re using all iSCSI, use the first command.

When issuing these commands, make sure that no I/O is being performed on the unit as it will stop all IO and also restart the controllers.
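If you script this, a small guard around the command can help avoid running it by accident. The Python sketch below only builds the command text for the two modes listed above; the function name and the `io_stopped` flag are made up for illustration, and it does not connect to the SAN or run anything.

```python
# Only the two modes mentioned in this reply are covered here.
KNOWN_MODES = {
    "iscsi": "iSCSI",                # all host ports set to iSCSI
    "fc-and-iscsi": "FC-and-iSCSI",  # first two ports FC, second two iSCSI
}


def host_port_mode_command(mode: str, io_stopped: bool) -> str:
    """Build the CLI line, refusing unless I/O has been confirmed stopped."""
    if not io_stopped:
        raise RuntimeError("stop all I/O first; the controllers will restart")
    key = mode.lower()
    if key not in KNOWN_MODES:
        raise ValueError(f"unknown host port mode: {mode!r}")
    return f"set host-port-mode {KNOWN_MODES[key]}"


command = host_port_mode_command("iSCSI", io_stopped=True)
print(command)
```

The printed line is what you would then type in the SSH, telnet, or console session yourself.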

Cheers

Hey Stephen,

Nice write up curious about your above “set” commands. In the CLI, I’m not able to run those without an error (says “% Unrecognized command found at ‘^’ position.”).

I tried at the root and in system-view, neither worked.

Thanks,

Chris

Hi Chris,

Just curious, are you running the latest firmware? Earlier versions did not support iSCSI.

Stephen

Hi Stephen,

I have to install an MSA2040.

I’ve never used HP storage before. I used to work with IBM.

My question is about the DSM driver.

If we use Microsoft Windows 2012 host, will we need to install a multipath driver?

For IBM storage for example, we are provided with SDDSM software from IBM to manage multipath.

I’ve searched on Google and did not find anywhere I could download it.

Thanks for your reply.

Hi Ndeye,

I can’t exactly answer your question… However, any special drivers you may require should be available on the HPe support website…

Please note, when searching for your model on the HPe support website, make sure you choose the right one. There are multiple MSA 2040 units (SAN, and SAS units).

Here is a link to the US site:

http://h20565.www2.hpe.com/portal/site/hpsc

When I checked my model on that site, I noticed there were drivers available for specific uses, however I’m not sure if I saw a DSM driver. There’s a chance that it may not be needed.

As always, you can also check the best practice documents which should explain everything:

http://h20195.www2.hp.com/v2/getpdf.aspx/4AA4-6892ENW.pdf?ver=Rev%206

http://h20195.www2.hp.com/v2/GetPDF.aspx%2F4AA4-7060ENW.pdf (this document is vSphere specific).

I hope that helps!

Cheers

Thank you very much for your reply and the links provided 🙂

HP uses Microsoft MPIO drivers for multipathing, not IBM SDDSM drivers. Windows 2008 & 2012 come with MPIO drivers built in. For Vmware hosts you need to download the Linux MPIO drivers from the HP site.

Please excuse the potential dumb question…

Could I take an MSA 2040 chassis, add 2 controllers, but only buy 1 pack of 4x transceivers and plug 2 transceivers into each controller?

I only intend to connect 2 servers to the array, so buying 2 packs of transceivers would be a waste of money when I only intend to use 4 of them!

Hi Antonio,

No such thing as a dumb question! When it comes to spending money on this stuff, it’s always good to ask before spending!

To answer your question: Yes, if you buy only 1 x 4 pack of transceivers, you can load 2 in to Controller A, and 2 in to Controller B.

When I purchased my unit, I ordered only 1 x 4-pack (as it was mandatory to buy at least 1 4-pack in CTO configurations). When I received it from HPe, they had 2 loaded in to each controller.

Let me know if you have any other questions!

Hi Stephen

Nice to write to you and to read your posts!

Please, I need your help.

In the SMU reference guide, for firmware release G220:

Using the Configuration Wizard: Port configuration:……….

It says:

To configure iSCSI ports

1. Set the port-specific options:

IP Address. For IPv4 or IPv6, the port IP address. For corresponding ports in each controller, assign one port to one subnet and the other port to a second subnet. Ensure that each iSCSI host port in the storage system is assigned a different IP address. For example, in a system using IPv4:

– Controller A port 3: 10.10.10.100

– Controller A port 4: 10.11.10.120

– Controller B port 3: 10.10.10.110

– Controller B port 4: 10.11.10.130

Well, I see that the ports on each controller are on different network segments. Suppose we have an iSCSI SAN with two servers and two dedicated LAN switches, and each server has two NICs. Must each NIC be on a different segment, matching the port on each controller? How does Windows Server 2012 R2 function with that configuration?

Thanks and regards.

Martin

Hi Martin,

That is correct. When setting this up, you have options as to how the network that the SAN sits on is configured, as well as how the hosts connect to it.

It’s good practice to use redundant/separate physical networks on different segments using different switches; this provides redundancy in the event that a switch, controller, or host adapter fails or goes offline.

This isn’t strictly required, though. It’s up to you how you’d like to configure the network (using multiple switches, different subnets, network segments, etc…).

For the sake of being open, I haven’t used a Windows Server to connect to the SAN, however it should be pretty straightforward.

If you do choose to use multiple subnets (different network segments) as well as 2 switches, you’ll need to make sure that each server’s host adapters are set up accordingly, with an IP on the network segment that each adapter is connected to.

On the Windows Servers, MAKE SURE you do not configure a gateway in the adapter’s configuration as this could cause problems.

Using the configuration example you posted above:

– Controller A port 3: 10.10.10.100

– Controller A port 4: 10.11.10.120

– Controller B port 3: 10.10.10.110

– Controller B port 4: 10.11.10.130

Example of host configuration if you choose to use multiple subnets:

-Server 1

–Host Adapter 1: 10.10.10.1 (connected to switch connected to A3 and B3)

–Host Adapter 2: 10.11.10.1 (connected to switch connected to A4 and B4)

-Server 2

–Host Adapter 1: 10.10.10.2 (connected to switch connected to A3 and B3)

–Host Adapter 2: 10.11.10.2 (connected to switch connected to A4 and B4)

Once it’s set up this way, and after you configure MPIO access from the servers to the SAN, it should work. Each host will have separate physical, redundant links to the SAN.
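If you want to sanity check an addressing plan like this before cabling anything, a small script can help. The following is only a rough sketch using Python’s standard ipaddress module: it takes the example addresses from this thread and confirms that every host adapter shares a subnet with one port on Controller A and one port on Controller B. The /24 prefix is my assumption (no subnet mask was given above), and the function and variable names are made up for illustration.

```python
import ipaddress

# Assumption: a /24 prefix. The thread does not state the subnet mask.
PREFIX = 24

# Controller host port addresses from the example above.
controller_ports = {
    "A3": "10.10.10.100",
    "A4": "10.11.10.120",
    "B3": "10.10.10.110",
    "B4": "10.11.10.130",
}

# Host adapter addresses from the example above.
host_adapters = {
    "Server 1 / Host Adapter 1": "10.10.10.1",
    "Server 1 / Host Adapter 2": "10.11.10.1",
    "Server 2 / Host Adapter 1": "10.10.10.2",
    "Server 2 / Host Adapter 2": "10.11.10.2",
}


def subnet_of(address, prefix=PREFIX):
    """Return the network that an address belongs to for the given prefix."""
    return ipaddress.ip_interface(f"{address}/{prefix}").network


def reachable_ports(adapter_ip):
    """List the controller ports that sit on the same subnet as an adapter."""
    net = subnet_of(adapter_ip)
    return sorted(
        name for name, ip in controller_ports.items() if subnet_of(ip) == net
    )


def check_plan():
    """Return a list of problems found in the plan (empty if none)."""
    problems = []
    all_ips = list(controller_ports.values()) + list(host_adapters.values())
    if len(set(all_ips)) != len(all_ips):
        problems.append("duplicate IP address in plan")
    for name, ip in host_adapters.items():
        ports = reachable_ports(ip)
        # The first character of the port name is the controller letter.
        if {port[0] for port in ports} != {"A", "B"}:
            problems.append(f"{name} cannot reach both controllers: {ports}")
    return problems


if __name__ == "__main__":
    for name, ip in host_adapters.items():
        print(f"{name} ({ip}) -> {reachable_ports(ip)}")
    print(check_plan() or "plan looks consistent")
```

This only checks the addressing on paper. It says nothing about the cabling, the switch configuration, or whether MPIO is actually configured on the hosts.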

Hope this helps! Let me know if you have any other questions…

Cheers

Hi,

I configured my MSA 2040 and my c3000 HPE blade server (460c Gen8) like this:

Blade Bay3: 172.16.10.111 and 121

Blade Bay3 SCSI: 172.16.110.111

The iSCSI link between the SAN and the blade server on network 110 (through the interconnect) is up and running.

On my blade, I installed Windows Server 2016 Datacenter so I can install Hyper-V later.

Does somebody know how I create the iSCSI connection between Windows 2016 and the storage on the SAN?

Regards

Hi Rene,

I’m not that familiar with Hyper-V and blades, however: First and foremost, I would recommend creating separate arrays/volumes for the blade host operating systems, and your virtual machine storage datastore. The idea of having the host blade OSes and guest VMs stored in the same datastore does not sit well with me. The space provided for the Hyper-V hypervisor should be minimized to only what is required.

Second, you’ll need to configure your blade networking so that the Hyper-V host OS has access to the same physical network and subnet that the MSA SAN resides on. Once this is done and the host OS is configured, you should be able to provide the Hyper-V host OS with access to the SAN.

Please note, as with all cluster (multiple host access) enabled filesystems, make sure you follow the guidelines that Microsoft has laid out for multiple initiator access to the filesystem, to avoid corruption due to mis-configuration.

I hope this helps! Hopefully someone with specific knowledge can chime in and add on what I said, or make any corrections to my statements if needed.

Stephen

Nice writeup.. Just an FYI, for smaller setups you’re even better off getting the SAS based controllers. Performance is just as fast at 12Gbit and it is MUCH easier and especially cheaper to set up. The only limitation is that you can add a max total of 8 hosts (or actually 4, as you want to connect them redundantly). This is usually enough as most SMB setups use vSphere Essentials/Plus that has a 3-host limit.

The hosts only need cheap dual SAS HBAs instead of the very expensive 10Gbit NICs.

Greetings Stephen,

I have MSA 2040 controllers (A and B), and both controllers have 4 iSCSI ports each. I want to apply NIC bonding on these controllers, so that Controller A will have a single IP for all 4 iSCSI ports, and the same goes for Controller B.

Please advise.

Hi Waqar,

I’m sorry but the MSA2040 does not support NIC bonding. Even if it did, I would generally recommend against using NIC bonding for iSCSI SANs.

You should be using MPIO, which will provide increased performance.

Hi Stephen,

We have just purchased an MSA 2042 and came across your blog entries for the model.

Having seen a number of discussions relating to disk group configurations, we were wondering if you’d kindly answer a couple of questions we have?

The MSA 2042 will be linked via 6Gbps SAS cables, to 2 Hyper-V hosts, which are clustered.

As the MSA isn’t in production yet, we are playing around with configurations, and also trying to follow HP best practices.

Ok..

Along with the standard 2 SSDs which come with the unit, we have also purchased:

– 1 x 400GB SSD disk

– 12 X 900GB 10k disks

We’ve read about the awesome tiering that the MSA does, but now we’re playing with different scenarios I think we’re a bit confused with the disk groups and volumes.

Our original plan was to have the 3 SSDs in a RAID-5 group (hence the purchase of 1 more SSD), with the 12 X 900GB disks in a RAID-10 group.

HP state that best practice dictates that disk groups should be balanced across both controllers for best performance. This would mean:

– Splitting our 12x 900GB drives into 2 disk groups

– Purchasing one more SSD to create 2 SSD disk groups.

One of each type of group would then be assigned to ‘A’ (Controller A) or ‘B’ (Controller B).

We obviously don’t have 4 SSDs, and are also worried that splitting the 12 x 900GB disks would result in 2 disk groups with a reduced spindle count (contrasted against one disk group with ALL of the 12 x 900GB disks), therefore immediately introducing a reduction in IOPS.

With this in mind, we are left with the following choices:

– Create one disk group containing all 3 SSDs, in a RAID-5 configuration

– Create one disk group containing all of the 900GB disks, in a RAID 10 configuration

This would mean the SSD group sits with controller A, and the 900GB group sits with controller B.

Once the disk groups have been created, we would then have to create the volumes to sit across the top. As we have two disk groups, this would mean a total of two volumes. My question then is, once volumes have been mapped to both hosts, each host would see 2 separate volumes. Surely the idea is that the hosts see one large disk within Windows? We don’t want to choose whether a VM sits on disk A (say SSD) or disk B (900GB drives) – we want to dump files on a volume and have the MSA tier accordingly based on access.

This has then caused us to ask whether we should create one disk group comprising all disks (SSD & standard 900GB), with one volume strapped across the top, which then gets mapped to each host? This would mean that only one controller would be utilised – wouldn’t it?

Any help would be much appreciated.

Hi J Howard,

Thanks for reaching out.

You’re correct that HPe recommends balancing disk groups across both controllers (this is so that you can spread the I/O load across multiple controllers, which helps reduce bottlenecks).

Keep in mind that each controller has its own “Storage Pool”, and inside each storage pool are disk groups.

In order for tiering to work, the SSD cache disk group needs to be owned by the same controller that owns the traditional disk group (both the RAID 10 and SSD disk groups need to be in the same storage pool).

You asked about having the RAID 10 array on one controller and the SSDs on the other. This won’t work, as they need to be in the same pool and owned by the same controller for the automatic tiering to function. You also mentioned spanning across multiple volumes. This also won’t work: tiering wouldn’t be active, the volume on the host would simply span two SAN volumes (no tiering), and you’d have no control over what goes on which media.

Essentially, if you don’t purchase anything more and you want a single accessible volume, your game plan should be to create the RAID 10 disk group and the RAID 5 SSD disk group in the same storage pool (same controller) for the tiering to function. Your second controller will be idle and won’t be used for I/O, but if everything is configured properly it will be available for controller failover.

If you did have additional funds in your budget, wanted to change your design, wanted 2 accessible volumes and wanted to utilize both controllers for I/O, you could purchase more disks and SSDs. But in this case each storage pool (controller) will need to have its own disk groups. Each controller/storage pool will need its own traditional disk group, and its own SSD disk group.
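To make the “same pool” rule a little more concrete, here is a rough Python sketch that models the layouts discussed in this thread and reports which storage pools hold both an SSD disk group and a traditional disk group. This is purely an illustration of the rule as described here, not anything the MSA itself exposes. The DiskGroup class, its fields, and the tiering_pools function are names I made up for the example.

```python
from dataclasses import dataclass


@dataclass
class DiskGroup:
    # Hypothetical model of a disk group, for illustration only.
    name: str
    media: str  # "ssd" or "hdd"
    raid: str
    disks: int
    pool: str   # "A" or "B" (one storage pool per controller)


def tiering_pools(groups):
    """Return the pools that contain both an SSD and a traditional disk group."""
    media_by_pool = {}
    for group in groups:
        media_by_pool.setdefault(group.pool, set()).add(group.media)
    return sorted(
        pool for pool, media in media_by_pool.items() if {"ssd", "hdd"} <= media
    )


# Layout from the question: SSDs on Controller A, 900GB disks on Controller B.
split_layout = [
    DiskGroup("ssd-r5", "ssd", "RAID 5", 3, pool="A"),
    DiskGroup("sas-r10", "hdd", "RAID 10", 12, pool="B"),
]

# Layout suggested in the reply: both disk groups in the same storage pool.
single_pool_layout = [
    DiskGroup("ssd-r5", "ssd", "RAID 5", 3, pool="A"),
    DiskGroup("sas-r10", "hdd", "RAID 10", 12, pool="A"),
]

if __name__ == "__main__":
    print("split layout, pools able to tier:", tiering_pools(split_layout))
    print("single pool layout, pools able to tier:", tiering_pools(single_pool_layout))
```

With the split layout no pool qualifies, while the single pool layout leaves pool A able to tier and pool B empty, which matches the trade-off described above.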

I hope what I am saying is clear. Let me know if you have any questions or need me to explain anything in more detail.

Cheers,

Stephen

Hi Stephen,

Many thanks for the speed of your response.

Having spoken to HP yesterday, your comments fall in line with their advice. Our perception of tiering mechanics was a little off, and we now understand that both types of disk need to reside in the same pool for tiering to take effect.

It’s a tussle between having all disks in one pool, owned by one controller, giving the maximum number of IOPS, and splitting the disks across two pools, with only one containing the SSDs. Whilst this means that both controllers are active, there will be a drop in IOPS, and there will be one pool that only contains the 10k drives. That’s a decision we are yet to make. Your benchmark perf results look as though we needn’t worry, although you had more disks in the array if I recall.

Many thanks Stephen. I may return with more questions as we proceed with the setup of the unit.

J Howard.

Hi Stephen

We are planning to connect an MSA 2040 with 2 controllers to an HP ProLiant DL380 Gen9.

Please advise us on the best way to connect them.

Hi AOM,

There is no best way, it all depends on what you’re trying to accomplish, what you’re using it for, the type of redundancies you require, number of hosts, workload types, etc…

Your solution architect should consider all of these factors. If you don’t have one, I’d recommend reaching out to an HPe partner in your country to recommend a configuration based on your requirements (I’d offer my services, but you’re in a different region).

Cheers,

Stephen